Hi everyone,

I'm migrating to new server hardware, but would like to keep using the same disks for Proxmox (expensive enterprise SSDs). I'll refer to the disks as A and B.

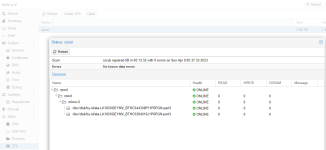

If I move disks A and B to the target server, it fails to boot. The RAID controller in the new server shows that one disk is normal and the other "contains SMART configuration data and has been hidden from the OS". I have the option to clear this data, but I'm unsure what that entails.

If I move only disk A, the server does not boot, and no messages are shown in the RAID controller or elsewhere.

If I move only disk B, the server does not boot, and the same message as above is shown in the RAID controller.

Reversing the order of the disks does nothing.

Reinstalling the disks back into the previous server allows this server to boot into Proxmox again and reports no errors.

I'm at a bit of a loss as to how to proceed.

Can I safely clear the SMART data without losing the data on the disk? Or do I need to do some intermediate step, like a fresh install of Proxmox on an extra HDD, cluster it with the existing server, migrate the VMs, then shut down the old server and install Proxmox again on the target disks installed in the new server?

And finally, if zpool displays these disks as a mirror, can Proxmox simply boot in the old server from just one of these disks? If so, I could move the other disk to the new server, install Proxmox on it and move stuff over, then add the second disk to the mirror in the target server?
