Hi, OK, here is the output:

pveversion -v

proxmox-ve: 7.0-2 (running kernel: 5.11.22-5-pve)

pve-manager: 7.0-8 (running version: 7.0-8/b1dbf562)

pve-kernel-5.11: 7.0-8~bpo10+1

pve-kernel-helper: 7.0-3

pve-kernel-5.11.22-5-pve: 5.11.22-10~bpo10+1

pve-kernel-5.11.22-4-pve: 5.11.22-8~bpo10+1

pve-kernel-5.11.22-1-pve: 5.11.22-2

ceph-fuse: 15.2.13-pve1

corosync: 3.1.2-pve2

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.0.0-1+pve5

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.22-pve1~bpo10+1

libproxmox-acme-perl: 1.1.1

libproxmox-backup-qemu0: 1.2.0-1

libpve-access-control: 7.0-4

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.0-4

libpve-guest-common-perl: 4.0-2

libpve-http-server-perl: 4.0-2

libpve-storage-perl: 7.0-7

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 4.0.9-2

lxcfs: 4.0.8-pve1

novnc-pve: 1.2.0-3

proxmox-backup-client: 2.0.1-1

proxmox-backup-file-restore: 2.0.1-1

proxmox-mini-journalreader: 1.2-1

proxmox-widget-toolkit: 3.2-4

pve-cluster: 7.0-3

pve-container: 4.0-5

pve-docs: 7.0-5

pve-edk2-firmware: 3.20200531-1

pve-firewall: 4.2-2

pve-firmware: 3.3-2

pve-ha-manager: 3.3-1

pve-i18n: 2.4-1

pve-qemu-kvm: 6.0.0-2

pve-xtermjs: 4.12.0-1

qemu-server: 7.0-7

smartmontools: 7.2-pve2

spiceterm: 3.2-2

vncterm: 1.7-1

zfsutils-linux: 2.0.6-pve1~bpo10+1

pct config 101

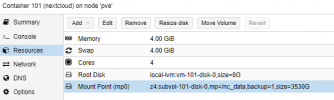

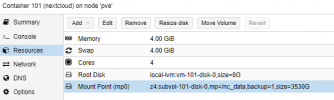

arch: amd64

cores: 4

hostname: nextcloud

memory: 4096

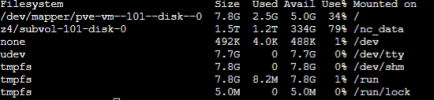

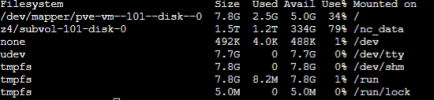

mp0: z4:subvol-101-disk-0,mp=/nc_data,backup=1,size=3530G

net0: name=eth0,bridge=vmbr0,firewall=1,gw=192.168.1.1,hwaddr=EE:26:0B:10:F9:66,ip=192.168.1.121/24,type=veth

onboot: 1

ostype: debian

rootfs: local-lvm:vm-101-disk-0,size=8G

swap: 4096

unprivileged: 1

zfs get all z4/subvol-101-disk-0

NAME PROPERTY VALUE SOURCE

z4/subvol-101-disk-0 type filesystem -

z4/subvol-101-disk-0 creation Mon Aug 30 23:16 2021 -

z4/subvol-101-disk-0 used 1.17T -

z4/subvol-101-disk-0 available 333G -

z4/subvol-101-disk-0 referenced 1.17T -

z4/subvol-101-disk-0 compressratio 1.05x -

z4/subvol-101-disk-0 mounted yes -

z4/subvol-101-disk-0 quota 3.42T local

z4/subvol-101-disk-0 reservation none default

z4/subvol-101-disk-0 recordsize 128K default

z4/subvol-101-disk-0 mountpoint /z4/subvol-101-disk-0 default

z4/subvol-101-disk-0 sharenfs off default

z4/subvol-101-disk-0 checksum on default

z4/subvol-101-disk-0 compression on inherited from z4

z4/subvol-101-disk-0 atime on default

z4/subvol-101-disk-0 devices on default

z4/subvol-101-disk-0 exec on default

z4/subvol-101-disk-0 setuid on default

z4/subvol-101-disk-0 readonly off default

z4/subvol-101-disk-0 zoned off default

z4/subvol-101-disk-0 snapdir hidden default

z4/subvol-101-disk-0 aclmode discard default

z4/subvol-101-disk-0 aclinherit restricted default

z4/subvol-101-disk-0 createtxg 1571 -

z4/subvol-101-disk-0 canmount on default

z4/subvol-101-disk-0 xattr sa local

z4/subvol-101-disk-0 copies 1 default

z4/subvol-101-disk-0 version 5 -

z4/subvol-101-disk-0 utf8only off -

z4/subvol-101-disk-0 normalization none -

z4/subvol-101-disk-0 casesensitivity sensitive -

z4/subvol-101-disk-0 vscan off default

z4/subvol-101-disk-0 nbmand off default

z4/subvol-101-disk-0 sharesmb off default

z4/subvol-101-disk-0 refquota 3.45T local

z4/subvol-101-disk-0 refreservation none default

z4/subvol-101-disk-0 guid 15788814030405313371 -

z4/subvol-101-disk-0 primarycache all default

z4/subvol-101-disk-0 secondarycache all default

z4/subvol-101-disk-0 usedbysnapshots 0B -

z4/subvol-101-disk-0 usedbydataset 1.17T -

z4/subvol-101-disk-0 usedbychildren 0B -

z4/subvol-101-disk-0 usedbyrefreservation 0B -

z4/subvol-101-disk-0 logbias latency default

z4/subvol-101-disk-0 objsetid 654 -

z4/subvol-101-disk-0 dedup off default

z4/subvol-101-disk-0 mlslabel none default

z4/subvol-101-disk-0 sync standard default

z4/subvol-101-disk-0 dnodesize legacy default

z4/subvol-101-disk-0 refcompressratio 1.05x -

z4/subvol-101-disk-0 written 1.17T -

z4/subvol-101-disk-0 logicalused 1.24T -

z4/subvol-101-disk-0 logicalreferenced 1.24T -

z4/subvol-101-disk-0 volmode default default

z4/subvol-101-disk-0 filesystem_limit none default

z4/subvol-101-disk-0 snapshot_limit none default

z4/subvol-101-disk-0 filesystem_count none default

z4/subvol-101-disk-0 snapshot_count none default

z4/subvol-101-disk-0 snapdev hidden default

z4/subvol-101-disk-0 acltype posix local

z4/subvol-101-disk-0 context none default

z4/subvol-101-disk-0 fscontext none default

z4/subvol-101-disk-0 defcontext none default

z4/subvol-101-disk-0 rootcontext none default

z4/subvol-101-disk-0 relatime off default

z4/subvol-101-disk-0 redundant_metadata all default

z4/subvol-101-disk-0 overlay on default

z4/subvol-101-disk-0 encryption off default

z4/subvol-101-disk-0 keylocation none default

z4/subvol-101-disk-0 keyformat none default

z4/subvol-101-disk-0 pbkdf2iters 0 default

z4/subvol-101-disk-0 special_small_blocks 0 default
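For anyone comparing the numbers above, here is a rough sketch of the arithmetic. It assumes that the `G` in the `mp0` size and the `T` in the ZFS output are both binary units (GiB and TiB); the variable and function names are just made up for illustration. The values are copied from the `pct config 101` and `zfs get all` output.

```python
# Values copied from the output above; the unit interpretation
# (G = GiB, T = TiB) is an assumption, not something the output states.
MP0_SIZE_GIB = 3530   # size=3530G from `pct config 101`
REFQUOTA_TIB = 3.45   # refquota from `zfs get all`
QUOTA_TIB = 3.42      # quota from `zfs get all`
USED_TIB = 1.17       # used from `zfs get all`


def gib_to_tib(gib: float) -> float:
    """Convert GiB to TiB (1 TiB = 1024 GiB)."""
    return gib / 1024


size_tib = gib_to_tib(MP0_SIZE_GIB)

# Space left before each limit would be reached, in TiB.
refquota_headroom = REFQUOTA_TIB - USED_TIB
quota_headroom = QUOTA_TIB - USED_TIB

print(f"mp0 size:          {size_tib:.2f} TiB")
print(f"refquota headroom: {refquota_headroom:.2f} TiB")
print(f"quota headroom:    {quota_headroom:.2f} TiB")
```

Under that assumption, the `mp0` size rounds to the same value as `refquota`, and both quota limits leave far more room than the 333G that `available` reports, so the quotas on this dataset do not look like the thing limiting `available`.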