Hello

I've recently noticed three things about my two SSDs running in a mirror that was created during the Proxmox installation.

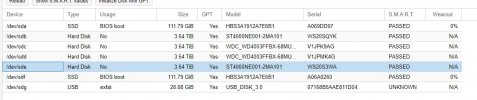

First, I noticed that in the GUI under Node -> Disks, the Usage column shows the word "partitions" for each SSD (this installation was done in MBR mode).

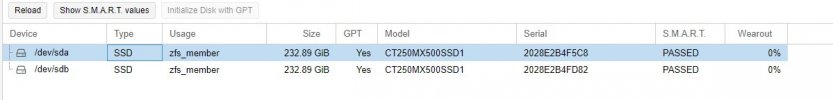

On another Proxmox installation, also with two SSDs in a mirror, GUI -> Node -> Disks shows "zfs_member" under Usage (this installation was done in UEFI mode).

Why is the naming different?

Second, on both of these (separate) nodes, running `zpool status` displays the following message:

> status: Some supported features are not enabled on the pool. The pool can still be used, but some features are unavailable.
>
> action: Enable all features using 'zpool upgrade'. Once this is done, the pool may no longer be accessible by software that does not support the features. See zpool-features(5) for details.

Does anyone else get this message, and has anyone run the `zpool upgrade` command?

Last but not least: the wearout level shows 0% for both drives (keep in mind that they are both new, with less than a day of operating hours). They are identical WD drives, probably enterprise models, 128 GB each, model HBS3A1912A7E6B1. Yet when I click "Show SMART values" for either of them, I get a Media Wearout Indicator line with a value of 10 (a while ago it was 9).

Does anyone have experience with any of the issues above?

Thank you

PS: This may be important and I forgot to mention it. Since the Dell T7610 workstation has no official room for 10 drives (which I managed to fit anyway), one SSD is connected to the motherboard via a PCIe card from OWC and the other via the ODD (optical drive) connector, after removing the CD-ROM drive and replacing it with an HDD caddy.