Hi,

I've searched the forum but didn't find a thread that answers my question. I have a cluster with a few nodes, and two of the nodes have NVMe drives. I created a VM on node1 with one local disk plus one NVMe disk. When I try to migrate it to node2, the migration fails, because on node2 I cannot add a storage with the same ID (nvme) as on the first node. So what's the best practice for migrating a VM with two local disks to another node?

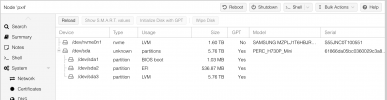

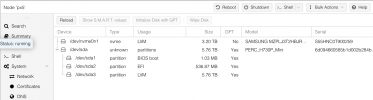

How I added the NVMe datastores:

1. Datacenter > Storage > Add > LVM-Thin, which I added with the ID nvme

2. Datacenter > Storage > Add > LVM-Thin, which I added with the ID nvme-node2 (because I got an error that the ID nvme is already in use)

Thanks for your answers.
