Hi all,

I am dealing with a crashed HA setup that consists mainly of two servers. One of the servers has crashed. I managed to start up the second server, but to do so I had to mess with the HA config (corosync) a bit. Now I am trying to migrate the LXC containers and KVM guests to the second server until the new hardware arrives.

Is there a common way to "free" the stuck containers from pve01 and migrate them to pve02?

I can access the /etc/pve/nodes directory and can even see the config files. Images and some other data are stored on a still-working NFS share. If I try to simply copy config files from /etc/pve/nodes/pve01 to /etc/pve/nodes/pve02, I get a permission error.
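For reference, the move I am attempting can be sketched as a dry run that only prints the commands. This is a sketch under assumptions, not something verified on this cluster: the guest IDs 101 and 201 are hypothetical, and the `pvecm expected 1` step assumes the permission error comes from /etc/pve being read-only while the remaining node has no quorum.

```shell
#!/bin/sh
# Dry-run sketch: prints the commands instead of executing them.
# Node names pve01/pve02 are from this post; the guest IDs are hypothetical.

SRC=/etc/pve/nodes/pve01
DST=/etc/pve/nodes/pve02

plan_move() {
    # $1 = guest config directory ("lxc" or "qemu-server"), $2 = guest ID
    echo "mv $SRC/$1/$2.conf $DST/$1/$2.conf"
}

# Assumption: /etc/pve is read-only without quorum, so the expected
# vote count may have to be lowered on the surviving node first.
echo "pvecm expected 1"
plan_move lxc 101          # hypothetical container ID
plan_move qemu-server 201  # hypothetical VM ID
```

The commands are printed rather than run so that they can be reviewed first; moving a config while pve01 could still come back and start the same guest would risk two instances writing to the same image.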

If I create a new LXC container on pve02 (regardless of whether I reuse the old MAC address and/or IP) and copy the raw image into the new container, I can start the container, but I cannot get a working SSH connection and cannot log in via the Proxmox console either. I get a login prompt in the console, but the former usernames and passwords no longer work. I suspect that no shell is working at all, or at least that sshd is not starting up correctly. By the way, I can ping the machines, so the network interfaces seem to be working in general.

Does anyone know how to "free" the stuck containers from pve01 and restart them on pve02, or at least what the preferred way is to mount the raw files in another container to get access to the data inside?
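To make the second option concrete, this is roughly what I have in mind for getting at the data, again as a dry run that only prints the commands. It assumes the container's raw image holds a plain filesystem without a partition table, so that a read-only loop mount on the host is enough; the storage name `nfs-share`, the guest ID, and the mount point are hypothetical.

```shell
#!/bin/sh
# Dry-run sketch: prints the commands instead of executing them.
# Assumption: the raw image contains a bare filesystem (no partition table).

plan_mount() {
    # $1 = path to the raw image, $2 = mount point on the host
    echo "mkdir -p $2"
    echo "mount -o loop,ro $1 $2"
}

# Hypothetical storage name, guest ID, and mount point.
plan_mount /mnt/pve/nfs-share/images/101/vm-101-disk-0.raw /mnt/ct101
```

Mounting read-only is deliberate here, so that inspecting the files cannot change the image.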

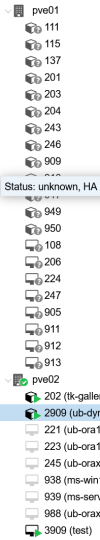

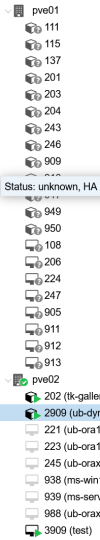

Attached is a partial screenshot of how it currently looks in the web GUI.

Thanks in advance

Michael
