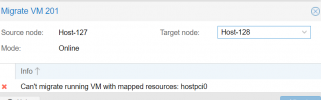

Migrate VM with vGPU to another host

- Thread starter PNXNS



https://pve.proxmox.com/wiki/PCI_Pa...ith passed-through devices cannot be migrated.

Blockbridge : Ultra low latency all-NVME shared storage for Proxmox - https://www.blockbridge.com/proxmox

Code:

PCI passthrough allows you to use a physical PCI device (graphics card, network card) inside a VM (KVM virtualization only).

If you "PCI passthrough" a device, the device is not available to the host anymore. Note that VMs with passed-through devices cannot be migrated.

Note that this only applies to live migration. Offline migration should work without problems (that's one use case for the resource mappings).

Live migration with vGPUs could work (with a new enough kernel), but we have to make some adaptations to the code for that (for now the code assumes that PCI passthrough devices cannot be migrated, which would then no longer hold, for example).

> but we have to make some adaptations to the code for that

Is that planned or just aspirational?

> Is that planned or just aspirational?

(This is 100% personal opinion, so sorry in advance for exaggerations and strong words.)

You realize that this isn't a 10-minute exercise?

GPUs themselves hold an incredible amount of state and then there is the host-side mapping, too. It's also not really just a Proxmox feature but something that KVM and Linux need to support.

So I'd say don't hold your breath, especially since this affects lots of other heterogeneous compute devices and accelerators that would love the ease of live migration, while the x86/PCIe architecture lacks the proper hardware abstractions to make that easy. Linux, like any other Unix, doesn't really understand heterogeneous computing devices, and there is little chance this will improve as these devices experience a Cambrian explosion in diversity.

The biggest thing missing here is hyperscaler interest: migrating a virtual machine isn't something they'd generally do, and even less so for pass-through accelerators.

Actually I'd say we're lucky to have live migrations at all, because they date back to the days when consolidation via scale-in was much more the norm. Now that hyperscaler scale-out is the prevalent operational mode, the chances of such functionality being developed would be much slimmer, IMHO.

Just look at how CRIU and container live migration have basically never gone mainstream.

> You realize that this isn't a 10-minute exercise?

I have worked in development on operating systems, on features you have used every day if you use Windows, Hyper-V and remoting, so yes, I have an incredibly good idea of the amount of work, not to mention the reliance on upstream features.

I asked because I want to plan my strategy of what I put where, and the feature is evidently something the devs have noodled on and I just wanted to get a sense of how they think about it, but thanks for replying for them instead.

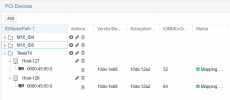

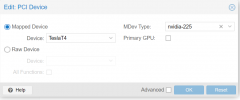

> Offline migration should work without problems

Now that I have actually implemented a multi-node pool, I have some more observations / asks / questions:

- Feature request: when clicking migrate on an online machine in the UI, rather than blocking the migration, offer to do an offline migration (i.e. shut down the machine, move it, restart it). I assume that if I use the command line to do the move I won't be blocked like in the UI?

- I am unclear what happens in an HA event: will the machine migrate to a running node, start up and just pick the first device?

- Given that Proxmox understands the pool and the first device is picked, I assume the worries in a live migration are:

  - the memory state of the vGPU being invalidated, as the target VFs will not have state and will need to be reinitialized?

  - whether the guest OS sees a hotplug event or not

  - whether the guest OS driver can cope with that (on Windows, given the driver model, my hope would be that it would be treated, at worst, like any other video driver crash and re-initialize)?

  - I am sure I am missing something too..?

- I guess for PCIe passthrough there would be a need to treat devices either as hot-plug or not (i.e. some devices would be fine to migrate live and some would not, and there would need to be a UI mechanism to classify them... definitely a complex set of UI features required!)

- I love that the VM on start will just pick the first available VFs - that's super neat!


> I have worked in development on operating systems, on features you have used every day if you use Windows, Hyper-V and remoting, so yes, I have an incredibly good idea of the amount of work, not to mention the reliance on upstream features.
>
> I asked because I want to plan my strategy of what I put where, and the feature is evidently something the devs have noodled on and I just wanted to get a sense of how they think about it, but thanks for replying for them instead.

Well, I've been there and wanted that, too.

I know how easy it is to want that feature, especially if you happen to have a relatively uniform farm, where sizeable groups of hosts have identical GPUs. At least GPUs (unlike some other devices) have state that can be wholly mapped to RAM and thus could in theory be copied. But since that's not true for many other classes of devices, KVM and other hypervisors have always drawn a line at PCI pass-through.

And when it comes to CUDA, one could even imagine logic similar to the live-migration checks that vSphere, Xen or KVM currently perform, which look for the lowest common denominator of CPU types, RAM etc. At least CUDA devices so far have a clear linear hierarchy of compute facilities, unlike some of the x86 ISA extensions.
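As a rough illustration of that lowest-common-denominator idea, here is a minimal Python sketch. It assumes each GPU can be summarized by a single `(major, minor)` version pair that orders linearly, which is how I read the "linear hierarchy" above. The node names and version numbers are made up for the example, and none of this is an existing Proxmox or KVM check.

```python
def common_baseline(hosts: dict[str, list[tuple[int, int]]]) -> tuple[int, int]:
    """Return the lowest (major, minor) version found on any GPU in the farm.

    Tuples compare element by element, so min() follows a linear hierarchy.
    """
    return min(version for gpus in hosts.values() for version in gpus)


def target_is_compatible(required: tuple[int, int],
                         target_gpus: list[tuple[int, int]]) -> bool:
    """A target qualifies if at least one of its GPUs meets the requirement."""
    return any(version >= required for version in target_gpus)


# Illustrative inventory only; names and numbers are assumptions.
farm = {
    "node-a": [(8, 6)],
    "node-b": [(7, 5), (8, 6)],
    "node-c": [(8, 9)],
}

baseline = common_baseline(farm)
print("baseline for the whole farm:", baseline)
print("node-b can host a VM that needs (8, 6):",
      target_is_compatible((8, 6), farm["node-b"]))
```

A guest restricted to the baseline could in principle land on any node, which is the same trade-off as masking CPU flags down to a common CPU model.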

But CUDA already makes it pretty obvious that the ROI for such live-migration logic would be very low, because CUDA applications really need to know what they are running on, in order to configure the sizes of their wavefronts and pick the code variants that match the capabilities of the hardware. A CUDA app first takes an inventory, then sets up for a given number of cores and capabilities and lets things rip. AFAIK there is no ability to re-adjust kernels mid-flight, or to do some kind of on-the-fly reconfiguration to match fluctuating demands, let alone live migrations.

In terms of architecture, CUDA is 1960s batch processing inside the GPU, and vGPUs are an attempt to simulate something like VM/370. The cost of migration and reconfiguration is so huge that time slices smaller than seconds might not be worth the effort, while the workloads that tolerate that are probably just HPC and AI training, certainly not VDI, games or inference.

Most of the HPC and AI training jobs already contain checkpointing logic, so for them live-migration is essentially solved externally.
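To make "solved externally" concrete, here is a minimal sketch of the checkpoint-and-resume pattern such jobs tend to use. Everything in it is illustrative: the JSON file, the `step`/`total` state and the `stop_after` parameter (which simulates the job being stopped before a move) are assumptions for the example, not any particular framework's API.

```python
import json
import os
import tempfile


def save_checkpoint(path: str, state: dict) -> None:
    """Write the state to a temp file, then rename it over the old checkpoint."""
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump(state, f)
    os.replace(tmp, path)  # atomic rename: never leaves a half-written file


def load_checkpoint(path: str) -> dict:
    """Resume from the last checkpoint, or start fresh if there is none."""
    if not os.path.exists(path):
        return {"step": 0, "total": 0}
    with open(path) as f:
        return json.load(f)


def run_job(path: str, steps: int, stop_after=None) -> dict:
    """Run up to `steps` steps; `stop_after` simulates an interruption."""
    state = load_checkpoint(path)
    while state["step"] < steps:
        if stop_after is not None and state["step"] >= stop_after:
            break  # job stopped here, e.g. before the VM is moved offline
        state["total"] += state["step"]  # stand-in for real GPU work
        state["step"] += 1
        save_checkpoint(path, state)
    return state


with tempfile.TemporaryDirectory() as workdir:
    ckpt = os.path.join(workdir, "job.json")
    interrupted = run_job(ckpt, steps=10, stop_after=4)  # stopped on "host A"
    resumed = run_job(ckpt, steps=10)                    # restarted on "host B"
    print(interrupted, resumed)
```

With the checkpoint on shared storage, the job only needs an offline migration or a plain restart on another host, not a live one.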

So it's very hard to see how the critical mass of skill, free developer time and financial incentive are ever going to condense in a single place to make this happen, even if it's technically possible.

Yes, I'd love to have that, too. But unless your data and conclusions are very different, it looks like it's not going to happen.


> Is that planned or just aspirational?

We want to implement it, yes, but there is no timetable yet, as this touches quite a few places in our VM management logic.

To answer the remaining questions (please ping me if I forgot something or it's unclear):

> Feature request: when clicking migrate on an online machine in the UI, rather than blocking the migration, offer to do an offline migration (i.e. shut down the machine, move it, restart it). I assume that if I use the command line to do the move I won't be blocked like in the UI?

No, currently there is no such feature for VMs (containers have such a 'restart' migration, VMs do not yet). Feel free to open an enhancement request here: https://bugzilla.proxmox.com
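Until something like that exists, the shut down, move, restart sequence can be scripted by hand. The following is a minimal Python sketch, not an official tool. It assumes it runs on the node that currently hosts the VM, that `qm shutdown` and `qm migrate` behave as documented, and that the VM can be started on the target through the `pvesh create /nodes/<node>/qemu/<vmid>/status/start` API path. The VM ID `100` and node name `pve2` are placeholders. It only prints the commands unless `dry_run=False` is passed, so please verify each command on your version first.

```python
import shlex
import subprocess


def offline_migration_plan(vmid: int, target_node: str) -> list[list[str]]:
    """Return the commands for a shutdown -> migrate -> start sequence."""
    return [
        # Cleanly shut the guest down so the passed-through device is released.
        ["qm", "shutdown", str(vmid)],
        # Without --online this is an offline migration of the stopped VM.
        ["qm", "migrate", str(vmid), target_node],
        # qm only manages VMs on the local node, so the start request goes
        # through the API path of the target node instead (assumed path).
        ["pvesh", "create", f"/nodes/{target_node}/qemu/{vmid}/status/start"],
    ]


def run_plan(plan: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in plan:
        print(shlex.join(cmd))
        if not dry_run:
            # check=True aborts the sequence as soon as one step fails.
            subprocess.run(cmd, check=True)


plan = offline_migration_plan(100, "pve2")
run_plan(plan)  # dry run: prints the three commands without executing them
```

The guest is down for the whole sequence, so this is a planned-maintenance tool, not a substitute for live migration.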

> - I am unclear what happens in an HA event: will the machine migrate to a running node, start up and just pick the first device?

If by 'HA event' you mean that a node loses its connection to the rest of the cluster, then no, a migration cannot work. If a node loses its connection, the node will fence itself and the VM will simply be restarted on another node (according to the HA group settings).

> Given that Proxmox understands the pool and the first device is picked, I assume the worries in a live migration are:
>
> - the memory state of the vGPU being invalidated, as the target VFs will not have state and will need to be reinitialized?
>
> - whether the guest OS sees a hotplug event or not
>
> - whether the guest OS driver can cope with that (on Windows, given the driver model, my hope would be that it would be treated, at worst, like any other video driver crash and re-initialize)?
>
> - I am sure I am missing something too..?

Actually no, the memory state etc. is handled by QEMU and the driver, so that is not the problem.

The issues are mostly that, when generating the QEMU command line, we did not have to be careful to generate the same layout when PCI passthrough is enabled (it probably is the same in most cases, but we'd have to check and make sure that this now always holds).

There are some things where we have to check how they'd work, e.g. snapshots, suspend/resume etc. (they use mechanics similar to live migration).

Then there is the integration into the config, to make sure users can only configure that for devices that are able to live migrate (e.g. how do we detect if a vGPU is live-migratable? Do we simply let the user determine that and fail if it does not work?).

And mostly it's about testing that it works reliably (e.g. NVIDIA only officially supports Citrix + VMware for live migration AFAICS, but it should work on recent kernels).
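To illustrate the layout concern from the first point: for a live migration, the guest has to see every device at the same PCI address on both hosts. The Python sketch below contrasts an order-dependent slot assignment with a lookup in a fixed table. The table, the slot numbers and the function names are hypothetical and are not taken from the Proxmox code base.

```python
# Hypothetical fixed table: config key -> guest PCI slot number.
FIXED_SLOTS = {"scsihw0": 5, "hostpci0": 16, "hostpci1": 17, "net0": 18}


def layout_sequential(devices: list[str], first_slot: int = 16) -> dict[str, int]:
    """Hand out slots in iteration order: the result depends on that order."""
    return {dev: first_slot + i for i, dev in enumerate(devices)}


def layout_fixed(devices: list[str]) -> dict[str, int]:
    """Look every device up in the fixed table: the order no longer matters."""
    return {dev: FIXED_SLOTS[dev] for dev in devices}


def layouts_match(source: dict[str, int], target: dict[str, int]) -> bool:
    """Pre-migration check: both sides must place every device identically."""
    return source == target


# The same devices, enumerated in a different order on each host.
on_source = ["net0", "hostpci0"]
on_target = ["hostpci0", "net0"]

print("sequential layouts match:",
      layouts_match(layout_sequential(on_source), layout_sequential(on_target)))
print("fixed layouts match:",
      layouts_match(layout_fixed(on_source), layout_fixed(on_target)))
```

A check like `layouts_match` run before the migration starts would turn a silent guest-side mismatch into an early, explicit failure.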

> - I guess for PCIe passthrough there would be a need to treat devices either as hot-plug or not (i.e. some devices would be fine to migrate live and some would not, and there would need to be a UI mechanism to classify them... definitely a complex set of UI features required!)

This does not really have anything to do with hotplug, but yes, as I wrote above, it's also a UX problem.

> - I love that the VM on start will just pick the first available VFs - that's super neat!

Yes, I implemented that because it's super convenient, glad to hear it works as intended.
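The "pick the first available VF" behaviour described above can be pictured with a few lines of Python. This is only a sketch of the idea, assuming a mapping is an ordered list of PCI addresses and that the set of addresses in use by running VMs is known. The addresses are made up, and the real implementation is not shown in this thread.

```python
from typing import Optional


def pick_first_free(pool: list[str], in_use: set[str]) -> Optional[str]:
    """Return the first PCI address in the pool that no running VM is using."""
    for address in pool:
        if address not in in_use:
            return address
    return None  # pool exhausted: the VM cannot start with this mapping


# Illustrative virtual functions of one GPU; the addresses are assumptions.
vf_pool = ["0000:01:00.4", "0000:01:00.5", "0000:01:00.6"]
busy = {"0000:01:00.4"}

print(pick_first_free(vf_pool, busy))
```

Because the choice is made at start time on whichever node the VM lands on, the same logic also covers an HA restart on another node, as long as that node has a free device in the mapping.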

> VM will simply be restarted on another node

Thanks, that's what I meant; I should have said failover, not migrate. Thanks!

Also thanks for the answers, that gives me a good idea of where things stand. I work for one of the companies you mentioned, so I have a little insight into how deep the rabbit hole goes! I am super pleased with my Proxmox PoC - week 3 this week of playing, PBS next up!

> (e.g. how do we detect if a vGPU is live-migratable? Do we simply let the user determine that and fail if it does not work?)

Ahh, I love these dilemmas, no right answer… Unless you are doing a class-based allow list where you hard-code which device classes are and are not migratable, I think you are only left with defaulting to all devices being non-migratable and then having the admin declare what is migratable. Having a list that the Proxmox team would have to keep accurately populated seems to be an exercise in 'whack-a-mole' where the list is constantly updated - but I don't know enough about how PCIe devices declare themselves to know (or what Linux kernel driver constructs might allow that - I am more of a Windows guy…).
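The default-deny variant could look roughly like the Python sketch below: every device counts as non-migratable until an admin explicitly declares otherwise, and a live migration is refused up front if any attached device lacks that declaration. `MappedDevice`, `live_migratable` and the device names are hypothetical names for illustration, not existing Proxmox config options.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MappedDevice:
    """Hypothetical record for one passed-through device of a VM."""
    name: str
    # Default-deny: only an explicit admin declaration flips this to True.
    live_migratable: bool = False


def blocking_devices(devices: list[MappedDevice]) -> list[str]:
    """Names of all devices that have not been declared live-migratable."""
    return [d.name for d in devices if not d.live_migratable]


def check_live_migration(devices: list[MappedDevice]) -> None:
    """Refuse the migration before it starts, naming the blocking devices."""
    blockers = blocking_devices(devices)
    if blockers:
        raise ValueError("cannot live-migrate, blocked by: " + ", ".join(blockers))


vm_devices = [MappedDevice("vgpu0", live_migratable=True), MappedDevice("nic0")]
print(blocking_devices(vm_devices))
```

The same error message could drive the UI: instead of a greyed-out button, the user sees which device blocks the live migration and can be offered an offline one.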


> Ahh, I love these dilemmas, no right answer… Unless you are doing a class-based allow list where you hard-code which device classes are and are not migratable, I think you are only left with defaulting to all devices being non-migratable and then having the admin declare what is migratable. Having a list that the Proxmox team would have to keep accurately populated seems to be an exercise in 'whack-a-mole' where the list is constantly updated - but I don't know enough about how PCIe devices declare themselves to know (or what Linux kernel driver constructs might allow that - I am more of a Windows guy…).

Yeah, if the kernel/device/driver exposes some flag, that would be very nice, but I haven't looked into that yet (too much to do, too little time). In any case I want it to be intuitive and mostly frictionless (so it should not be too much work, but you shouldn't be able to configure it wrongly either, if possible...).

edit: i forgot a word

> but you shouldn't be able to configure it wrongly either, if possible

Indeed, as few 'hangnails' as possible is a good mantra.

There are definitely a lot of those elsewhere in Proxmox that should take priority. For example, when I was doing my Ceph setup I found that if I messed up (got it into a read-only, never-initialized state), it would hang the storage part of the UI completely, for all nodes and all storage types, if I clicked on the wrong thing. But now I am way off topic for this thread, so I will back away slowly.

I'd have an off-topic issue, which I turned into one: how to manage a potentially larger number of on-demand nodes in a cluster that shouldn't have votes while they are offline.