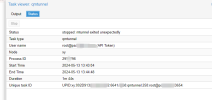

2024-05-13 14:03:54 remote: started tunnel worker 'UPID:xy:002DBA84:03015538:6641AD4A:qmtunnel:138:root@pam!Qq123654:'

tunnel: -> sending command "version" to remote

tunnel: <- got reply

2024-05-13 14:03:54 local WS tunnel version: 2

2024-05-13 14:03:54 remote WS tunnel version: 2

2024-05-13 14:03:54 minimum required WS tunnel version: 2

websocket tunnel started

2024-05-13 14:03:54 starting migration of VM 258 to node 'xy' (110.42.110.28)

tunnel: -> sending command "bwlimit" to remote

tunnel: <- got reply

tunnel: -> sending command "bwlimit" to remote

tunnel: <- got reply

2024-05-13 14:03:54 found local disk 'local:258/vm-258-disk-0.qcow2' (attached)

2024-05-13 14:03:54 found local disk 'local:258/vm-258-disk-1.qcow2' (attached)

2024-05-13 14:03:54 mapped: net1 from vmbr10 to vmbr0

2024-05-13 14:03:54 mapped: net0 from vmbr0 to vmbr0

2024-05-13 14:03:54 Allocating volume for drive 'scsi0' on remote storage 'Data'..

tunnel: -> sending command "disk" to remote

tunnel: <- got reply

2024-05-13 14:03:54 volume 'local:258/vm-258-disk-0.qcow2' is 'Data:138/vm-138-disk-0.qcow2' on the target

2024-05-13 14:03:54 Allocating volume for drive 'scsi1' on remote storage 'Data'..

tunnel: -> sending command "disk" to remote

tunnel: <- got reply

2024-05-13 14:03:55 volume 'local:258/vm-258-disk-1.qcow2' is 'Data:138/vm-138-disk-1.qcow2' on the target

tunnel: -> sending command "config" to remote

tunnel: <- got reply

tunnel: -> sending command "start" to remote

tunnel: <- got reply

2024-05-13 14:03:56 Setting up tunnel for '/run/qemu-server/258.migrate'

2024-05-13 14:03:56 Setting up tunnel for '/run/qemu-server/258_nbd.migrate'

2024-05-13 14:03:56 starting storage migration

2024-05-13 14:03:56 scsi0: start migration to nbd:unix:/run/qemu-server/258_nbd.migrate:exportname=drive-scsi0

drive mirror is starting for drive-scsi0

tunnel: accepted new connection on '/run/qemu-server/258_nbd.migrate'

tunnel: requesting WS ticket via tunnel

tunnel: established new WS for forwarding '/run/qemu-server/258_nbd.migrate'

drive-scsi0: transferred 1.0 MiB of 40.0 GiB (0.00%) in 0s

drive-scsi0: transferred 43.6 MiB of 40.0 GiB (0.11%) in 1s

drive-scsi0: transferred 145.0 MiB of 40.0 GiB (0.35%) in 2s

drive-scsi0: transferred 162.0 MiB of 40.0 GiB (0.40%) in 3s

drive-scsi0: transferred 179.0 MiB of 40.0 GiB (0.44%) in 4s

drive-scsi0: transferred 195.0 MiB of 40.0 GiB (0.48%) in 5s

drive-scsi0: transferred 212.0 MiB of 40.0 GiB (0.52%) in 6s

drive-scsi0: transferred 229.0 MiB of 40.0 GiB (0.56%) in 7s

drive-scsi0: transferred 246.0 MiB of 40.0 GiB (0.60%) in 8s

drive-scsi0: transferred 262.0 MiB of 40.0 GiB (0.64%) in 9s

drive-scsi0: transferred 279.0 MiB of 40.0 GiB (0.68%) in 10s

drive-scsi0: transferred 296.0 MiB of 40.0 GiB (0.72%) in 11s

drive-scsi0: transferred 313.0 MiB of 40.0 GiB (0.76%) in 12s

drive-scsi0: transferred 330.0 MiB of 40.0 GiB (0.81%) in 14s

drive-scsi0: transferred 347.0 MiB of 40.0 GiB (0.85%) in 15s

drive-scsi0: transferred 364.0 MiB of 40.0 GiB (0.89%) in 16s

drive-scsi0: transferred 381.0 MiB of 40.0 GiB (0.93%) in 17s

drive-scsi0: transferred 398.4 MiB of 40.0 GiB (0.97%) in 18s

drive-scsi0: transferred 416.0 MiB of 40.0 GiB (1.02%) in 19s

drive-scsi0: transferred 432.0 MiB of 40.0 GiB (1.05%) in 20s

drive-scsi0: transferred 452.0 MiB of 40.0 GiB (1.10%) in 21s

drive-scsi0: transferred 469.0 MiB of 40.0 GiB (1.15%) in 22s

drive-scsi0: transferred 488.6 MiB of 40.0 GiB (1.19%) in 23s

drive-scsi0: transferred 505.0 MiB of 40.0 GiB (1.23%) in 24s

drive-scsi0: transferred 522.6 MiB of 40.0 GiB (1.28%) in 25s

drive-scsi0: transferred 541.0 MiB of 40.0 GiB (1.32%) in 26s

drive-scsi0: transferred 557.4 MiB of 40.0 GiB (1.36%) in 27s

drive-scsi0: transferred 575.0 MiB of 40.0 GiB (1.40%) in 28s

drive-scsi0: transferred 592.0 MiB of 40.0 GiB (1.45%) in 29s

drive-scsi0: transferred 609.0 MiB of 40.0 GiB (1.49%) in 30s

drive-scsi0: transferred 626.0 MiB of 40.0 GiB (1.53%) in 31s

drive-scsi0: transferred 643.4 MiB of 40.0 GiB (1.57%) in 32s

drive-scsi0: transferred 661.0 MiB of 40.0 GiB (1.61%) in 33s

drive-scsi0: transferred 679.4 MiB of 40.0 GiB (1.66%) in 34s

drive-scsi0: transferred 701.1 MiB of 40.0 GiB (1.71%) in 35s

drive-scsi0: transferred 719.4 MiB of 40.0 GiB (1.76%) in 36s

drive-scsi0: transferred 743.6 MiB of 40.0 GiB (1.82%) in 37s

drive-scsi0: transferred 765.4 MiB of 40.0 GiB (1.87%) in 38s

drive-scsi0: transferred 786.8 MiB of 40.0 GiB (1.92%) in 39s

drive-scsi0: transferred 804.0 MiB of 40.0 GiB (1.96%) in 40s

drive-scsi0: transferred 822.0 MiB of 40.0 GiB (2.01%) in 41s

drive-scsi0: transferred 839.0 MiB of 40.0 GiB (2.05%) in 42s

drive-scsi0: transferred 855.0 MiB of 40.0 GiB (2.09%) in 43s

drive-scsi0: transferred 872.0 MiB of 40.0 GiB (2.13%) in 44s

drive-scsi0: transferred 889.0 MiB of 40.0 GiB (2.17%) in 45s

drive-scsi0: transferred 907.4 MiB of 40.0 GiB (2.22%) in 46s

drive-scsi0: transferred 924.0 MiB of 40.0 GiB (2.26%) in 47s

drive-scsi0: transferred 941.0 MiB of 40.0 GiB (2.30%) in 48s

drive-scsi0: transferred 958.0 MiB of 40.0 GiB (2.34%) in 49s

drive-scsi0: transferred 976.2 MiB of 40.0 GiB (2.38%) in 50s

drive-scsi0: transferred 994.0 MiB of 40.0 GiB (2.43%) in 51s

drive-scsi0: transferred 1010.0 MiB of 40.0 GiB (2.47%) in 52s

drive-scsi0: transferred 1.0 GiB of 40.0 GiB (2.51%) in 53s

drive-scsi0: transferred 1.0 GiB of 40.0 GiB (2.55%) in 54s

drive-scsi0: transferred 1.0 GiB of 40.0 GiB (2.59%) in 55s

drive-scsi0: transferred 1.1 GiB of 40.0 GiB (2.63%) in 56s

drive-scsi0: transferred 1.1 GiB of 40.0 GiB (2.67%) in 57s

drive-scsi0: transferred 1.1 GiB of 40.0 GiB (2.71%) in 58s

drive-scsi0: transferred 1.1 GiB of 40.0 GiB (2.76%) in 59s

drive-scsi0: transferred 1.1 GiB of 40.0 GiB (2.80%) in 1m

drive-scsi0: transferred 1.1 GiB of 40.0 GiB (2.84%) in 1m 1s

drive-scsi0: transferred 1.2 GiB of 40.0 GiB (2.88%) in 1m 2s

drive-scsi0: transferred 1.2 GiB of 40.0 GiB (2.92%) in 1m 3s

drive-scsi0: transferred 1.2 GiB of 40.0 GiB (2.97%) in 1m 4s

drive-scsi0: transferred 1.2 GiB of 40.0 GiB (3.01%) in 1m 5s

drive-scsi0: transferred 1.2 GiB of 40.0 GiB (3.05%) in 1m 6s

drive-scsi0: transferred 1.2 GiB of 40.0 GiB (3.09%) in 1m 7s

drive-scsi0: transferred 1.3 GiB of 40.0 GiB (3.13%) in 1m 8s

drive-scsi0: transferred 1.3 GiB of 40.0 GiB (3.17%) in 1m 9s

drive-scsi0: transferred 1.3 GiB of 40.0 GiB (3.21%) in 1m 10s

drive-scsi0: transferred 1.3 GiB of 40.0 GiB (3.25%) in 1m 11s

drive-scsi0: transferred 1.3 GiB of 40.0 GiB (3.29%) in 1m 12s

drive-scsi0: transferred 1.3 GiB of 40.0 GiB (3.33%) in 1m 13s

drive-scsi0: transferred 1.4 GiB of 40.0 GiB (3.38%) in 1m 14s

drive-scsi0: transferred 1.4 GiB of 40.0 GiB (3.43%) in 1m 15s

drive-scsi0: transferred 1.4 GiB of 40.0 GiB (3.47%) in 1m 16s

drive-scsi0: transferred 1.4 GiB of 40.0 GiB (3.52%) in 1m 17s

drive-scsi0: transferred 1.4 GiB of 40.0 GiB (3.56%) in 1m 18s

drive-scsi0: transferred 1.4 GiB of 40.0 GiB (3.60%) in 1m 19s

drive-scsi0: transferred 1.5 GiB of 40.0 GiB (3.64%) in 1m 20s

drive-scsi0: transferred 1.5 GiB of 40.0 GiB (3.68%) in 1m 21s

drive-scsi0: transferred 1.5 GiB of 40.0 GiB (3.73%) in 1m 22s

drive-scsi0: transferred 1.5 GiB of 40.0 GiB (3.78%) in 1m 23s

drive-scsi0: transferred 2.1 GiB of 40.0 GiB (5.31%) in 1m 24s

drive-scsi0: transferred 2.1 GiB of 40.0 GiB (5.36%) in 1m 25s

drive-scsi0: transferred 2.2 GiB of 40.0 GiB (5.40%) in 1m 26s

drive-scsi0: transferred 2.2 GiB of 40.0 GiB (5.44%) in 1m 27s

drive-scsi0: transferred 2.2 GiB of 40.0 GiB (5.48%) in 1m 28s

drive-scsi0: transferred 2.2 GiB of 40.0 GiB (5.52%) in 1m 29s

drive-scsi0: transferred 2.2 GiB of 40.0 GiB (5.56%) in 1m 30s

drive-scsi0: transferred 2.2 GiB of 40.0 GiB (5.60%) in 1m 31s

drive-scsi0: transferred 2.3 GiB of 40.0 GiB (5.64%) in 1m 32s

drive-scsi0: transferred 2.3 GiB of 40.0 GiB (5.68%) in 1m 33s

drive-scsi0: transferred 2.3 GiB of 40.0 GiB (5.72%) in 1m 34s

drive-scsi0: transferred 2.3 GiB of 40.0 GiB (5.82%) in 1m 35s

drive-scsi0: transferred 2.3 GiB of 40.0 GiB (5.85%) in 1m 36s

drive-scsi0: transferred 40.0 GiB of 40.0 GiB (100.00%) in 1m 37s, ready

all 'mirror' jobs are ready

2024-05-13 14:05:33 scsi1: start migration to nbd:unix:/run/qemu-server/258_nbd.migrate:exportname=drive-scsi1

drive mirror is starting for drive-scsi1

tunnel: accepted new connection on '/run/qemu-server/258_nbd.migrate'

tunnel: requesting WS ticket via tunnel

tunnel: established new WS for forwarding '/run/qemu-server/258_nbd.migrate'

drive-scsi1: transferred 0.0 B of 40.0 GiB (0.00%) in 0s

drive-scsi1: transferred 47.2 MiB of 40.0 GiB (0.12%) in 1s

drive-scsi1: transferred 64.0 MiB of 40.0 GiB (0.16%) in 2s

drive-scsi1: transferred 80.0 MiB of 40.0 GiB (0.20%) in 3s

drive-scsi1: transferred 97.0 MiB of 40.0 GiB (0.24%) in 4s

drive-scsi1: transferred 113.0 MiB of 40.0 GiB (0.28%) in 5s

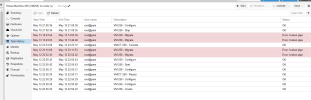

TASK ERROR: broken pipe
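In the log, drive-scsi0 copies at a steady rate of roughly 17 MiB/s (162.0 MiB at 3s to 1010.0 MiB at 52s), with two abrupt steps: from 3.78% to 5.31% at 1m 24s, and from 5.85% straight to 100.00% ("ready") at 1m 37s. drive-scsi1 then runs at a similar rate until the broken pipe. The following is a minimal Python sketch for pulling those numbers out of a task log. The regular expression is written only against the line format visible in this log and is an assumption for other versions; the helper names are hypothetical, and because the log prints rounded sizes, the rates are approximate.

```python
import re
from dataclasses import dataclass

# IEC units as printed by the migration log (1 KiB = 1024 B).
UNITS = {"B": 1, "KiB": 1024, "MiB": 1024**2, "GiB": 1024**3, "TiB": 1024**4}
UNIT = r"(?:B|KiB|MiB|GiB|TiB)"

# Matches progress lines such as
#   drive-scsi0: transferred 162.0 MiB of 40.0 GiB (0.40%) in 3s
#   drive-scsi0: transferred 40.0 GiB of 40.0 GiB (100.00%) in 1m 37s, ready
LINE_RE = re.compile(
    rf"^(?P<drive>drive-\S+): transferred (?P<done>[\d.]+) (?P<done_unit>{UNIT})"
    rf" of [\d.]+ {UNIT} \((?P<pct>[\d.]+)%\) in (?P<elapsed>.+?)(?P<ready>, ready)?$"
)


@dataclass
class Sample:
    drive: str
    done: float     # bytes transferred so far (rounded, as printed)
    percent: float
    seconds: int
    ready: bool


def parse_elapsed(text):
    """Convert durations like '0s', '1m' or '1m 37s' to seconds."""
    factor = {"h": 3600, "m": 60, "s": 1}
    return sum(int(n) * factor[u] for n, u in re.findall(r"(\d+)([hms])", text))


def parse_progress(lines):
    """Extract progress samples, skipping tunnel and status lines."""
    samples = []
    for line in lines:
        m = LINE_RE.match(line.strip())
        if m is None:
            continue
        samples.append(Sample(
            drive=m["drive"],
            done=float(m["done"]) * UNITS[m["done_unit"]],
            percent=float(m["pct"]),
            seconds=parse_elapsed(m["elapsed"]),
            ready=m["ready"] is not None,
        ))
    return samples


def steady_rate_mib_s(samples):
    """Average MiB/s between the first and last non-'ready' sample of one drive."""
    steady = [s for s in samples if not s.ready]
    if len(steady) < 2 or steady[-1].seconds == steady[0].seconds:
        raise ValueError("need two non-ready samples at different times")
    first, last = steady[0], steady[-1]
    return (last.done - first.done) / (last.seconds - first.seconds) / UNITS["MiB"]


def progress_jumps(samples, threshold_pct=1.0):
    """(t_before, t_after, pct_before, pct_after) for steps above threshold_pct."""
    return [
        (a.seconds, b.seconds, a.percent, b.percent)
        for a, b in zip(samples, samples[1:])
        if b.percent - a.percent > threshold_pct
    ]


# Abbreviated excerpt of the drive-scsi0 lines from the log.
sample_log = """\
drive-scsi0: transferred 162.0 MiB of 40.0 GiB (0.40%) in 3s
drive-scsi0: transferred 179.0 MiB of 40.0 GiB (0.44%) in 4s
drive-scsi0: transferred 195.0 MiB of 40.0 GiB (0.48%) in 5s
drive-scsi0: transferred 40.0 GiB of 40.0 GiB (100.00%) in 1m 37s, ready
"""
samples = parse_progress(sample_log.splitlines())
print(f"steady rate: {steady_rate_mib_s(samples):.1f} MiB/s")  # steady rate: 16.5 MiB/s
print(progress_jumps(samples))  # [(5, 97, 0.48, 100.0)]
```

Feeding the full log, filtered to one drive, reports both jumps listed above. The sketch only describes the numbers the task printed and says nothing about why the pipe broke.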

We are using the API call "Migrate virtual machine to a remote cluster. Creates a new migration task. EXPERIMENTAL feature!" (`POST /nodes/{node}/qemu/{vmid}/remote_migrate`).
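The report does not include the exact request, so the sketch below reconstructs a plausible one from what the log shows. VM 258 is the source, and the target VMID is 138 (from the `Data:138/...` volume names and the `qmtunnel:138` UPID). The target storage is `Data`, all bridges map to `vmbr0`, and the remote node is at 110.42.110.28. `online=1` is an assumption based on the live drive mirror in the log. Source host, node name, token IDs, secrets and the certificate fingerprint are placeholders, and both helper functions are hypothetical. The sketch only builds the request and does not send it.

```python
from urllib.parse import quote, urlencode


def build_target_endpoint(host, api_token, secret, fingerprint=None, port=None):
    """Format the 'target-endpoint' property string for the remote cluster."""
    parts = [f"apitoken=PVEAPIToken={api_token}={secret}", f"host={host}"]
    if fingerprint is not None:
        # Remote certificate fingerprint, needed if the system CA store does not trust it.
        parts.append(f"fingerprint={fingerprint}")
    if port is not None:
        parts.append(f"port={port}")
    return ",".join(parts)


def build_remote_migrate_request(source_host, node, vmid, source_token, source_secret,
                                 target_endpoint, target_storage, target_bridge,
                                 target_vmid=None, online=True):
    """Return (url, headers, form_body) for the remote_migrate call on the SOURCE cluster."""
    url = f"https://{source_host}:8006/api2/json/nodes/{quote(node)}/qemu/{vmid}/remote_migrate"
    # API token authentication against the source cluster (no CSRF token needed).
    headers = {
        "Authorization": f"PVEAPIToken={source_token}={source_secret}",
        "Content-Type": "application/x-www-form-urlencoded",
    }
    params = {
        "target-endpoint": target_endpoint,
        "target-storage": target_storage,  # a single storage ID maps every source storage to it
        "target-bridge": target_bridge,    # a single bridge maps every source bridge to it
        "online": int(online),             # assumed: live migration of the running VM
    }
    if target_vmid is not None:
        params["target-vmid"] = target_vmid
    return url, headers, urlencode(params)


# Placeholders except VMIDs 258/138, storage 'Data', bridge 'vmbr0' and the target address.
endpoint = build_target_endpoint("110.42.110.28", "root@pam!TARGET_TOKEN", "TARGET_SECRET",
                                 fingerprint="TARGET_FINGERPRINT")
url, headers, body = build_remote_migrate_request(
    "SOURCE_HOST", "SOURCE_NODE", 258, "root@pam!SOURCE_TOKEN", "SOURCE_SECRET",
    endpoint, target_storage="Data", target_bridge="vmbr0", target_vmid=138,
)
print(url)
print(body)
```

The same operation is available on the command line as `qm remote-migrate`, which takes the same target endpoint, storage and bridge mappings.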
