Hi guys

I started something and I'm afraid I've messed it up:

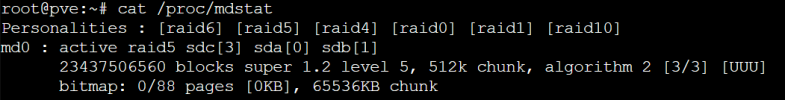

I run a Dell server with 2x 600 GB SSDs in a hardware RAID 1 config and 3x 12 TB HDDs in a software RAID 5 config. The SSDs carry Proxmox VE and the HDDs are for the VMs. Now I added a 16 TB HDD and wanted to expand my software RAID 5. I googled around with increasing confusion and finally followed this guide: https://www.tecmint.com/extend-and-reduce-lvms-in-linux/. Here's what I did so far:

1. Inserted the new HDD into an empty slot (the disk was recognised immediately)

2. Partitioned the new HDD with fdisk (`fdisk -c /dev/sdd`) and created a single partition using the whole disk

3. Created a new PV with pvcreate (`pvcreate /dev/sdd1`) (this was probably the mistake??)

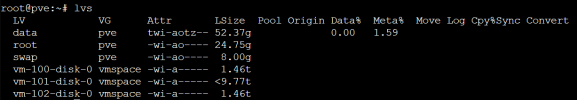

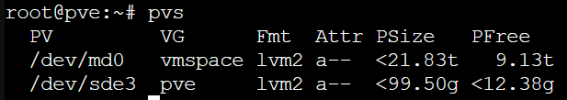

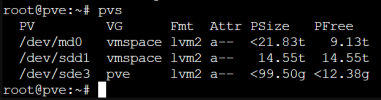

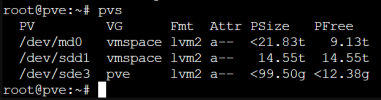

4. Checked with `pvs`:

(Note: it seems to me that, besides the RAID 5 pool (md0), another "vmspace" was created based on the new HDD sdd1?)

5. Extended the volume group with vgextend (`vgextend vmspace /dev/sdd1`)

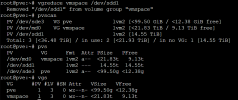

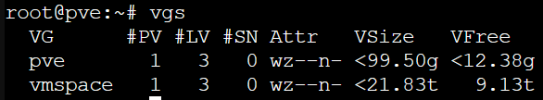

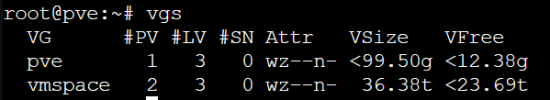

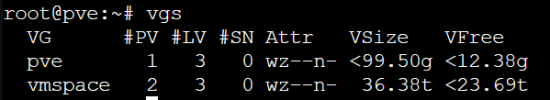

6. Checked with `vgs`:

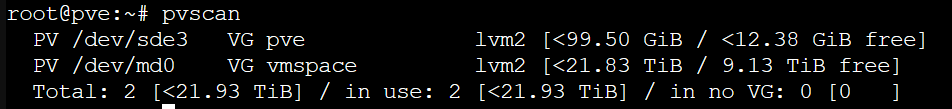

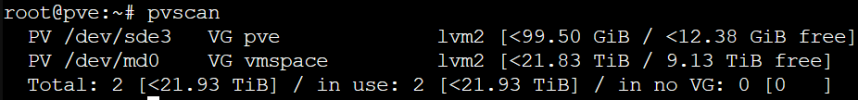

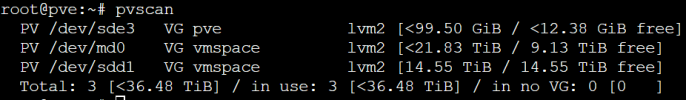

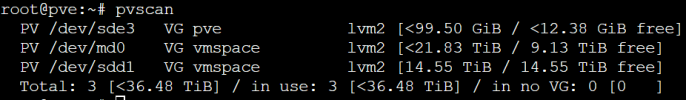

7. Ran `pvscan`:

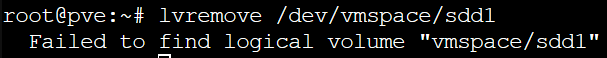

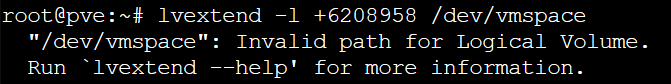

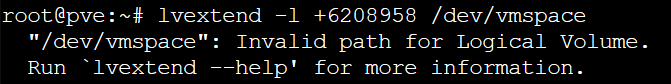

8. Then I tried to extend the logical volume with `lvextend`, which resulted in the following error:

Thanks for any input on how to finish the process. The current `lsblk` gives the following output:
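For reference, the commands from steps 2 to 8 can be collected into one script. This is a minimal dry-run sketch: the `run` helper is a hypothetical wrapper that only prints each command, so running the script touches no disks. The device name `/dev/sdd`, the partition `/dev/sdd1` and the VG name `vmspace` come from the steps above; the LV path `/dev/vmspace/LV_NAME` and the `lvextend -l +100%FREE` arguments are assumptions, because the exact `lvextend` call isn't shown.

```shell
#!/bin/sh
# Dry-run sketch of the commands from steps 2 to 8.
# run() only prints each command, so nothing touches the disks.
# To execute for real you would replace the echo with "$@",
# run as root, and double-check every device name first.
set -eu

NEW_DISK=/dev/sdd          # new 16 TB HDD (device name from the post)
NEW_PART="${NEW_DISK}1"    # partition created with fdisk in step 2
VG=vmspace                 # existing volume group (name from the post)
LV="/dev/$VG/LV_NAME"      # HYPOTHETICAL: the LV name is not given in the post

# HYPOTHETICAL helper: print the command instead of executing it.
run() {
    echo "+ $*"
}

main() {
    run fdisk -c "$NEW_DISK"          # step 2: interactive partitioning
    run pvcreate "$NEW_PART"          # step 3: initialise the partition as an LVM PV
    run pvs                           # step 4: list PVs and the VG each belongs to
    run vgextend "$VG" "$NEW_PART"    # step 5: add the new PV to the VG
    run vgs                           # step 6: show VG size and free space
    run pvscan                        # step 7: scan all disks for PVs
    # step 8: HYPOTHETICAL arguments, the actual ones are not shown.
    # -l +100%FREE asks lvextend to grow the LV by all free extents in the VG.
    run lvextend -l +100%FREE "$LV"
}

main
```

Note that all of these commands work at the LVM level. Assuming `vmspace` currently sits on `/dev/md0`, `vgextend` adds `/dev/sdd1` to it as a second PV next to `/dev/md0`; the md RAID 5 array itself is left unchanged.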