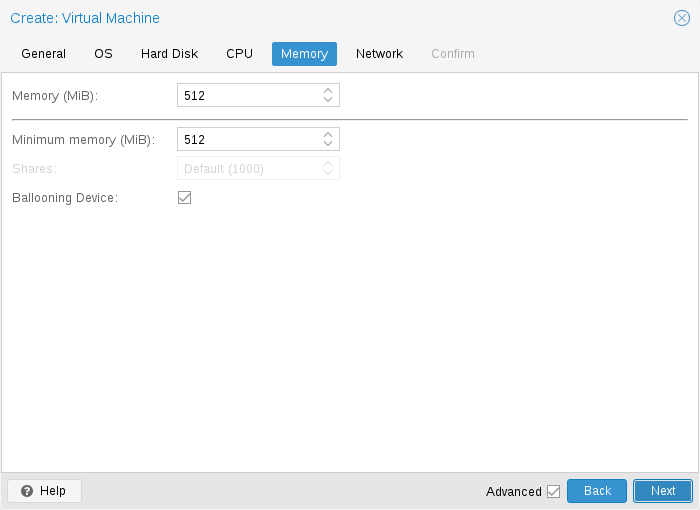

I have an Ubuntu 18.04 LTS VM running on PVE 7, to which I assigned 32 GB of RAM with ballooning enabled. The VM does batch processing: it uses more than 20 GB of RAM for a few minutes, then drops back to almost nothing (less than 500 MB). That's why ballooning is enabled.

The strange part is that RAM sometimes goes missing: the total amount of RAM the VM sees drops well below 32 GB while the VM is running. The lowest I have seen was 14 GB. At that point `htop` shows only 14 GB total, `free -h` shows only 14 GB total, and `/proc/meminfo` shows only 14 GB for `MemTotal`.
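In case someone wants to reproduce this, here is a minimal sketch of how the drop could be logged from inside the guest. It only reads `MemTotal` from `/proc/meminfo` at a fixed interval; the function names (`parse_meminfo`, `mem_total_gib`, `watch`) and the 60-second interval are placeholders I made up for illustration, not part of any tool.

```python
import time


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a {field: value} dict.

    Values are the integers as reported (kB for most fields).
    """
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # skip lines without a "name: value" shape
        parts = rest.split()
        if parts and parts[0].isdigit():
            values[name.strip()] = int(parts[0])
    return values


def mem_total_gib(text):
    """Return MemTotal in GiB (MemTotal is reported in kB)."""
    return parse_meminfo(text)["MemTotal"] / (1024 ** 2)


def watch(interval_s=60, path="/proc/meminfo"):
    """Print a timestamped MemTotal reading every interval_s seconds."""
    while True:
        with open(path) as f:
            total = mem_total_gib(f.read())
        stamp = time.strftime("%Y-%m-%d %H:%M:%S")
        print(f"{stamp} MemTotal={total:.1f} GiB", flush=True)
        time.sleep(interval_s)
```

Calling `watch()` in a terminal (or redirecting its output to a file) should make it possible to line up the moment `MemTotal` shrinks with the batch jobs.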

My first guess was that the ramdisk I use might be causing this, but limiting the ramdisk to 1 GB did not improve things.

Also, the RAM is still gone even if I reboot from within the VM. If I shut the VM down from Proxmox and start it again, the full RAM is back.

Any idea what might cause this?
