Hello!

I'm trying to integrate PVE with a Keycloak server via OIDC. The catch is that Keycloak runs as a VM on the same cluster and sits in an EVPN/VXLAN VNI/subnet.

Even though the anycast GW is attached to a VRF, traffic originating from the management plane seems to leave through the directly attached interface, although that VRF should keep it isolated.
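For reference, here is a minimal sketch of how Linux picks which routing tables to consult once VRFs are involved, assuming the default rule set (`local` at priority 0, the `l3mdev` rule at 1000, `main` at 32766, `default` at 32767). Only flows whose socket or ingress interface is tied to a VRF device get steered into that VRF's table; traffic the PVE host itself originates on the management plane, if not VRF-bound, resolves against `main`. The VRF name `vrf_evpnzone` and table id `1001` are hypothetical placeholders.

```python
from typing import Optional

# Hypothetical mapping: VRF device -> routing table id (what `ip vrf show` would list).
VRF_TABLES = {"vrf_evpnzone": 1001}


def rule_walk(bound_vrf: Optional[str]) -> list[str]:
    """Return the tables consulted, in rule-priority order, for a flow.

    Models the default rules: 0 -> local, 1000 -> l3mdev, 32766 -> main,
    32767 -> default. The l3mdev rule only matches flows tied to a VRF device.
    """
    tables = ["local"]
    if bound_vrf is not None:
        # l3mdev rule: the lookup goes to the VRF table. Assumes the VRF table
        # ends with an unreachable default (a common recommendation), so the
        # lookup stops here instead of falling through to main.
        tables.append(str(VRF_TABLES[bound_vrf]))
        return tables
    # Not VRF-bound (e.g. management-plane traffic): fall through to main/default.
    tables += ["main", "default"]
    return tables


print(rule_walk(None))            # management-plane flow
print(rule_walk("vrf_evpnzone"))  # flow bound to the EVPN VRF
```

Under this model, VRF isolation only constrains traffic that is already inside the VRF; whether host-originated traffic stays out of the VNet depends entirely on what the `main` table contains.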

Am I missing anything here?

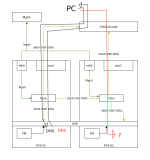

Topology:

PVE management <-- VLAN --> switch <-- VLAN --> vFW <-- BGP --> PVE exit nodes

Instead of going all the way through the peering point, the PVE node takes a shortcut via the local interface.
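One way this shortcut can happen is plain longest-prefix matching: if a route for the VNet subnet ends up in a table the management plane consults, it beats the default route toward the vFW. A toy sketch, assuming a hypothetical `main` table where `10.10.0.0/24` stands in for the Keycloak subnet, `192.0.2.1` for the vFW, and `vmbr0`/`vnet1` for the interfaces:

```python
import ipaddress

# Hypothetical main-table contents on a PVE node (prefix, next hop).
MAIN_TABLE = [
    ("0.0.0.0/0", "via 192.0.2.1 dev vmbr0"),    # default route toward the vFW
    ("10.10.0.0/24", "dev vnet1 (connected)"),   # VNet subnet, if it landed in main
]


def lookup(dst, table):
    """Return the next hop of the longest matching prefix, or None."""
    addr = ipaddress.ip_address(dst)
    best = None
    for prefix, nexthop in table:
        net = ipaddress.ip_network(prefix)
        # A more specific (longer) prefix always wins over a shorter one.
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, nexthop)
    return None if best is None else best[1]


print(lookup("10.10.0.5", MAIN_TABLE))       # picks the connected VNet route
print(lookup("10.10.0.5", MAIN_TABLE[:1]))   # without it, goes via the vFW
```

On a real node, `ip route get <keycloak-ip>` shows which route the kernel actually selects for host-originated traffic.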

tcpdump shows the "gateway" trying to reach the web servers.
