Hello everyone,

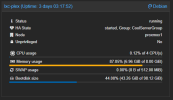

I'm currently running Plex in a Docker container inside an LXC container. The cluster is backed by Ceph storage. The issue I'm encountering is that the LXC container sometimes runs out of memory. The same thing happens with a few of my other LXC containers.

The container has a memory limit of 8gb but after a while it gets closer and closer to this limit until it eventually runs out. When this happens I can't even connect to the container anymore and have to force stop it.

Today, when I wanted to investigate the issue a bit further, I noticed my Plex LXC was filling up again. So I logged in and started stopping all processes.

I first manually stopped all containers, and then also stopped docker and containerd using systemctl stop.

The strange thing is that even after that, both Proxmox and htop still reported 7gb/8gb used.

What is weird though is that none of the processes in htop show any significant memory usage.

The command free -m also shows that about 7gb is used:

Code:

root@lxc-plex:~/dockercomposers/plexplox# free -m

total used free shared buff/cache available

Mem: 8192 7129 769 0 292 1062

Swap: 0 0 0

Next I ran `ps aux` to find out the memory usage per process:

Code:

root@lxc-plex:~/dockercomposers/plexplox# ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

root 1 0.0 0.0 168800 8320 ? Ss Mar13 0:26 /sbin/init

root 43 0.0 0.2 74308 20608 ? Ss Mar13 0:02 /lib/systemd/systemd-journald

systemd+ 82 0.0 0.0 17996 3840 ? Ss Mar13 0:00 /lib/systemd/systemd-networkd

root 114 0.0 0.0 3600 640 ? Ss Mar13 0:00 /usr/sbin/cron -f

message+ 115 0.0 0.0 9296 2176 ? Ss Mar13 0:02 /usr/bin/dbus-daemon --system --address=systemd: --nofork --nopidfile --systemd-activation --syslog-only

root 119 0.0 0.0 17164 2688 ? Ss Mar13 0:00 /lib/systemd/systemd-logind

root 124 0.0 0.0 2516 640 pts/0 Ss+ Mar13 0:00 /sbin/agetty -o -p -- \u --noclear --keep-baud - 115200,38400,9600 linux

root 125 0.0 0.0 6120 1152 pts/1 Ss Mar13 0:00 /bin/login -p --

root 126 0.0 0.0 2516 512 pts/2 Ss+ Mar13 0:00 /sbin/agetty -o -p -- \u --noclear - linux

root 132 0.0 0.0 15412 1920 ? Ss Mar13 0:00 sshd: /usr/sbin/sshd -D [listener] 0 of 10-100 startups

root 287 0.0 0.0 42652 788 ? Ss Mar13 0:00 /usr/lib/postfix/sbin/master -w

postfix 289 0.0 0.0 43088 896 ? S Mar13 0:00 qmgr -l -t unix -u

root 3367 0.0 0.0 6632 3712 pts/1 S Mar13 0:00 -bash

postfix 519708 0.0 0.0 43052 6400 ? S 18:09 0:00 pickup -l -t unix -u -c

root 519711 0.0 0.0 8088 4096 pts/1 R+ 18:25 0:00 ps aux

`ps aux` again suggests that there's barely any memory usage.
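To put a number on "barely any", the RSS column can be added up. The following is a minimal sketch, assuming Python 3 is available inside the container; the function name `total_rss_kb` is made up for illustration and the input is just the text that `ps aux` prints.

```python
def total_rss_kb(ps_output: str) -> int:
    """Sum the RSS column (in kB) of `ps aux` output given as text."""
    total = 0
    # The first line is the header row (USER PID %CPU %MEM VSZ RSS ...).
    for line in ps_output.strip().splitlines()[1:]:
        # Split into at most 11 fields so a COMMAND with spaces stays intact.
        fields = line.split(None, 10)
        # RSS is the sixth column; skip anything that does not parse.
        if len(fields) >= 6 and fields[5].isdigit():
            total += int(fields[5])
    return total
```

Applied to the listing above, the RSS values add up to 58388 kB, roughly 57 MiB, against the roughly 7129 MiB that `free -m` reports as used. RSS counts shared pages once per process, so this is an approximation, but the gap is far larger than that could explain.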

Another interesting one is `cat /proc/meminfo`:

Code:

root@lxc-plex:~/dockercomposers/plexplox# cat /proc/meminfo

MemTotal: 8388608 kB

MemFree: 788216 kB

MemAvailable: 1087576 kB

Buffers: 0 kB

Cached: 299360 kB

SwapCached: 0 kB

Active: 6515140 kB

Inactive: 444628 kB

Active(anon): 6337184 kB

Inactive(anon): 323328 kB

Active(file): 177956 kB

Inactive(file): 121300 kB

Unevictable: 0 kB

Mlocked: 221280 kB

SwapTotal: 0 kB

SwapFree: 0 kB

Zswap: 0 kB

Zswapped: 0 kB

Dirty: 0 kB

Writeback: 0 kB

AnonPages: 6660408 kB

Mapped: 0 kB

Shmem: 104 kB

KReclaimable: 787484 kB

Slab: 0 kB

SReclaimable: 0 kB

SUnreclaim: 0 kB

KernelStack: 20336 kB

PageTables: 41200 kB

SecPageTables: 0 kB

NFS_Unstable: 0 kB

Bounce: 0 kB

WritebackTmp: 0 kB

CommitLimit: 16314548 kB

Committed_AS: 16080536 kB

VmallocTotal: 34359738367 kB

VmallocUsed: 253936 kB

VmallocChunk: 0 kB

Percpu: 4448 kB

HardwareCorrupted: 0 kB

AnonHugePages: 0 kB

ShmemHugePages: 0 kB

ShmemPmdMapped: 0 kB

FileHugePages: 0 kB

FilePmdMapped: 0 kB

Unaccepted: 0 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB

Hugetlb: 0 kB

DirectMap4k: 372040 kB

DirectMap2M: 15124480 kB

DirectMap1G: 17825792 kB

This lists about 6.5gb and 6.3gb respectively for Active and Active(anon).
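The mismatch between these counters and the process list can be expressed as a single number. This is a rough sketch, assuming Python 3.9 or newer; `parse_meminfo` and `unexplained_anon_kb` are hypothetical helper names, and the RSS total has to be supplied from the `ps aux` output.

```python
def parse_meminfo(text: str) -> dict[str, int]:
    """Map each /proc/meminfo field name to its integer value.

    Most fields are in kB; the HugePages_* counters are plain counts.
    """
    values: dict[str, int] = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        # Keep only well-formed "Name: <number> [kB]" lines.
        if sep and parts and parts[0].isdigit():
            values[name.strip()] = int(parts[0])
    return values


def unexplained_anon_kb(meminfo: dict[str, int], rss_total_kb: int) -> int:
    """AnonPages minus the summed RSS of all visible processes (kB).

    RSS also includes file-backed pages, so this is only a rough
    lower bound on anonymous memory that no listed process accounts for.
    """
    return meminfo["AnonPages"] - rss_total_kb
```

With `AnonPages` at 6660408 kB and an RSS total of 58388 kB, the difference is 6602020 kB, so about 6.3 GiB of anonymous memory is charged to the container without any listed process holding it.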

Even with all this information I have no clue what is using the memory inside this LXC container.
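One more place that might be worth checking is the container's cgroup accounting. The config uses `lxc.cgroup2` keys, so this sketch assumes cgroup v2 and assumes the container sees its own cgroup at `/sys/fs/cgroup/memory.stat`; that path and both function names are assumptions for illustration, not something verified on this setup.

```python
# Byte-valued counters from the cgroup v2 memory.stat file; the file also
# holds event counters (pgfault, ...) that would drown these out if sorted
# together with them.
BYTE_COUNTERS = (
    "anon", "file", "shmem", "sock", "slab",
    "kernel_stack", "pagetables", "percpu",
)


def parse_memory_stat(text: str) -> dict[str, int]:
    """Parse "key value" lines from a cgroup v2 memory.stat file."""
    stats: dict[str, int] = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            stats[parts[0]] = int(parts[1])
    return stats


def top_byte_counters(stats: dict[str, int], top: int = 5) -> list[tuple[str, int]]:
    """Return the largest byte-valued counters, biggest first."""
    present = [(key, stats[key]) for key in BYTE_COUNTERS if key in stats]
    return sorted(present, key=lambda item: item[1], reverse=True)[:top]
```

Feeding it the contents of the file, for example via `pathlib.Path("/sys/fs/cgroup/memory.stat").read_text()`, would show whether the charge sits under `anon`, `sock`, `slab`, or something else, which narrows down where to look next.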

One vague idea I have is that it might have something to do with me mapping the Intel N100 chip to the container so that Plex can do hardware transcoding, but again, this might be something that's completely unrelated.

Here's my LXC config `/etc/pve/lxc/201.conf`:

Code:

root@proxmox1:/etc/pve/lxc# cat 201.conf

arch: amd64

cores: 4

features: nesting=1

hostname: lxc-plex

memory: 8192

mp0: /mnt/lxc_shares/Plex/,mp=/mnt/Plex,shared=1

net0: name=eth0,bridge=vmbr0,gw=10.88.20.254,hwaddr=8E:48:71:B7:12:98,ip=10.88.21.201/23,type=veth

onboot: 1

ostype: debian

rootfs: ReplicatedPool_2:vm-201-disk-0,size=100G

swap: 512

lxc.cgroup2.devices.allow: c 226:0 rwm

lxc.cgroup2.devices.allow: c 226:128 rwm

lxc.mount.entry: /dev/dri dev/dri none bind,optional,create=dir

I know I could just restart the container and have things resolved again but I think I have a beautiful scenario now for debugging. Let's hope I can get some input here to figure out what's going wrong.

Note: I also crossposted this issue here:

https://discuss.linuxcontainers.org...-90-of-memory-with-all-processed-killed/19389
