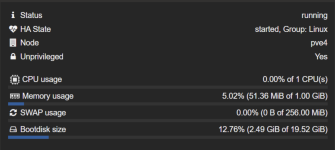

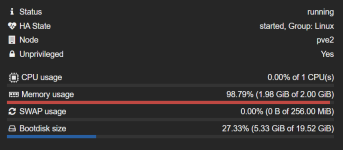

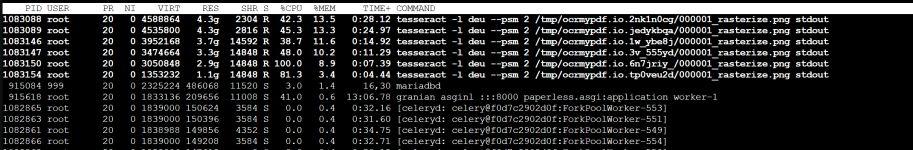

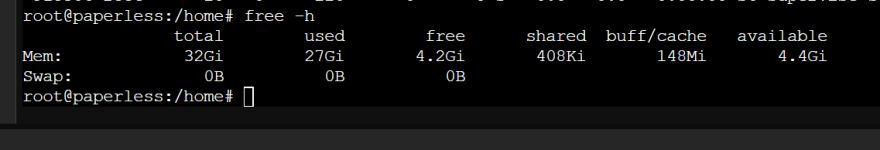

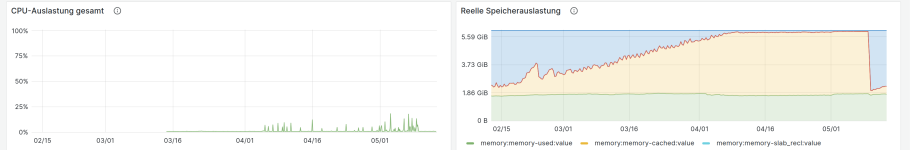

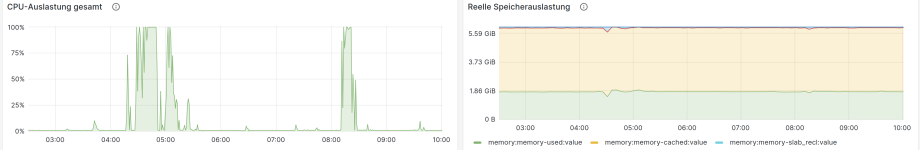

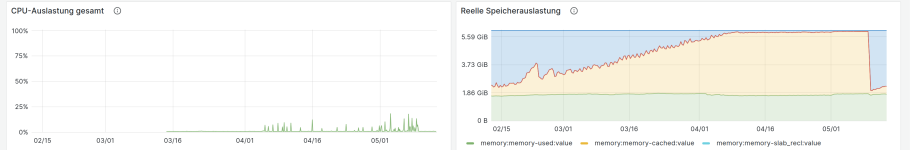

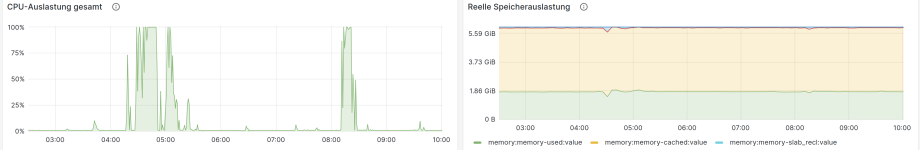

With some of our LXC containers, we have observed that the container's cached memory increases constantly. Once almost all of the memory is occupied, CPU utilisation in the container sporadically rises to 100% and the container becomes unreachable. Restarting the container (and thus clearing the cache) solves the problem.

I was also able to reproduce the problem artificially by lowering the container's soft memory limit at runtime with:

echo 5113695436 > /sys/fs/cgroup/lxc/123/memory.high

echo 5113695436 > /sys/fs/cgroup/lxc/123/ns/memory.high

Here, too, CPU usage within the container rose to 100%, which is why I assume this is the usual behaviour when a container's memory is full. However, most of the memory in the container is occupied only by cached memory, which should actually be released automatically, shouldn't it?
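To check how much of the container's memory is really page cache and whether the `memory.high` limit is being hit, something like the following could be run on the host. This is only a sketch: the function name `cg_mem_summary` is made up for illustration, and the default path assumes the cgroup v2 directory of container 123 used in the commands above. The files it reads (`memory.current`, `memory.high`, `memory.stat`, `memory.events`) are standard cgroup v2 memory controller files.

```shell
# Summarise a cgroup v2 memory controller directory.
# The default directory is an assumption taken from the commands above (CT 123).
cg_mem_summary() {
    cg_dir="${1:-/sys/fs/cgroup/lxc/123}"
    # Single-value files; memory.high contains "max" when no soft limit is set.
    echo "current: $(cat "$cg_dir/memory.current")"
    echo "high: $(cat "$cg_dir/memory.high")"
    # memory.stat holds "key value" pairs in bytes:
    # anon = process memory, file = page cache (split into active/inactive).
    awk '$1 == "anon" || $1 == "file" || $1 == "active_file" || $1 == "inactive_file" { print $1 ": " $2 }' "$cg_dir/memory.stat"
    # The "high" counter in memory.events counts how often the cgroup was
    # throttled and pushed into reclaim because memory.high was exceeded.
    awk '$1 == "high" { print "high_events: " $2 }' "$cg_dir/memory.events"
}
```

Calling `cg_mem_summary` without arguments reads the directory for container 123; another cgroup directory can be passed as the first argument. If `high_events` keeps climbing while `file` stays large, that would at least suggest the container is spending its time in reclaim rather than in application work.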

The hypervisor does not have a swap partition, as we use ZFS and did not want to use swap. Could this be related?
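One way to see the swap situation from both sides is to compare the host's active swap devices with the swap allowance of the container's cgroup. The sketch below is an assumption-laden illustration: `swap_report` is a hypothetical helper name, and it assumes the container's cgroup lives at `/sys/fs/cgroup/lxc/123` and exposes the cgroup v2 files `memory.swap.max` and `memory.swap.current`.

```shell
# Compare host swap devices with the swap settings of a cgroup v2 directory.
# Both default paths are assumptions; the cgroup path is taken from the post.
swap_report() {
    swaps_file="${1:-/proc/swaps}"
    cg_dir="${2:-/sys/fs/cgroup/lxc/123}"
    # /proc/swaps has one header line, then one line per active swap device.
    devices=$(awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "$swaps_file")
    echo "host_swap_devices: $devices"
    # Swap allowance and current swap usage of the cgroup, in bytes.
    echo "swap_max: $(cat "$cg_dir/memory.swap.max")"
    echo "swap_current: $(cat "$cg_dir/memory.swap.current")"
}
```

If `host_swap_devices` is 0, the `swap: 512` setting in the container config has nothing to swap to, so under memory pressure the kernel can only reclaim page cache and cannot move anonymous memory out.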

Do you have any ideas what else I could try?

# cat /etc/pve/lxc/123.conf

arch: amd64

cpulimit: 4

features: mknod=1,nesting=1

hostname: 123.xyz.com

memory: 6144

mp0: freenas:123/vm-123-disk-0.raw,mp=/backups,size=1000G

nameserver: 127.0.0.1

net0: name=eth0,bridge=vmbr0,firewall=1,gw=111.111.111.111,gw6=xxxx:xxxx:xxxx::x,hwaddr=BC:24:11A:CD:64,ip=111.111.111.111/24,ip6=xxxx:xxxx:xxxx/56,type=veth

onboot: 1

ostype: centos

rootfs: local-zfs2:subvol-123-disk-0,size=100G

swap: 512

unprivileged: 1

# pveversion -v

proxmox-ve: 8.4.0 (running kernel: 6.8.12-7-pve)

pve-manager: 8.4.1 (running version: 8.4.1/2a5fa54a8503f96d)

proxmox-kernel-helper: 8.1.1

proxmox-kernel-6.8: 6.8.12-9

proxmox-kernel-6.8.12-9-pve-signed: 6.8.12-9

proxmox-kernel-6.8.12-8-pve-signed: 6.8.12-8

proxmox-kernel-6.8.12-7-pve-signed: 6.8.12-7

proxmox-kernel-6.8.12-4-pve-signed: 6.8.12-4

ceph-fuse: 17.2.7-pve3

corosync: 3.1.9-pve1

criu: 3.17.1-2+deb12u1

glusterfs-client: 10.3-5

ifupdown2: 3.2.0-1+pmx11

ksm-control-daemon: 1.5-1

libjs-extjs: 7.0.0-5

libknet1: 1.30-pve2

libproxmox-acme-perl: 1.6.0

libproxmox-backup-qemu0: 1.5.1

libproxmox-rs-perl: 0.3.5

libpve-access-control: 8.2.2

libpve-apiclient-perl: 3.3.2

libpve-cluster-api-perl: 8.1.0

libpve-cluster-perl: 8.1.0

libpve-common-perl: 8.3.1

libpve-guest-common-perl: 5.2.2

libpve-http-server-perl: 5.2.2

libpve-network-perl: 0.11.2

libpve-rs-perl: 0.9.4

libpve-storage-perl: 8.3.6

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 6.0.0-1

lxcfs: 6.0.0-pve2

novnc-pve: 1.6.0-2

proxmox-backup-client: 3.4.0-1

proxmox-backup-file-restore: 3.4.0-1

proxmox-firewall: 0.7.1

proxmox-kernel-helper: 8.1.1

proxmox-mail-forward: 0.3.2

proxmox-mini-journalreader: 1.4.0

proxmox-offline-mirror-helper: 0.6.7

proxmox-widget-toolkit: 4.3.10

pve-cluster: 8.1.0

pve-container: 5.2.6

pve-docs: 8.4.0

pve-edk2-firmware: 4.2025.02-3

pve-esxi-import-tools: 0.7.3

pve-firewall: 5.1.1

pve-firmware: 3.15-3

pve-ha-manager: 4.0.7

pve-i18n: 3.4.2

pve-qemu-kvm: 9.2.0-5

pve-xtermjs: 5.5.0-2

qemu-server: 8.3.12

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.2.7-pve2