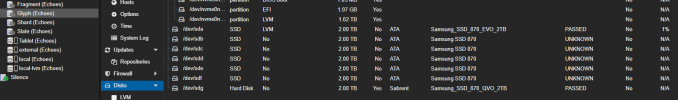

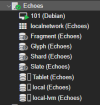

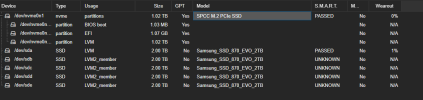

My LVM-thin drives are showing a grey question mark. This happened with a single drive before, and I thought the drive had failed. It was a recent purchase, so I returned it and also got a SATA card, in case there was an issue with the SATA ports on the motherboard. All the drives except the NVMe were created through the web GUI as LVM-thin, each as a single device; they are not pooled together and they are not in RAID. The drives were working and the SMART values were good, with 0% to 1% wearout, and two of them are brand new. I'm trying to figure out how to troubleshoot and fix this. Below is the output of some commands I've seen used for similar issues, and I've uploaded a chunk of the system log from roughly around when the issue started.

Code:

root@Echoes:~# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

sda 8:0 0 1.8T 0 disk

├─Tablet-Tablet_tmeta 252:8 0 15.9G 0 lvm

│ └─Tablet-Tablet-tpool 252:10 0 1.8T 0 lvm

│ ├─Tablet-Tablet 252:23 0 1.8T 1 lvm

│ └─Tablet-vm--101--disk--0 252:24 0 1.9T 0 lvm

└─Tablet-Tablet_tdata 252:9 0 1.8T 0 lvm

└─Tablet-Tablet-tpool 252:10 0 1.8T 0 lvm

├─Tablet-Tablet 252:23 0 1.8T 1 lvm

└─Tablet-vm--101--disk--0 252:24 0 1.9T 0 lvm

sdb 8:16 0 1.8T 0 disk

├─Slate-Slate_tmeta 252:11 0 15.9G 0 lvm

│ └─Slate-Slate-tpool 252:13 0 1.8T 0 lvm

│ ├─Slate-Slate 252:25 0 1.8T 1 lvm

│ └─Slate-vm--101--disk--0 252:26 0 1.9T 0 lvm

└─Slate-Slate_tdata 252:12 0 1.8T 0 lvm

└─Slate-Slate-tpool 252:13 0 1.8T 0 lvm

├─Slate-Slate 252:25 0 1.8T 1 lvm

└─Slate-vm--101--disk--0 252:26 0 1.9T 0 lvm

sdc 8:32 0 1.8T 0 disk

├─Shard-Shard_tmeta 252:14 0 15.9G 0 lvm

│ └─Shard-Shard-tpool 252:16 0 1.8T 0 lvm

│ ├─Shard-Shard 252:27 0 1.8T 1 lvm

│ └─Shard-vm--101--disk--0 252:28 0 1.9T 0 lvm

└─Shard-Shard_tdata 252:15 0 1.8T 0 lvm

└─Shard-Shard-tpool 252:16 0 1.8T 0 lvm

├─Shard-Shard 252:27 0 1.8T 1 lvm

└─Shard-vm--101--disk--0 252:28 0 1.9T 0 lvm

sdd 8:48 0 1.8T 0 disk

├─Fragment-Fragment_tmeta 252:17 0 15.9G 0 lvm

│ └─Fragment-Fragment-tpool 252:19 0 1.8T 0 lvm

│ ├─Fragment-Fragment 252:29 0 1.8T 1 lvm

│ └─Fragment-vm--101--disk--0 252:30 0 1.9T 0 lvm

└─Fragment-Fragment_tdata 252:18 0 1.8T 0 lvm

└─Fragment-Fragment-tpool 252:19 0 1.8T 0 lvm

├─Fragment-Fragment 252:29 0 1.8T 1 lvm

└─Fragment-vm--101--disk--0 252:30 0 1.9T 0 lvm

sde 8:64 0 1.8T 0 disk

├─Glyph-Glyph_tmeta 252:20 0 15.9G 0 lvm

│ └─Glyph-Glyph-tpool 252:22 0 1.8T 0 lvm

│ ├─Glyph-Glyph 252:31 0 1.8T 1 lvm

│ └─Glyph-vm--101--disk--0 252:32 0 1.9T 0 lvm

└─Glyph-Glyph_tdata 252:21 0 1.8T 0 lvm

└─Glyph-Glyph-tpool 252:22 0 1.8T 0 lvm

├─Glyph-Glyph 252:31 0 1.8T 1 lvm

└─Glyph-vm--101--disk--0 252:32 0 1.9T 0 lvm

nvme0n1 259:0 0 953.9G 0 disk

├─nvme0n1p1 259:1 0 1007K 0 part

├─nvme0n1p2 259:2 0 1G 0 part

└─nvme0n1p3 259:3 0 952.9G 0 part

├─pve-swap 252:0 0 8G 0 lvm [SWAP]

├─pve-root 252:1 0 96G 0 lvm /

├─pve-data_tmeta 252:2 0 8.3G 0 lvm

│ └─pve-data-tpool 252:4 0 816.2G 0 lvm

│ ├─pve-data 252:5 0 816.2G 1 lvm

│ ├─pve-vm--101--disk--0 252:6 0 4M 0 lvm

│ └─pve-vm--101--disk--1 252:7 0 500G 0 lvm

└─pve-data_tdata 252:3 0 816.2G 0 lvm

└─pve-data-tpool 252:4 0 816.2G 0 lvm

├─pve-data 252:5 0 816.2G 1 lvm

├─pve-vm--101--disk--0 252:6 0 4M 0 lvm

└─pve-vm--101--disk--1 252:7 0 500G 0 lvm

Code:

root@Echoes:~# pvesm status

Command failed with status code 5.

command '/sbin/vgscan --ignorelockingfailure --mknodes' failed: exit code 5

no such logical volume Shard/Shard

no such logical volume Slate/Slate

no such logical volume Fragment/Fragment

no such logical volume Glyph/Glyph

Name Type Status Total Used Available %

Fragment lvmthin inactive 0 0 0 0.00%

Glyph lvmthin inactive 0 0 0 0.00%

Shard lvmthin inactive 0 0 0 0.00%

Slate lvmthin inactive 0 0 0 0.00%

Tablet lvmthin active 1919827968 959913 1918868054 0.05%

local dir active 98497780 6711384 86736848 6.81%

local-lvm lvmthin active 855855104 67441382 788413721 7.88%
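To pull just the affected storages out of output like the above, a small filter may help. This is only a sketch, assuming the whitespace-separated column layout shown here (Name, Type, Status, ...); `inactive_storages` is a made-up helper name, not a Proxmox command.

```shell
# Print the name of every storage whose Status column reads "inactive".
# Expects `pvesm status`-style text on stdin. The header row and any
# warning lines are skipped because their third field is something else.
inactive_storages() {
    awk '$3 == "inactive" { print $1 }'
}

# Possible use on the node (assumption, not run here):
#   pvesm status 2>/dev/null | inactive_storages
```

With the output above, this would be expected to list Fragment, Glyph, Shard and Slate, which matches the four "no such logical volume" messages.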

Code:

root@Echoes:~# pvscan

PV /dev/nvme0n1p3 VG pve lvm2 [<952.87 GiB / 16.00 GiB free]

PV /dev/sda VG Tablet lvm2 [<1.82 TiB / 376.00 MiB free]

Total: 2 [<2.75 TiB] / in use: 2 [<2.75 TiB] / in no VG: 0 [0 ]
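One thing that stands out: `lsblk` still lists device-mapper nodes for Slate, Shard, Fragment and Glyph, while `pvscan` only reports the PVs on `/dev/nvme0n1p3` and `/dev/sda`. A rough way to list the VG names that device-mapper still has mapped, so they can be compared against `pvscan`, is sketched below. It assumes the usual LVM naming where the device-mapper name is `VG-LV` with any hyphen inside a name doubled; `dm_vg_names` is a hypothetical helper name.

```shell
# Print the VG name behind every device of type "lvm".
# Expects two columns on stdin (NAME TYPE), as produced by
# `lsblk -rno NAME,TYPE`. A doubled hyphen is part of a name; the first
# single hyphen separates the VG from the LV.
dm_vg_names() {
    awk '$2 == "lvm" {
        n = $1
        gsub(/--/, "|", n)      # protect escaped hyphens ("|" is not valid in LVM names)
        split(n, part, "-")     # part[1] is the VG name
        gsub(/\|/, "-", part[1])
        print part[1]
    }' | LC_ALL=C sort -u
}

# Possible use on the node (assumption, not run here):
#   lsblk -rno NAME,TYPE | dm_vg_names
```

Any VG name printed by this but missing from `pvscan` is one that is still mapped in the kernel even though LVM no longer finds a PV for it.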

Code:

root@Echoes:~# cat /etc/pve/storage.cfg

dir: local

path /var/lib/vz

content vztmpl,iso,backup

lvmthin: local-lvm

thinpool data

vgname pve

content images,rootdir

lvmthin: Tablet

thinpool Tablet

vgname Tablet

content images,rootdir

nodes Echoes

lvmthin: Slate

thinpool Slate

vgname Slate

content rootdir,images

nodes Echoes

lvmthin: Shard

thinpool Shard

vgname Shard

content images,rootdir

nodes Echoes

lvmthin: Fragment

thinpool Fragment

vgname Fragment

content images,rootdir

nodes Echoes

lvmthin: Glyph

thinpool Glyph

vgname Glyph

content images,rootdir

nodes Echoes

Code:

root@Echoes:~# vgscan

Found volume group "pve" using metadata type lvm2

Found volume group "Tablet" using metadata type lvm2
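The `vgscan` result can be cross-checked against `storage.cfg` to see exactly which configured volume groups LVM no longer finds. The following is a minimal sketch, assuming each storage entry has a `vgname <name>` line as in the config above; `missing_vgs` is a hypothetical helper name.

```shell
# Print every vgname mentioned in a storage.cfg-style file that is NOT
# in the list of VG names supplied on stdin (one name per line).
missing_vgs() {
    cfg=$1
    found=$(cat)    # VG names that LVM currently reports
    awk '$1 == "vgname" { print $2 }' "$cfg" | LC_ALL=C sort -u |
    while read -r vg; do
        # -x: whole-line match, -F: fixed string, -q: quiet
        printf '%s\n' "$found" | grep -qxF -- "$vg" || printf '%s\n' "$vg"
    done
}

# Possible use on the node (assumption, not run here):
#   vgs --noheadings -o vg_name | awk '{ print $1 }' | missing_vgs /etc/pve/storage.cfg
```

Given the config and `vgscan` output in this post, the expected result would be Fragment, Glyph, Shard and Slate.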

Code:

root@Echoes:~# lvscan

ACTIVE '/dev/pve/data' [<816.21 GiB] inherit

ACTIVE '/dev/pve/swap' [8.00 GiB] inherit

ACTIVE '/dev/pve/root' [96.00 GiB] inherit

ACTIVE '/dev/pve/vm-101-disk-0' [4.00 MiB] inherit

ACTIVE '/dev/pve/vm-101-disk-1' [500.00 GiB] inherit

ACTIVE '/dev/Tablet/Tablet' [<1.79 TiB] inherit

ACTIVE '/dev/Tablet/vm-101-disk-0' [<1.86 TiB] inherit

Code:

root@Echoes:~# pvs

PV VG Fmt Attr PSize PFree

/dev/nvme0n1p3 pve lvm2 a-- <952.87g 16.00g

/dev/sda Tablet lvm2 a-- <1.82t 376.00m

Code:

root@Echoes:~# vgs

VG #PV #LV #SN Attr VSize VFree

Tablet 1 2 0 wz--n- <1.82t 376.00m

pve 1 5 0 wz--n- <952.87g 16.00g

Code:

root@Echoes:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

Tablet Tablet twi-aotz-- <1.79t 0.05 0.15

vm-101-disk-0 Tablet Vwi-aotz-- <1.86t Tablet 0.05

data pve twi-aotz-- <816.21g 7.88 0.49

root pve -wi-ao---- 96.00g

swap pve -wi-ao---- 8.00g

vm-101-disk-0 pve Vwi-aotz-- 4.00m data 14.06

vm-101-disk-1 pve Vwi-aotz-- 500.00g data 12.86