Hi.

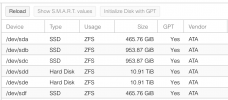

I was not able to SSH into one of the nodes in our cluster, so I hard-reset the server. After the restart, my ZFS pool "ssdpool" shows a "?" in the web UI and I cannot activate it. The pool is a mirror of two SSDs, and I can see both disks with fdisk -l.

I tried the steps from this thread: https://forum.proxmox.com/threads/proxmox-not-mounting-zfs-correctly-at-boot.65724/ but they did not solve the problem. What can I do? Thanks for helping!
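In case it helps with narrowing this down, here is a rough sketch of read-only checks that could be run on the node. I have not included any output here. The pool name "ssdpool" is from above; the /dev/disk/by-id path and the zfs-import-cache, zfs-import-scan and zfs-mount unit names are the usual ZFS-on-Linux defaults and are an assumption about this system. The helper names run and zfs_diag are just made up for this script. None of these commands import or change the pool.

```shell
#!/bin/sh
# Read-only ZFS diagnostics (sketch). Nothing here imports or modifies a pool.

run() {
    # Print the command, then run it; report a failure but keep going.
    echo "### $*"
    "$@" 2>&1 || echo "(exit code $?)"
}

zfs_diag() {
    pool="$1"
    if ! command -v zpool >/dev/null 2>&1; then
        echo "zpool not found; is zfsutils-linux installed?"
        return 0
    fi
    # Is the pool currently imported, and in what state?
    run zpool status "$pool"
    # List pools that are visible but not imported (no pool name = list only).
    run zpool import
    # Same listing, but scanning the stable by-id device names (assumed path).
    run zpool import -d /dev/disk/by-id
    # State of the import/mount units at boot (assumed default unit names).
    run systemctl status --no-pager zfs-import-cache.service zfs-import-scan.service zfs-mount.service
    # Log lines from the cache-based import during the current boot.
    run journalctl -b --no-pager -u zfs-import-cache.service
}

zfs_diag "${1:-ssdpool}"
```

Saved as a file and run as root, this prints each command followed by its output, which can then be pasted into the thread.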

Greetings

Khanh Nguyen

Code:

proxmox-ve: 5.4-2 (running kernel: 4.15.18-18-pve)

pve-manager: 5.4-11 (running version: 5.4-11/6df3d8d0)

pve-kernel-4.15: 5.4-6

pve-kernel-4.15.18-18-pve: 4.15.18-44

pve-kernel-4.15.18-7-pve: 4.15.18-27

pve-kernel-4.15.17-1-pve: 4.15.17-9

corosync: 2.4.4-pve1

criu: 2.11.1-1~bpo90

glusterfs-client: 3.8.8-1

ksm-control-daemon: 1.2-2

libjs-extjs: 6.0.1-2

libpve-access-control: 5.1-11

libpve-apiclient-perl: 2.0-5

libpve-common-perl: 5.0-53

libpve-guest-common-perl: 2.0-20

libpve-http-server-perl: 2.0-13

libpve-storage-perl: 5.0-44

libqb0: 1.0.3-1~bpo9

lvm2: 2.02.168-pve6

lxc-pve: 3.1.0-3

lxcfs: 3.0.3-pve1

novnc-pve: 1.0.0-3

proxmox-widget-toolkit: 1.0-28

pve-cluster: 5.0-37

pve-container: 2.0-39

pve-docs: 5.4-2

pve-edk2-firmware: 1.20190312-1

pve-firewall: 3.0-22

pve-firmware: 2.0-6

pve-ha-manager: 2.0-9

pve-i18n: 1.1-4

pve-libspice-server1: 0.14.1-2

pve-qemu-kvm: 3.0.1-4

pve-xtermjs: 3.12.0-1

qemu-server: 5.0-54

smartmontools: 6.5+svn4324-1

spiceterm: 3.0-5

vncterm: 1.5-3

zfsutils-linux: 0.7.13-pve1~bpo2
