Hello,

My local-zfs storage (2 SSDs in a mirror) is full and I don't understand why. I have only one VM with 3 disks configured, and the total size of my virtual disks doesn't match the used space. I don't have any snapshots.

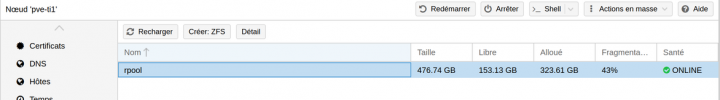

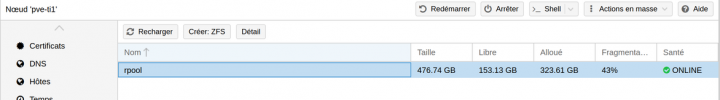

ZFS volume stat on the PVE GUI:

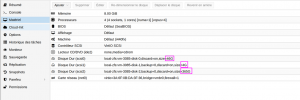

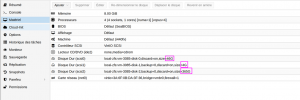


Code:

```
root@pve-ti1:~# zfs list
NAME                        USED  AVAIL  REFER  MOUNTPOINT
rpool                       430G   627M   104K  /rpool
rpool/ROOT                 1.48G   627M    96K  /rpool/ROOT
rpool/ROOT/pve-1           1.48G   627M  1.48G  /
rpool/data                  428G   627M    96K  /rpool/data
rpool/data/vm-3085-disk-0  47.4G  46.0G  2.07G  -
rpool/data/vm-3085-disk-1  4.13G  4.51G   239M  -
rpool/data/vm-3085-disk-2   376G  79.5G   298G  -
root@pve-ti1:~# zfs list -t snapshot
no datasets available
```

These are the virtual disks configured for the only VM hosted on that PVE server. Their total maximum size should never exceed 46+4+365, so 415GB without snapshots.
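As a quick sanity check of that arithmetic, here is a small shell sketch that totals the configured disk sizes and the USED column of the three zvols from the `zfs list` output. The variable names and the `total` helper are only illustrative, and it assumes both sets of numbers are in the same unit.

```shell
#!/bin/sh
# Force a predictable decimal separator for awk's printf.
LC_ALL=C
export LC_ALL

# Configured virtual disk sizes, as stated in the post.
configured="46 4 365"
# USED column of the three vm-3085-disk-* zvols, copied from `zfs list`.
used="47.4 4.13 376"

# total: hypothetical helper that sums a space-separated list of numbers.
total() {
    echo "$1" | awk '{ for (i = 1; i <= NF; i++) s += $i } END { printf "%.2f\n", s }'
}

configured_total=$(total "$configured")
used_total=$(total "$used")
overhead=$(awk -v u="$used_total" -v c="$configured_total" 'BEGIN { printf "%.2f\n", u - c }')

echo "configured: ${configured_total}G"
echo "used:       ${used_total}G"
echo "difference: ${overhead}G"
```

With the figures above, the zvols account for about 427.5G against the 415G configured, which is roughly 12.5G more than expected and matches the 428G shown for `rpool/data`.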

In the VM:

Code:

```
root@pbs:/data# LANG=C df -h
Filesystem            Size  Used Avail Use% Mounted on
[removed useless lines]
/dev/mapper/pbs-root   39G  2.6G   35G   7% /
/dev/sdb              359G  202G  139G  60% /data
```

I tried to fstrim my file systems inside the VM, but this didn't free up anything.

Any idea?

Thanks!
