I am totally surprised by this result, and I am hoping we can find some kind of explanation for it.

To eliminate my CEPH cluster as the cause of the VM slowdown, I cloned a Windows XP machine onto Proxmox local SATA HDD storage. I then ran CrystalDiskMark on both identical machines, one on local storage and the other on RBD storage. Both VMs have writeback cache enabled on their virtual disks.

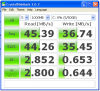

VM Running on CEPH RBD storage

=======================

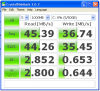

VM Running on Proxmox Local Storage

===========================

Am I seeing correctly that the VM running on local storage is a little slower than the one on RBD storage? I triple-checked that I did not mix up the two images. I was expecting the local storage benchmark to come in well above the RBD benchmark. I am almost sure I have done something wrong somewhere. Any ideas?
