Hi,

After migrating from QNAP to Proxmox, one of my VMs, a TVHeadend server for IPTV, no longer receives IPTV streams...

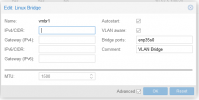

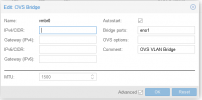

I have a standard "Linux Bridge" which is "VLAN aware", and all VMs with different VLANs or VLAN trunks work normally except this one, which uses two network cards.

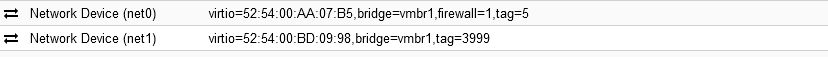

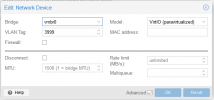

The first network card, ens3, is for the private network and the second one, ens4, is for IPTV.

If I run the ip address command on the old VM (on QNAP) and on the new VM (on Proxmox), both return the same output, so there is no issue with the VM configuration...

The switch configuration is OK and exactly the same as on the QNAP LAN port. The network card (Mellanox CX354) used on Proxmox was previously used on the QNAP and works normally...

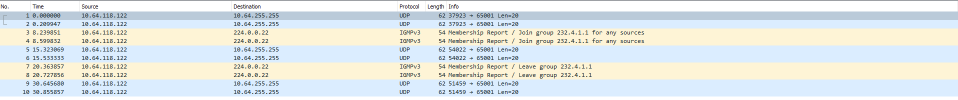

Does Proxmox filter multicast on the bridge? Any idea what to check and why IPTV multicast doesn't work?
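One thing that could be checked on the Proxmox host is whether IGMP snooping is enabled on the bridge, because a Linux bridge with snooping on and no IGMP querier on the VLAN can stop forwarding group traffic to a port. This is a minimal sketch, not a confirmed diagnosis: it only reads the standard bridge settings from sysfs. The bridge name `vmbr0` is an assumption (it is not stated in this post), and `read_bridge_multicast` and `snooping_without_querier` are hypothetical helper names.

```python
from pathlib import Path

# Bridge settings that influence multicast forwarding on a Linux bridge.
BRIDGE_KEYS = ("multicast_snooping", "multicast_querier")


def read_bridge_multicast(bridge="vmbr0", sysfs_root="/sys/class/net"):
    """Return {setting: int or None} for the bridge (None if unreadable).

    "vmbr0" is an assumed bridge name; replace it with the real one.
    """
    settings = {}
    for key in BRIDGE_KEYS:
        path = Path(sysfs_root) / bridge / "bridge" / key
        try:
            settings[key] = int(path.read_text().strip())
        except (OSError, ValueError):
            settings[key] = None
    return settings


def snooping_without_querier(settings):
    """True when snooping is on and the bridge itself is not a querier."""
    return (settings.get("multicast_snooping") == 1
            and settings.get("multicast_querier") == 0)


if __name__ == "__main__":
    state = read_bridge_multicast()
    print(state)
    if snooping_without_querier(state):
        print("Snooping is on and the bridge is not a querier; "
              "worth testing with snooping off or a querier on the VLAN.")
```

If the script reports snooping enabled without a querier, that is only a hint about where to look next; the switch or upstream router may already provide a querier for the IPTV VLAN.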

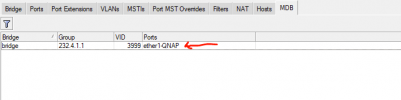

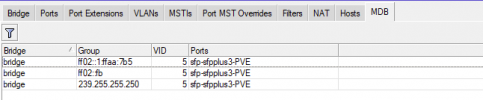

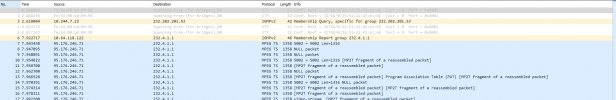

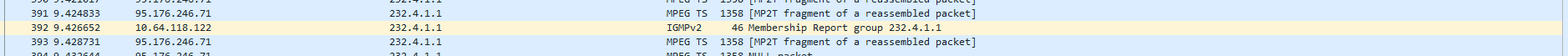

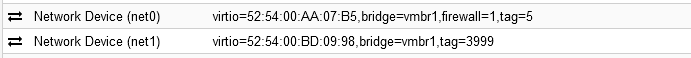

Proxmox VM:

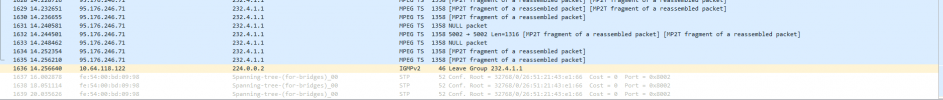

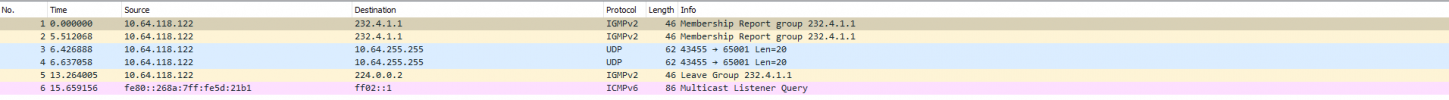

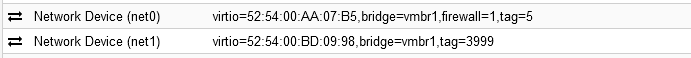

QNAP VM:

Proxmox VM:

Code:

    :~$ ip address
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
        link/ether 52:54:00:aa:07:b5 brd ff:ff:ff:ff:ff:ff
        inet 10.60.5.40/24 brd 10.60.5.255 scope global dynamic ens3
           valid_lft 1678sec preferred_lft 1678sec
        inet6 fe80::5054:ff:feaa:7b5/64 scope link
           valid_lft forever preferred_lft forever
    3: ens4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
        link/ether 52:54:00:bd:09:98 brd ff:ff:ff:ff:ff:ff
        inet 10.64.118.122/16 brd 10.64.255.255 scope global ens4
           valid_lft forever preferred_lft forever
        inet6 fe80::5054:ff:febd:998/64 scope link
           valid_lft forever preferred_lft forever

QNAP VM:

Code:

    :~$ ip address
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
        link/ether 52:54:00:aa:07:b5 brd ff:ff:ff:ff:ff:ff
        inet 10.60.5.40/24 brd 10.60.5.255 scope global dynamic ens3
           valid_lft 3573sec preferred_lft 3573sec
        inet6 fe80::5054:ff:feaa:7b5/64 scope link
           valid_lft forever preferred_lft forever
    3: ens4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
        link/ether 52:54:00:bd:09:98 brd ff:ff:ff:ff:ff:ff
        inet 10.64.118.122/16 brd 10.64.255.255 scope global ens4
           valid_lft forever preferred_lft forever
        inet6 fe80::5054:ff:febd:998/64 scope link
           valid_lft forever preferred_lft forever
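
The claim that both VMs return the same `ip address` output can be checked mechanically instead of by eye. The sketch below compares two pasted outputs while ignoring the `valid_lft`/`preferred_lft` counters, which differ between runs (1678sec vs 3573sec above). The helper names `normalize` and `diff_outputs` are hypothetical, and the sample strings are the ens3 lines from the two listings.

```python
import re

# Lifetime counters tick down between runs, so they are masked before comparing.
_LIFETIME = re.compile(r"(valid_lft|preferred_lft) \S+")


def normalize(ip_output):
    """Return the non-empty lines of `ip address` output with lifetimes masked."""
    lines = []
    for raw in ip_output.splitlines():
        line = " ".join(raw.split())  # collapse indentation and repeated spaces
        if not line or line.endswith("ip address"):
            continue  # skip blank lines and the shell prompt line
        lines.append(_LIFETIME.sub(r"\1 *", line))
    return lines


def diff_outputs(a, b):
    """Return the lines that appear in only one of the two outputs."""
    left, right = normalize(a), normalize(b)
    only_left = [line for line in left if line not in right]
    only_right = [line for line in right if line not in left]
    return only_left + only_right


if __name__ == "__main__":
    proxmox = (
        "inet 10.60.5.40/24 brd 10.60.5.255 scope global dynamic ens3\n"
        "   valid_lft 1678sec preferred_lft 1678sec\n"
    )
    qnap = (
        "inet 10.60.5.40/24 brd 10.60.5.255 scope global dynamic ens3\n"
        "   valid_lft 3573sec preferred_lft 3573sec\n"
    )
    print(diff_outputs(proxmox, qnap))
```

An empty result only shows that the guest-side interface configuration matches; it says nothing about whether the host bridge or the switch delivers the multicast traffic to ens4.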