Unfortunately my patched kernel 2.6.2-19 did not start today.

Same here: Lexar NM790 4 TB

The patch didn't apply with the patch file, so I changed it directly in the code file.

Kernel 2.6.2-19 seems to work now.

Lexar NM790 2TB

- Thread starter senkis

FYI, the current build of the 6.5 kernel, i.e.

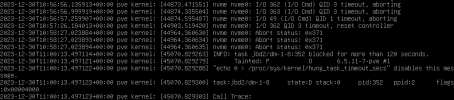

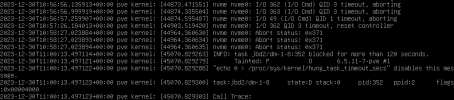

proxmox-kernel-6.5.11-1-pve, finally includes a backport of https://git.kernel.org/pub/scm/linu.../?id=6cc834ba62998c65c42d0c63499bdd35067151ecFixed daily crashes on Proxmox host with MSI PRO Z790-P WIFI and Lexar NM790 4TB SSD by updating BIOS. Original BIOS version was early 2023. Now running Linux 6.5.3-1-pve, host stable for 7 days without further modifications. Previous setup with old BIOS and kernels 6.2.16-19 or 6.5.3 experienced daily crashes.This seems to be the reason why my Proxmox host crashes about every 1-2 days, both with kernel versions 6.2.16-19 and 6.5.3.

Always good to update BIOS for stability with latest hardware.

So the good news is that with Proxmox version 8.1-1, I can confirm that the drives are now accessible without problems, including in the installer, which is great.

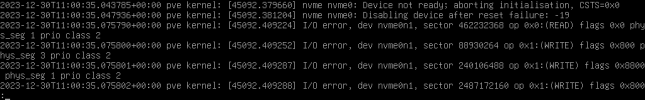

The bad news is that I periodically see this error on all systems with a Lexar NM790. No crashes or other problems, but it is quite concerning, isn't it?

nvme 0000:01:00.0: AMD-Vi: Event logged [IO_PAGE_FAULT domain=0x000f address=0xfee00000 flags=0x0000]

I don't get this error with Proxmox 8.1.3.

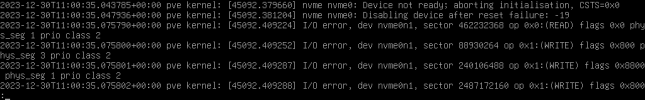

But in the last few days I had two system freezes with nothing written to the logs; the display showed the following error. I don't know whether this is specific to the NM790, because I found similar reports for other NVMe drives.

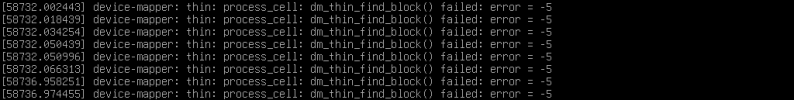

Code:

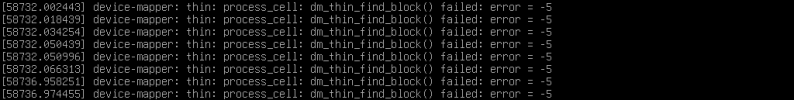

[...] device-mapper: thin: process_cell: dm_thin_find_block() failed: error = -5

Updated kernel to Linux pve 6.5.11-7-pve

Removed the kernel command line workaround (nvme_core.default_ps_max_latency_us=0), which worked for me with an older kernel, and a similar issue is back.

It takes less than a day for the issue to appear, similar to the way it was before I used the workaround with the older kernel.
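
When testing with and without the workaround, it can help to confirm whether the parameter is actually present on the running kernel's command line. A minimal sketch, assuming a POSIX shell; `cmdline_has_param` is a hypothetical helper name, not a Proxmox tool:

```shell
# cmdline_has_param CMDLINE PARAM
# Succeeds if PARAM is one of the space-separated words in CMDLINE.
# Hypothetical helper for illustration only.
cmdline_has_param() {
    case " $1 " in
        *" $2 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Check the command line the running kernel was booted with.
if cmdline_has_param "$(cat /proc/cmdline)" "nvme_core.default_ps_max_latency_us=0"; then
    echo "workaround is on the kernel command line"
else
    echo "workaround is NOT on the kernel command line"
fi
```

The value currently in effect should also be readable from /sys/module/nvme_core/parameters/default_ps_max_latency_us; as far as I know, 0 means the drive's autonomous power state transitions (APST) are disabled.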

I have the same issues with 6.5.11-7-pve.

Which kernel version and workaround do you use to have a stable system?

@jofland, vmlinuz-6.2.16-15-pve

I am going to try the workaround with the new kernel.

OK, then let us know if it is stable.

@fiona the workaround (nvme_core.default_ps_max_latency_us=0) seems to work for the new kernel (Linux pve 6.5.11-7-pve) too. Uptime 2.5 days

I would like to try this too. I will report the result here after a few days.

Just to be clear, would this be the correct entry in my /etc/default/grub file?

Code:

GRUB_CMDLINE_LINUX="nvme_core.default_ps_max_latency_us=0"

Me too. Uptime now 4 days.

Where would one put `nvme_core.default_ps_max_latency_us=0` if using systemd-boot?

Same place as all other kernel parameters (on a single line in /etc/kernel/cmdline): https://pve.proxmox.com/pve-docs/pve-admin-guide.html#sysboot_edit_kernel_cmdline
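
For the systemd-boot case, the edit to /etc/kernel/cmdline could look roughly like the sketch below. It assumes GNU sed and grep (as shipped with Debian/Proxmox); `add_kernel_param` is a hypothetical helper name, not a Proxmox tool. It appends the parameter to the end of the first line and does nothing if the parameter is already there, so the file stays a single line:

```shell
# add_kernel_param FILE PARAM
# Append PARAM to the single-line kernel cmdline FILE unless it is
# already present. Hypothetical helper; assumes GNU sed (-i) and grep.
add_kernel_param() {
    file=$1
    param=$2
    # -F: fixed string, -w: must match as a whole word, -q: quiet
    if grep -qwF -- "$param" "$file"; then
        return 0
    fi
    # Append to the end of line 1 so everything stays on one line.
    sed -i "1s|\$| $param|" "$file"
}

# Example (run as root, not executed here):
#   add_kernel_param /etc/kernel/cmdline nvme_core.default_ps_max_latency_us=0
```

According to the admin guide linked above, the change then has to be applied with `proxmox-boot-tool refresh` followed by a reboot. On GRUB-booted systems the parameter goes into /etc/default/grub instead and is applied with `update-grub`.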