Hello!

Second time faced a problem, after about a month of stable work.

kvm 100% CPU used

load averages > 70

Server: Depo Storm 3000G2

2xIntel(R) Xeon(R) CPU E5606 @ 2.13GHz/48Gb DDR3-ECC REG Triple channel/Adaptec ASR-5805 512Mb + BBU

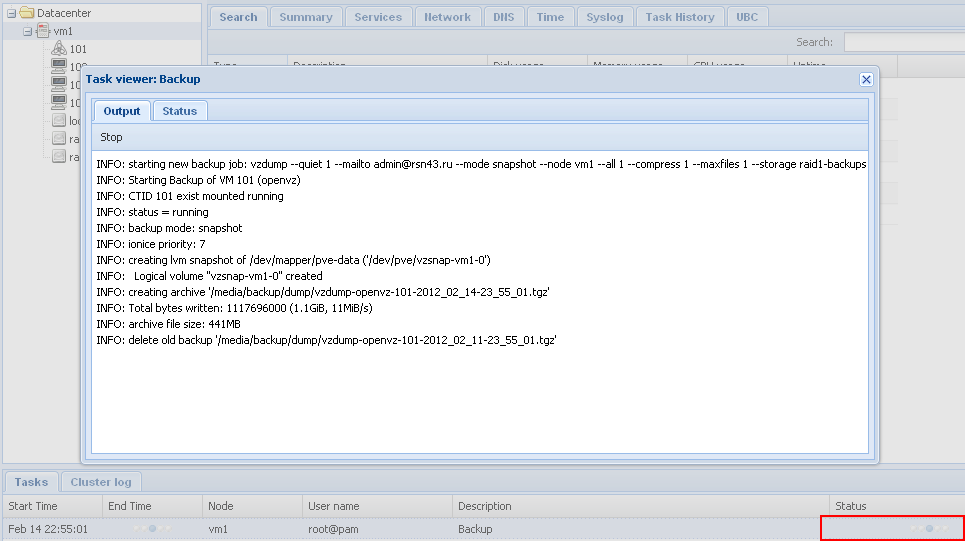

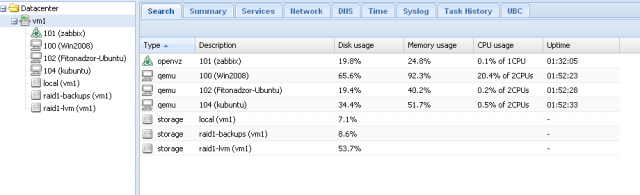

In the picture is the list of virtual machines in working order.

With 100% load no server access through http/ssh etc. "top" only works directly from the server.

/var/log/messages

In which direction to look? What's wrong?

Second time faced a problem, after about a month of stable work.

kvm 100% CPU used

load averages > 70

Server: Depo Storm 3000G2

2xIntel(R) Xeon(R) CPU E5606 @ 2.13GHz/48Gb DDR3-ECC REG Triple channel/Adaptec ASR-5805 512Mb + BBU

Code:

Linux vm1 2.6.32-6-pve #1 SMP Mon Dec 19 10:15:23 CET 2011 x86_64 GNU/Linux

Code:

root@vm1:/var/log# pveversion -v

pve-manager: 2.0-18 (pve-manager/2.0/16283a5a)

running kernel: 2.6.32-6-pve

proxmox-ve-2.6.32: 2.0-55

pve-kernel-2.6.32-6-pve: 2.6.32-55

lvm2: 2.02.88-2pve1

clvm: 2.02.88-2pve1

corosync-pve: 1.4.1-1

openais-pve: 1.1.4-1

libqb: 0.6.0-1

redhat-cluster-pve: 3.1.8-3

pve-cluster: 1.0-17

qemu-server: 2.0-13

pve-firmware: 1.0-14

libpve-common-perl: 1.0-11

libpve-access-control: 1.0-5

libpve-storage-perl: 2.0-9

vncterm: 1.0-2

vzctl: 3.0.29-3pve8

vzprocps: 2.0.11-2

vzquota: 3.0.12-3

pve-qemu-kvm: 1.0-1

ksm-control-daemon: 1.1-1In the picture is the list of virtual machines in working order.

With 100% load no server access through http/ssh etc. "top" only works directly from the server.

/var/log/messages

Code:

Feb 8 00:00:16 vm1 kernel: kjournald D ffff88063a2102c0 0 1330 2 0 0x00000000

Feb 8 00:00:16 vm1 kernel: ffff88062d399d40 0000000000000046 0000000000000000 ffff88063fc0c180

Feb 8 00:00:16 vm1 kernel: ffff88062d399cc0 ffffffff811145c6 ffff88063a2102c0 000000016a0899e2

Feb 8 00:00:16 vm1 kernel: ffff88063a210888 ffff88062d399fd8 000000000000f788 ffff88063a210888

Feb 8 00:00:16 vm1 kernel: Call Trace:

Feb 8 00:00:16 vm1 kernel: [<ffffffff811145c6>] ? __perf_event_task_sched_out+0x36/0x50

Feb 8 00:00:16 vm1 kernel: [<ffffffffa009a689>] journal_commit_transaction+0x169/0x1130 [jbd]

Feb 8 00:00:16 vm1 kernel: [<ffffffff81092a10>] ? autoremove_wake_function+0x0/0x40

Feb 8 00:00:16 vm1 kernel: [<ffffffff8107c34b>] ? try_to_del_timer_sync+0x7b/0xe0

Feb 8 00:00:16 vm1 kernel: [<ffffffffa009dfc8>] kjournald+0xe8/0x250 [jbd]

Feb 8 00:00:16 vm1 kernel: [<ffffffff81092a10>] ? autoremove_wake_function+0x0/0x40

Feb 8 00:00:16 vm1 kernel: [<ffffffffa009dee0>] ? kjournald+0x0/0x250 [jbd]

Feb 8 00:00:16 vm1 kernel: [<ffffffff81092436>] kthread+0x96/0xa0

Feb 8 00:00:16 vm1 kernel: [<ffffffff8100c2ca>] child_rip+0xa/0x20

Feb 8 00:00:16 vm1 kernel: [<ffffffff810923a0>] ? kthread+0x0/0xa0

Feb 8 00:00:16 vm1 kernel: [<ffffffff8100c2c0>] ? child_rip+0x0/0x20

Feb 8 00:00:16 vm1 kernel: kvm D ffff880639a8e600 0 2040 1 0 0x00000000

Feb 8 00:00:16 vm1 kernel: ffff88062d5d3b08 0000000000000082 ffff880639a8eed8 ffff880600000002

Feb 8 00:00:16 vm1 kernel: 0000000002000000 0000000000000000 ffffffff00000000 ffff88000004c288

Feb 8 00:00:16 vm1 kernel: ffff880639a8ebc8 ffff88062d5d3fd8 000000000000f788 ffff880639a8ebc8

Feb 8 00:00:16 vm1 kernel: Call Trace:

Feb 8 00:00:16 vm1 kernel: [<ffffffff81092cfe>] ? prepare_to_wait+0x4e/0x80

Feb 8 00:00:16 vm1 kernel: [<ffffffffa0098c6a>] start_this_handle+0x21a/0x460 [jbd]

Feb 8 00:00:16 vm1 kernel: [<ffffffff81092a10>] ? autoremove_wake_function+0x0/0x40

Feb 8 00:00:16 vm1 kernel: [<ffffffff8119d8d0>] ? pollwake+0x0/0x60

Feb 8 00:00:16 vm1 kernel: [<ffffffffa0099075>] journal_start+0xb5/0x100 [jbd]

Feb 8 00:00:16 vm1 kernel: [<ffffffffa00c4364>] ? ext3_xattr_get+0xf4/0x2b0 [ext3]

Feb 8 00:00:16 vm1 kernel: [<ffffffffa00bdf01>] ext3_journal_start_sb+0x31/0x60 [ext3]

Feb 8 00:00:16 vm1 kernel: [<ffffffffa00b4efd>] ext3_dirty_inode+0x3d/0xa0 [ext3]

Feb 8 00:00:16 vm1 kernel: [<ffffffff811b206b>] __mark_inode_dirty+0x3b/0x160

Feb 8 00:00:16 vm1 kernel: [<ffffffff811a247a>] file_update_time+0x10a/0x1a0

Feb 8 00:00:16 vm1 kernel: [<ffffffff8116d95a>] ? alloc_pages_vma+0x9a/0x150

Feb 8 00:00:16 vm1 kernel: [<ffffffff811202c0>] __generic_file_aio_write+0x210/0x470

Feb 8 00:00:16 vm1 kernel: [<ffffffff8119d8d0>] ? pollwake+0x0/0x60

Feb 8 00:00:16 vm1 kernel: [<ffffffff8119cf39>] ? set_fd_set+0x49/0x60

Feb 8 00:00:16 vm1 kernel: [<ffffffff8112058f>] generic_file_aio_write+0x6f/0xe0

Feb 8 00:00:16 vm1 kernel: [<ffffffff81120520>] ? generic_file_aio_write+0x0/0xe0

Feb 8 00:00:16 vm1 kernel: [<ffffffff811cf304>] aio_rw_vect_retry+0x84/0x200

Feb 8 00:00:16 vm1 kernel: [<ffffffff811d12e4>] aio_run_iocb+0x64/0x170

Feb 8 00:00:16 vm1 kernel: [<ffffffff811d1efd>] do_io_submit+0x2dd/0x910

Feb 8 00:00:16 vm1 kernel: [<ffffffff811d2540>] sys_io_submit+0x10/0x20

Feb 8 00:00:16 vm1 kernel: [<ffffffff8100b242>] system_call_fastpath+0x16/0x1b

Feb 8 00:00:16 vm1 kernel: kvm D ffff88063cfbe540 0 814933 1 0 0x00000000

Feb 8 00:00:16 vm1 kernel: ffff880557973b08 0000000000000082 ffff880557973b38 ffffffff81056453

Feb 8 00:00:16 vm1 kernel: ffff8806584de430 ffff880c3a80f400 0000000000000001 ffff8806584de430

Feb 8 00:00:16 vm1 kernel: ffff88063cfbeb08 ffff880557973fd8 000000000000f788 ffff88063cfbeb08

Feb 8 00:00:16 vm1 kernel: Call Trace:

Feb 8 00:00:16 vm1 kernel: [<ffffffff81056453>] ? perf_event_task_sched_out+0x33/0x80

Feb 8 00:00:16 vm1 kernel: [<ffffffff81092cfe>] ? prepare_to_wait+0x4e/0x80

Feb 8 00:00:16 vm1 kernel: [<ffffffffa0098c6a>] start_this_handle+0x21a/0x460 [jbd]

Feb 8 00:00:16 vm1 kernel: [<ffffffff81092a10>] ? autoremove_wake_function+0x0/0x40

Feb 8 00:00:16 vm1 kernel: [<ffffffffa0099075>] journal_start+0xb5/0x100 [jbd]

Feb 8 00:00:16 vm1 kernel: [<ffffffffa00c4364>] ? ext3_xattr_get+0xf4/0x2b0 [ext3]

Feb 8 00:00:16 vm1 kernel: [<ffffffffa00bdf01>] ext3_journal_start_sb+0x31/0x60 [ext3]

Feb 8 00:00:16 vm1 kernel: [<ffffffffa00b4efd>] ext3_dirty_inode+0x3d/0xa0 [ext3]

Feb 8 00:00:16 vm1 kernel: [<ffffffff811b206b>] __mark_inode_dirty+0x3b/0x160

Feb 8 00:00:16 vm1 kernel: [<ffffffff811a247a>] file_update_time+0x10a/0x1a0

Feb 8 00:00:16 vm1 kernel: [<ffffffff811202c0>] __generic_file_aio_write+0x210/0x470

Feb 8 00:00:16 vm1 kernel: [<ffffffff8119d8d0>] ? pollwake+0x0/0x60

Feb 8 00:00:16 vm1 kernel: [<ffffffff8119cf39>] ? set_fd_set+0x49/0x60

Feb 8 00:00:16 vm1 kernel: [<ffffffff8112058f>] generic_file_aio_write+0x6f/0xe0

Feb 8 00:00:16 vm1 kernel: [<ffffffff81120520>] ? generic_file_aio_write+0x0/0xe0

Feb 8 00:00:16 vm1 kernel: [<ffffffff811cf304>] aio_rw_vect_retry+0x84/0x200

Feb 8 00:00:16 vm1 kernel: [<ffffffff811d12e4>] aio_run_iocb+0x64/0x170

Feb 8 00:00:16 vm1 kernel: [<ffffffff811d1efd>] do_io_submit+0x2dd/0x910

Feb 8 00:00:16 vm1 kernel: [<ffffffff811d2540>] sys_io_submit+0x10/0x20

Feb 8 00:00:16 vm1 kernel: [<ffffffff8100b242>] system_call_fastpath+0x16/0x1b

Feb 8 00:00:16 vm1 kernel: kvm D ffff88091d9fe940 0 24962 1 0 0x00000000

Feb 8 00:00:16 vm1 kernel: ffff8809ddf95b08 0000000000000086 0000000000000000 ffffffffa0374cc0

Feb 8 00:00:16 vm1 kernel: 0000000e00000002 0000000000000000 ffff88076312e480 000000016a0906dc

Feb 8 00:00:16 vm1 kernel: ffff88091d9fef08 ffff8809ddf95fd8 000000000000f788 ffff88091d9fef08

Feb 8 00:00:16 vm1 kernel: Call Trace:

Feb 8 00:00:16 vm1 kernel: [<ffffffffa0374cc0>] ? kvm_set_ioapic_irq+0x0/0x50 [kvm]

Feb 8 00:00:16 vm1 kernel: [<ffffffffa0098c6a>] start_this_handle+0x21a/0x460 [jbd]

Feb 8 00:00:16 vm1 kernel: [<ffffffff81092a10>] ? autoremove_wake_function+0x0/0x40

Feb 8 00:00:16 vm1 kernel: [<ffffffffa0099075>] journal_start+0xb5/0x100 [jbd]

Feb 8 00:00:16 vm1 kernel: [<ffffffffa00c4364>] ? ext3_xattr_get+0xf4/0x2b0 [ext3]

Feb 8 00:00:16 vm1 kernel: [<ffffffffa00bdf01>] ext3_journal_start_sb+0x31/0x60 [ext3]

Feb 8 00:00:16 vm1 kernel: [<ffffffffa00b4efd>] ext3_dirty_inode+0x3d/0xa0 [ext3]

Feb 8 00:00:16 vm1 kernel: [<ffffffff811b206b>] __mark_inode_dirty+0x3b/0x160

Feb 8 00:00:16 vm1 kernel: [<ffffffff811a247a>] file_update_time+0x10a/0x1a0

Feb 8 00:00:16 vm1 kernel: [<ffffffff811202c0>] __generic_file_aio_write+0x210/0x470

Feb 8 00:00:16 vm1 kernel: [<ffffffff8112058f>] generic_file_aio_write+0x6f/0xe0

Feb 8 00:00:16 vm1 kernel: [<ffffffff81120520>] ? generic_file_aio_write+0x0/0xe0

Feb 8 00:00:16 vm1 kernel: [<ffffffff811cf304>] aio_rw_vect_retry+0x84/0x200

Feb 8 00:00:16 vm1 kernel: [<ffffffff811d12e4>] aio_run_iocb+0x64/0x170

Feb 8 00:00:16 vm1 kernel: [<ffffffff811d1efd>] do_io_submit+0x2dd/0x910

Feb 8 00:00:16 vm1 kernel: [<ffffffff811d2540>] sys_io_submit+0x10/0x20

Feb 8 00:00:16 vm1 kernel: [<ffffffff8100b242>] system_call_fastpath+0x16/0x1b

Feb 8 00:00:16 vm1 kernel: kvm D ffff880c3b602100 0 675078 1 0 0x00000000

Feb 8 00:00:16 vm1 kernel: ffff8809ddf81938 0000000000000082 0000000000000000 ffffffff813f4cac

Feb 8 00:00:16 vm1 kernel: ffff8809ddf818a8 ffffffff81059192 ffff8809ddf818c8 000000016a08940a

Feb 8 00:00:16 vm1 kernel: ffff880c3b6026c8 ffff8809ddf81fd8 000000000000f788 ffff880c3b6026c8In which direction to look? What's wrong?

Last edited: