If I may, I'd like to join this discussion, since I'm seeing the same or similar KSM symptoms.

I'm not sure what info you'd like me to provide, so I went ahead and included everything you already asked adamb for.

The server is an HP ProLiant SE326M1.

It hosts the following VMs:

- 17x Windows Server 2008 R2 (2 GB per VM assigned, no ballooning)

- 2x Windows Server 2012 (4 GB per VM, no ballooning)

- 1x Ubuntu (4 GB assigned, no ballooning)

All VMs are running.
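For scale, here is the total RAM assigned to the guests listed above. This is just the sum of the list (17×2 + 2×4 + 1×4), with variable names picked for illustration:

```shell
#!/usr/bin/env bash
# Total RAM (GB) assigned to the guests listed above; ballooning is off,
# so assigned memory is what each guest can actually touch.
win2008r2=$(( 17 * 2 ))   # 17 VMs x 2 GB
win2012=$(( 2 * 4 ))      # 2 VMs x 4 GB
ubuntu=$(( 1 * 4 ))       # 1 VM x 4 GB
total=$(( win2008r2 + win2012 + ubuntu ))
echo "total_assigned_gb=$total"
```

That comes to 46 GB, so the approx. 25 GB KSM used to share before the upgrade was more than half of everything assigned.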

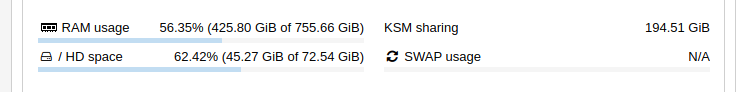

The uptime in the picture above shows when the host was upgraded to kernel 6.2.

Before the upgrade, it was running the latest PVE 7.x and KSM was sharing approx. 25 GB.

After the upgrade to 6.2, KSM only reached approx. 3 GB, and most of the time it "prefers" to sit at around 2.5 GB.

Following adamb's note about tweaking ksmtuned.conf, I made these changes:

```
KSM_NPAGES_MIN=64000000
KSM_NPAGES_MAX=1250000000
```

With this, KSM reaches 10.15 GB (as pictured above). Appending more zeroes to KSM_NPAGES_MIN/KSM_NPAGES_MAX yields diminishing returns.

Indeed, the last 2 zeroes I added only increased KSM sharing by less than 1.5 GB.
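To reason about why the MAX value might matter less than expected, here is a simplified sketch of how, as I understand the stock ksmtuned script, the two settings are used: each monitoring interval it adds KSM_NPAGES_BOOST to the current pages_to_scan when free memory is below its threshold, otherwise adds KSM_NPAGES_DECAY, then clamps the result to [MIN, MAX]. This is not the real script (the free-memory/threshold calculation is left out, and the function names are hypothetical); the BOOST of 300 and DECAY of -50 are assumed stock defaults, since only MIN and MAX were changed here:

```shell
#!/usr/bin/env bash
# Simplified sketch of ksmtuned's pages_to_scan adjustment (NOT the real script).
# MIN/MAX are the values from my ksmtuned.conf; BOOST/DECAY are assumed defaults.
KSM_NPAGES_MIN=${KSM_NPAGES_MIN:-64000000}
KSM_NPAGES_MAX=${KSM_NPAGES_MAX:-1250000000}
KSM_NPAGES_BOOST=${KSM_NPAGES_BOOST:-300}   # assumed default
KSM_NPAGES_DECAY=${KSM_NPAGES_DECAY:--50}   # assumed default

# next_npages CURRENT PRESSURE: PRESSURE=1 means free memory is below the threshold.
# Prints the pages_to_scan value for the next interval, clamped to [MIN, MAX].
next_npages() {
    local npages=$1 pressure=$2
    if [ "$pressure" -eq 1 ]; then
        npages=$(( npages + KSM_NPAGES_BOOST ))
    else
        npages=$(( npages + KSM_NPAGES_DECAY ))
    fi
    if [ "$npages" -lt "$KSM_NPAGES_MIN" ]; then
        npages=$KSM_NPAGES_MIN
    elif [ "$npages" -gt "$KSM_NPAGES_MAX" ]; then
        npages=$KSM_NPAGES_MAX
    fi
    echo "$npages"
}

# Number of consecutive boost steps needed to climb from MIN to MAX (rounded up).
intervals_to_reach_max() {
    echo $(( (KSM_NPAGES_MAX - KSM_NPAGES_MIN + KSM_NPAGES_BOOST - 1) / KSM_NPAGES_BOOST ))
}
```

Under those assumptions, climbing from MIN to MAX would take about 3.95 million consecutive boosts, which would be consistent with pages_to_scan (64753900 in the sysfs output below) sitting close to KSM_NPAGES_MIN rather than anywhere near MAX.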

Below is some other info that someone might find useful.

```
proxmox-ve: 8.0.2 (running kernel: 6.2.16-6-pve)
pve-manager: 8.0.4 (running version: 8.0.4/d258a813cfa6b390)
proxmox-kernel-helper: 8.0.3
pve-kernel-5.15: 7.4-4
pve-kernel-5.13: 7.1-9
proxmox-kernel-6.2.16-6-pve: 6.2.16-7
proxmox-kernel-6.2: 6.2.16-7
pve-kernel-5.15.108-1-pve: 5.15.108-2
pve-kernel-5.15.104-1-pve: 5.15.104-2
pve-kernel-5.15.102-1-pve: 5.15.102-1
pve-kernel-5.15.83-1-pve: 5.15.83-1
pve-kernel-5.13.19-6-pve: 5.13.19-15
pve-kernel-5.13.19-2-pve: 5.13.19-4
ceph-fuse: 16.2.11+ds-2
corosync: 3.1.7-pve3
criu: 3.17.1-2
glusterfs-client: 10.3-5
ifupdown2: 3.2.0-1+pmx3
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-3
libknet1: 1.25-pve1
libproxmox-acme-perl: 1.4.6
libproxmox-backup-qemu0: 1.4.0
libproxmox-rs-perl: 0.3.1
libpve-access-control: 8.0.4
libpve-apiclient-perl: 3.3.1
libpve-common-perl: 8.0.7
libpve-guest-common-perl: 5.0.4
libpve-http-server-perl: 5.0.4
libpve-rs-perl: 0.8.5
libpve-storage-perl: 8.0.2
libspice-server1: 0.15.1-1
lvm2: 2.03.16-2
lxc-pve: 5.0.2-4
lxcfs: 5.0.3-pve3
novnc-pve: 1.4.0-2
proxmox-backup-client: 3.0.2-1
proxmox-backup-file-restore: 3.0.2-1
proxmox-kernel-helper: 8.0.3
proxmox-mail-forward: 0.2.0
proxmox-mini-journalreader: 1.4.0
proxmox-offline-mirror-helper: 0.6.2
proxmox-widget-toolkit: 4.0.6
pve-cluster: 8.0.3
pve-container: 5.0.4
pve-docs: 8.0.4
pve-edk2-firmware: 3.20230228-4
pve-firewall: 5.0.3
pve-firmware: 3.7-1
pve-ha-manager: 4.0.2
pve-i18n: 3.0.5
pve-qemu-kvm: 8.0.2-4
pve-xtermjs: 4.16.0-3
qemu-server: 8.0.6
smartmontools: 7.3-pve1
spiceterm: 3.3.0
swtpm: 0.8.0+pve1
vncterm: 1.8.0
zfsutils-linux: 2.1.12-pve1
```

```
for i in /sys/kernel/mm/ksm/*; do echo "$i:"; cat "$i"; done
```

```
/sys/kernel/mm/ksm/full_scans:
94490
/sys/kernel/mm/ksm/max_page_sharing:
256
/sys/kernel/mm/ksm/merge_across_nodes:
1
/sys/kernel/mm/ksm/pages_shared:
269340
/sys/kernel/mm/ksm/pages_sharing:
2665058
/sys/kernel/mm/ksm/pages_to_scan:
64753900
/sys/kernel/mm/ksm/pages_unshared:
220581
/sys/kernel/mm/ksm/pages_volatile:
194130
/sys/kernel/mm/ksm/run:
1
/sys/kernel/mm/ksm/sleep_millisecs:
33
/sys/kernel/mm/ksm/stable_node_chains:
19
/sys/kernel/mm/ksm/stable_node_chains_prune_millisecs:
2000
/sys/kernel/mm/ksm/stable_node_dups:
7679
/sys/kernel/mm/ksm/use_zero_pages:
0
```
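In case it helps anyone compare numbers: per the kernel's KSM docs, pages_sharing counts how many extra sites share already-merged pages, i.e. roughly how much is saved. Multiplying it by the page size gives the saving in bytes. The helper below is a small sketch with hypothetical function names; it assumes 4 KiB pages (check with `getconf PAGESIZE`) and takes the sysfs directory as an argument so it can be pointed at a copy of the values:

```shell
#!/usr/bin/env bash
# Sketch: turn KSM sysfs counters into "RAM saved" and a sharing ratio.
# Assumes 4 KiB pages unless a page size is passed explicitly.

# ksm_saved_bytes PAGES_SHARING [PAGE_SIZE]
ksm_saved_bytes() {
    local pages=$1
    local page_size=${2:-4096}
    echo $(( pages * page_size ))
}

# bytes_to_gib BYTES: print GiB with two decimals (awk does the float math)
bytes_to_gib() {
    awk -v b="$1" 'BEGIN { printf "%.2f\n", b / (1024 * 1024 * 1024) }'
}

# ksm_report [KSM_DIR]: read pages_shared/pages_sharing and print a summary
ksm_report() {
    local dir=${1:-/sys/kernel/mm/ksm}
    local shared sharing
    shared=$(cat "$dir/pages_shared")
    sharing=$(cat "$dir/pages_sharing")
    echo "saved_gib=$(bytes_to_gib "$(ksm_saved_bytes "$sharing")")"
    # How many sharers each merged page has, on average
    awk -v a="$sharing" -v b="$shared" 'BEGIN { printf "ratio=%.1f\n", a / b }'
}
```

With the values above (pages_sharing 2665058, pages_shared 269340), this works out to about 10.17 GiB saved at roughly 9.9 sharers per merged page, in line with the ~10 GB shown in the picture.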