Hello, I have had a problem in my Proxmox cluster for some time. I have 16 nodes in the cluster, with their VMs stored on an external Ceph cluster of 6 nodes and 72 SSD OSDs. Each node is connected at 25Gb to two 100Gb core switches. I use an RBD pool: when adding the storage in Proxmox I select RBD, and in the configuration I enable KRBD to gain speed. The problem is that all VMs (mainly Windows) show 100% usage on the disks that live on this storage. If I run a test or use a VM for a long time, disk usage reaches 100% and takes a while to return to normal; sometimes just moving the mouse pushes it above 50%.

My question is whether I need to enable something else on the storage for KRBD to work properly, because the VMs (all running Windows) often show 100% disk usage even when nothing is running.

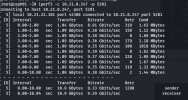

When I use the machine or run a disk speed test, writes to the storage become slow, and, as the screenshot shows, disk usage stays at 100% the whole time. Until about a month ago, the VMs with KRBD enabled were fast and stable, like a rocket. A few weeks ago something changed in the cluster, and KRBD is no longer performing well.

First test: the result is very good.

After a few seconds I get this result, with the disk at 100%.

This is only a test, but I get the same result when I use the VM for a few hours for browsing, video editing, etc.

When I use an NFS pool, for example, reads and writes are generally better and the disk does not sit at 100%; with RBD and KRBD enabled, however, I have this problem.

Thanks
