Fresh installation of two Proxmox 8.4 nodes (both on ZFS RAID1).

pve1: vmbr1: 192.168.0.200

pve2: vmbr1: 192.168.0.201

Both nodes can ping each other using both hostname and ip, time averages at 0.250 ms.

Both nodes can ssh to each other.

Both nodes can ping the outside world.

timedatecls shows exact the same output on both nodes ().

Create cluster pxc on pve1 works fine (GUI).

Using the join info from pve1 on pve2 (GUI) to join the cluster initially seems to work, but then throws "proxmox cluster permission denied invalid pve ticket 401".

GUI on pve2 is not accessible anymore from this point onwards.

I can still ssh into pve2.

journal on pve2 shows kernel timeout errors:

Subsequently, similar timeouts occur with the exact same call trace:

One of the processes where the timeouts occur is the certificate generation by pveproxy. (This snippet is after a reboot, so different pids.)

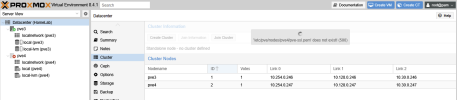

GUI on pve1:

- error for pve2 on Join Cluster: Error: unable to create directory '/etc/pve/nodes' - Permission denied.

- '/etc/pve/nodes/pve2/pve-ssl.pem' does not exist! (500)

- error:0A000086:SSL routines::certificate verify failed (596)

Journal on pve1:

pve1 always has quorum. pve2 initially has too, but fails to ouput pvecm status after processes are hung.

From what I see somehow the creation/saving of the certificates fails on pve2 after joining the cluster.

From there it seems to go south as that disables GUI access and any further communication within the cluster.

Updating to 8.4.1 (and kernel 6.8.12-10-pve) prior to creating/joining the cluster makes no difference.

Any pointers on how to get this cluster going are very welcome!

pve1: vmbr1: 192.168.0.200

pve2: vmbr1: 192.168.0.201

Both nodes can ping each other using both hostname and ip, time averages at 0.250 ms.

Both nodes can ssh to each other.

Both nodes can ping the outside world.

timedatectl shows exactly the same output on both nodes.

Create cluster pxc on pve1 works fine (GUI).

Using the join info from pve1 on pve2 (GUI) to join the cluster initially seems to work, but then throws "proxmox cluster permission denied invalid pve ticket 401".

GUI on pve2 is not accessible anymore from this point onwards.

I can still ssh into pve2.

The journal on pve2 shows kernel hung-task timeout errors:

Code:

May 05 14:19:41 pve2 kernel: INFO: task cron:1214 blocked for more than 122 seconds.

May 05 14:19:41 pve2 kernel: Tainted: P O 6.8.12-9-pve #1

May 05 14:19:41 pve2 kernel: "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

May 05 14:19:41 pve2 kernel: task:cron state:D stack:0 pid:1214 tgid:1214 ppid:1 flags:0x00000002

May 05 14:19:41 pve2 kernel: Call Trace:

May 05 14:19:41 pve2 kernel: <TASK>

May 05 14:19:41 pve2 kernel: __schedule+0x42b/0x1500

May 05 14:19:41 pve2 kernel: ? mutex_lock+0x12/0x50

May 05 14:19:41 pve2 kernel: ? rrw_exit+0x72/0x170 [zfs]

May 05 14:19:41 pve2 kernel: ? xa_load+0x87/0xf0

May 05 14:19:41 pve2 kernel: schedule+0x33/0x110

May 05 14:19:41 pve2 kernel: schedule_preempt_disabled+0x15/0x30

May 05 14:19:41 pve2 kernel: rwsem_down_read_slowpath+0x284/0x4d0

May 05 14:19:41 pve2 kernel: ? dput+0xf2/0x1b0

May 05 14:19:41 pve2 kernel: down_read+0x48/0xc0

May 05 14:19:41 pve2 kernel: walk_component+0x108/0x190

May 05 14:19:41 pve2 kernel: path_lookupat+0x67/0x1a0

May 05 14:19:41 pve2 kernel: filename_lookup+0xe4/0x200

May 05 14:19:41 pve2 kernel: ? __pfx_zpl_put_link+0x10/0x10 [zfs]

May 05 14:19:41 pve2 kernel: ? strncpy_from_user+0x25/0x120

May 05 14:19:41 pve2 kernel: vfs_statx+0x95/0x1d0

May 05 14:19:41 pve2 kernel: vfs_fstatat+0xaa/0xe0

May 05 14:19:41 pve2 kernel: __do_sys_newfstatat+0x44/0x90

May 05 14:19:41 pve2 kernel: __x64_sys_newfstatat+0x1c/0x30

May 05 14:19:41 pve2 kernel: x64_sys_call+0x18bd/0x2480

May 05 14:19:41 pve2 kernel: do_syscall_64+0x81/0x170

May 05 14:19:41 pve2 kernel: ? __do_sys_newfstatat+0x53/0x90

May 05 14:19:41 pve2 kernel: ? syscall_exit_to_user_mode+0x86/0x260

May 05 14:19:41 pve2 kernel: ? do_syscall_64+0x8d/0x170

May 05 14:19:41 pve2 kernel: ? syscall_exit_to_user_mode+0x86/0x260

May 05 14:19:41 pve2 kernel: ? do_syscall_64+0x8d/0x170

May 05 14:19:41 pve2 kernel: ? syscall_exit_to_user_mode+0x86/0x260

May 05 14:19:41 pve2 kernel: ? do_syscall_64+0x8d/0x170

May 05 14:19:41 pve2 kernel: ? syscall_exit_to_user_mode+0x86/0x260

May 05 14:19:41 pve2 kernel: ? do_syscall_64+0x8d/0x170

May 05 14:19:41 pve2 kernel: ? do_syscall_64+0x8d/0x170

May 05 14:19:41 pve2 kernel: ? syscall_exit_to_user_mode+0x86/0x260

May 05 14:19:41 pve2 kernel: ? do_syscall_64+0x8d/0x170

May 05 14:19:41 pve2 kernel: ? do_syscall_64+0x8d/0x170

May 05 14:19:41 pve2 kernel: ? irqentry_exit+0x43/0x50

May 05 14:19:41 pve2 kernel: entry_SYSCALL_64_after_hwframe+0x78/0x80

May 05 14:19:41 pve2 kernel: RIP: 0033:0x72e89452b81a

May 05 14:19:41 pve2 kernel: RSP: 002b:00007ffd0ddcb1d8 EFLAGS: 00000246 ORIG_RAX: 0000000000000106

May 05 14:19:41 pve2 kernel: RAX: ffffffffffffffda RBX: 00005fa9970fb186 RCX: 000072e89452b81a

May 05 14:19:41 pve2 kernel: RDX: 00007ffd0ddcb3e0 RSI: 00007ffd0ddcb570 RDI: 00000000ffffff9c

May 05 14:19:41 pve2 kernel: RBP: 00007ffd0ddcb570 R08: 0000000000000000 R09: 0000000000000073

May 05 14:19:41 pve2 kernel: R10: 0000000000000000 R11: 0000000000000246 R12: 00005fa9970fb251

May 05 14:19:41 pve2 kernel: R13: 00005fa9b4b34d40 R14: 00005fa9b4b34f70 R15: 00007ffd0ddcd5d0

May 05 14:19:41 pve2 kernel: </TASK>

Subsequently, similar timeouts occur with the exact same call trace:

Code:

May 05 14:19:41 pve2 kernel: task:cron state:D stack:0 pid:1214 tgid:1214 ppid:1 flags:0x00000002

May 05 14:19:41 pve2 kernel: task:pve-firewall state:D stack:0 pid:1225 tgid:1225 ppid:1 flags:0x00004002

May 05 14:19:41 pve2 kernel: task:pvestatd state:D stack:0 pid:1232 tgid:1232 ppid:1 flags:0x00000002

May 05 14:19:41 pve2 kernel: task:pveproxy worker state:D stack:0 pid:1281 tgid:1281 ppid:1280 flags:0x00000002

May 05 14:19:41 pve2 kernel: task:pveproxy worker state:D stack:0 pid:1282 tgid:1282 ppid:1280 flags:0x00004002

May 05 14:19:41 pve2 kernel: task:pveproxy worker state:D stack:0 pid:1283 tgid:1283 ppid:1280 flags:0x00004002

May 05 14:19:41 pve2 kernel: task:pve-ha-lrm state:D stack:0 pid:1291 tgid:1291 ppid:1 flags:0x00000002

May 05 14:19:41 pve2 kernel: task:pvescheduler state:D stack:0 pid:6961 tgid:6961 ppid:1300 flags:0x00000002

May 05 14:19:41 pve2 kernel: task:pvescheduler state:D stack:0 pid:6962 tgid:6962 ppid:1300 flags:0x00004002

May 05 14:21:44 pve2 kernel: task:cron state:D stack:0 pid:1214 tgid:1214 ppid:1 flags:0x00000002

One of the processes where the timeouts occur is the certificate generation by pveproxy. (This snippet is after a reboot, so different pids.)

Code:

root 1292 0.4 0.1 88124 55552 ? Ss 14:52 0:00 /usr/bin/perl /usr/bin/pvecm updatecerts --silent

root 1293 0.0 0.1 88124 48184 ? D 14:52 0:00 \_ /usr/bin/perl /usr/bin/pvecm updatecerts --silent

May 05 14:52:23 pve2 systemd[1]: Starting pveproxy.service - PVE API Proxy Server...

May 05 14:53:53 pve2 systemd[1]: pveproxy.service: start-pre operation timed out. Terminating.

May 05 14:55:24 pve2 systemd[1]: pveproxy.service: State 'stop-sigterm' timed out. Killing.

May 05 14:55:24 pve2 systemd[1]: pveproxy.service: Killing process 1292 (pvecm) with signal SIGKILL.

May 05 14:55:24 pve2 systemd[1]: pveproxy.service: Killing process 1293 (pvecm) with signal SIGKILL.

May 05 14:56:54 pve2 systemd[1]: pveproxy.service: State 'final-sigterm' timed out. Killing.

May 05 14:56:54 pve2 systemd[1]: pveproxy.service: Killing process 1293 (pvecm) with signal SIGKILL.

GUI on pve1:

- error for pve2 on Join Cluster: Error: unable to create directory '/etc/pve/nodes' - Permission denied.

- '/etc/pve/nodes/pve2/pve-ssl.pem' does not exist! (500)

- error:0A000086:SSL routines::certificate verify failed (596)

Journal on pve1:

Code:

May 05 14:32:44 pve1 pveproxy[1527]: '/etc/pve/nodes/pve2/pve-ssl.pem' does not exist!

May 05 14:32:44 pve1 pveproxy[1527]: '/etc/pve/nodes/pve2/pve-ssl.pem' does not exist!

May 05 14:32:54 pve1 pveproxy[9517]: '/etc/pve/nodes/pve2/pve-ssl.pem' does not exist!

pve1 always has quorum. pve2 initially has quorum too, but fails to output pvecm status once its processes are hung.

Code:

Cluster information

-------------------

Name: pxc

Config Version: 2

Transport: knet

Secure auth: on

Quorum information

------------------

Date: Mon May 5 14:58:44 2025

Quorum provider: corosync_votequorum

Nodes: 2

Node ID: 0x00000001

Ring ID: 1.23

Quorate: Yes

Votequorum information

----------------------

Expected votes: 2

Highest expected: 2

Total votes: 2

Quorum: 2

Flags: Quorate

Membership information

----------------------

Nodeid Votes Name

0x00000001 1 192.168.0.200 (local)

0x00000002 1 192.168.0.201

From what I can see, creating or saving the certificates on pve2 somehow fails after it joins the cluster.

From there it seems to go south as that disables GUI access and any further communication within the cluster.

Updating to 8.4.1 (and kernel 6.8.12-10-pve) prior to creating/joining the cluster makes no difference.
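
To get a quick overview of which processes are stuck, the hung-task lines from the journal can be filtered with a few lines of Python. This is only a rough sketch: it assumes the kernel lines look like the ones quoted above, and the function name `blocked_tasks` is made up for illustration.

```python
import re

# Matches the kernel's hung-task summary lines, for example:
#   "... kernel: task:pveproxy worker state:D stack:0 pid:1281 tgid:1281 ..."
# The task name may contain spaces, so it is matched lazily up to "state:".
TASK_RE = re.compile(
    r"kernel: task:(?P<name>.+?)\s+state:(?P<state>\S+)\s+stack:\d+\s+pid:(?P<pid>\d+)"
)


def blocked_tasks(journal_text):
    """Return {pid: task name} for every task reported in state D."""
    tasks = {}
    for line in journal_text.splitlines():
        match = TASK_RE.search(line)
        if match and match.group("state") == "D":
            # A pid that is reported again later simply overwrites itself.
            tasks[int(match.group("pid"))] = match.group("name")
    return tasks
```

Feeding it the saved output of `journalctl -k` from pve2 should list cron, pve-firewall, pvestatd, the pveproxy workers, pve-ha-lrm and pvescheduler, i.e. the same set of tasks as in the snippet above.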

Any pointers on how to get this cluster going are very welcome!