Hi everyone,

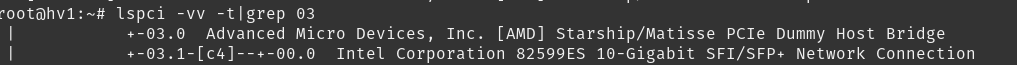

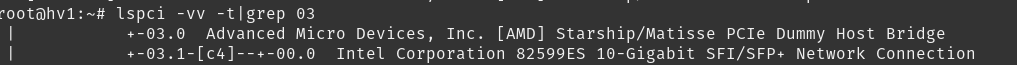

I was wondering if there is any fix for this issue. It looks like a problem with Intel 10G SFP+ adapters. I found a previous post (linked below) and have applied the ethtool workaround because, like the original poster, my logs were filling up and bringing down the server. For reference, the machine is an HPE DL385 Gen10 with an HP Ethernet 10Gb 2-port 560FLR-SFP+ Adapter, running Proxmox VE 7.1-7.

https://forum.proxmox.com/threads/pme-spurious-native-interrupt-kernel-meldungen.62850/
