I've now read about many problems with the new 6.8.5.2 and 6.8.5.3 kernels. In my case the server crashes and reboots during a simple backup job that has run fine for years on every previous kernel. I think something must be wrong with the memory management in the new kernel. Some of my hosts also failed to boot; on one host I solved that with intel_iommu=off. I was happy to have a remote console to start an older kernel, and I feel sorry for the people who don't have one.

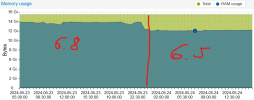

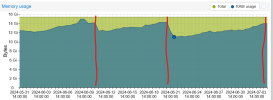

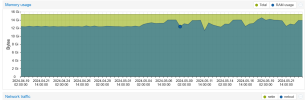

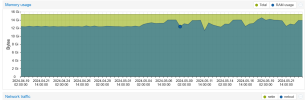

But here is what I'm wondering about. Here you can see the host's maximum memory usage over one month. Until around the 5th it ran the old 6.5 kernel and everything was fine, as it had been for years, with the ZFS ARC set to 5 GB of RAM. After the upgrade to kernel 6.8.5.2 on the 5th, memory usage immediately went up by 2 GB. Why? I lowered the ZFS ARC size to 4 GB, one GB less. And what do you see? The same higher memory usage, which sometimes kills the host, mostly during backup jobs with the integrated Proxmox backup.
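For anyone tuning the ARC the same way, here is a minimal sketch of the arithmetic behind those limits. The ZFS module parameter `zfs_arc_max` takes a value in bytes, so a 4 GB limit becomes 4 × 1024³ bytes. This assumes "GB" here means GiB. The helper names are hypothetical, and the `/etc/modprobe.d/zfs.conf` location is the one commonly used on Proxmox VE, not something taken from my setup above.

```python
# Hypothetical helpers: convert an ARC limit given in GiB (assumption: binary
# gigabytes, not decimal GB) into the byte value zfs_arc_max expects.

BYTES_PER_GIB = 1024 ** 3


def arc_max_bytes(gib: int) -> int:
    """Return the ARC limit in bytes for a size given in GiB."""
    if gib <= 0:
        raise ValueError("ARC size must be positive")
    return gib * BYTES_PER_GIB


def modprobe_line(gib: int) -> str:
    """Build an options line, e.g. for /etc/modprobe.d/zfs.conf (assumed path)."""
    return f"options zfs zfs_arc_max={arc_max_bytes(gib)}"


if __name__ == "__main__":
    # The old (5 GB) and the new (4 GB) ARC limit mentioned in this post.
    for size in (5, 4):
        print(modprobe_line(size))
    # prints:
    # options zfs zfs_arc_max=5368709120
    # options zfs zfs_arc_max=4294967296
```

If the root file system is on ZFS, the initramfs typically has to be regenerated (`update-initramfs -u -k all`) so the new limit is applied at boot.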

I hope you fix all of this quickly. This evening is already the third time this week that my monitoring has sent me an evening-ruining alert and I've had to start the server and reboot it again.

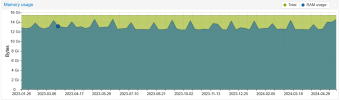

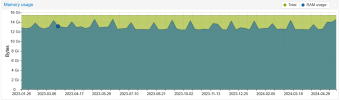

This is absolutely atypical, as you can see in my screenshot of the RAM usage. In all my years with Proxmox I had never seen graphs like the ones after the 5th. And if you look at the yearly maximum, RAM usage was never as high at any point in the last 12 months as it has been in the last two weeks with kernels 6.8.5.2 and 6.8.5.3.

For now I'm staying on the 6.5 kernel until this is solved.

Or is it a new feature that kernel 6.8 consumes 2 GB more memory? That's not nice, especially if you have tuned the ZFS ARC precisely on small systems with only 16 GB of RAM.
