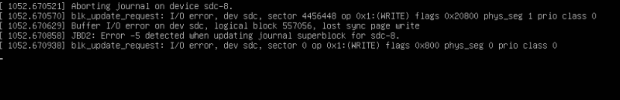

I run K3s and Rancher on my Proxmox virtual environment. Every now and then, one of the nodes shows error messages concerning the hard disk.

I see the error messages on the worker nodes and on the storage nodes that I created for Longhorn.

I don't know whether the problem is related to Rancher and Kubernetes or whether it is a Proxmox problem.

I'm not pointing fingers; I'm just looking for a solution.

Maybe one of the forum members knows what it is.

Normally, if I reboot the VM the problem disappears, but it keeps coming back, and not always on the same VM. As mentioned, it shows up on one of the worker nodes or one of the storage nodes.

What Proxmox shows
