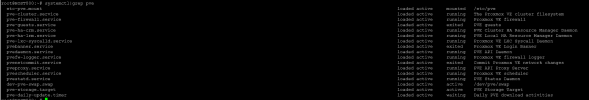

We connected the storage to the server (hardware) via Fibre Channel and set up multipath, but any time we try to work with the storage, Proxmox goes into the question-mark status.

Meanwhile, the VMs keep running normally, but none of the Proxmox tasks for them work. SSH is available, and the console is also available via the GUI, but every other section fails with a timeout error.

To get back to the normal status (the green checkmark), you have to restart the server itself.

The contents of the file /etc/multipath.conf:

Code:

defaults {
    user_friendly_names yes
    find_multipaths yes
}