I recently got a new OVH Scale range server, which comes with redundant networking (2x public, 2x vRack), and I can't get the virtual machines' network to work, with or without virtual MACs.

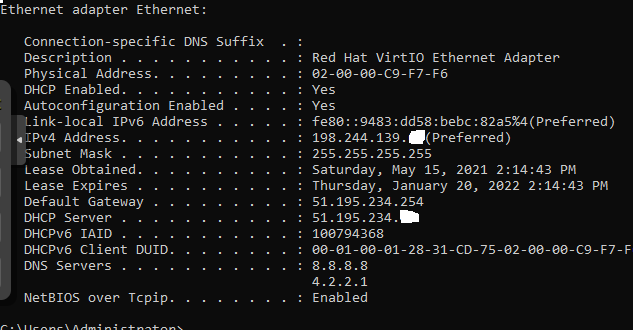

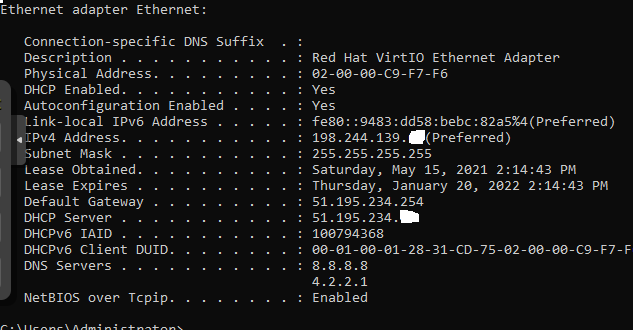

Windows ipconfig /all

Below are my network settings. I am not sure how to set them up correctly to get vmbr0 working for the VMs.

/etc/network/interfaces

Code:

    auto lo
    iface lo inet loopback

    iface enp193s0f0 inet dhcp

    iface enp133s0f0 inet manual

    iface enp133s0f1 inet manual

    iface enp193s0f1 inet manual

    iface enp9s0f3u2u2c2 inet manual

    auto bond0
    iface bond0 inet manual
        bond-slaves enp193s0f0 enp193s0f1
        bond-miimon 100
        bond-mode 802.3ad
        bond-xmit-hash-policy layer2+3

    auto vmbr0
    iface vmbr0 inet static
        address 51.195.234.XXX
        gateway 51.195.234.254
        bridge-ports bond0
        bridge-stp off
        bridge-fd 0

When applying this, it shows the following warning:

ifup -a

warning: enp193s0f0: ignoring ip address. Assigning an IP address is not allowed on enslaved interfaces. enp193s0f0 is enslaved to bond0
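The warning appears to point at the `iface enp193s0f0 inet dhcp` stanza: enp193s0f0 is listed under `bond-slaves`, so it cannot carry an address method of its own. As a minimal sketch, the following shell function lists bond slaves whose stanza is not `inet manual`. The function name `check_bond_slaves` is hypothetical, and it assumes the simple layout used in the file above (one `iface` line per interface, slaves listed on a `bond-slaves` line).

```shell
# Hypothetical helper: report bond slaves that declare an address method
# other than "manual" in an ifupdown-style interfaces file.
# Usage: check_bond_slaves /etc/network/interfaces
check_bond_slaves() {
    awk '
        # Remember the IPv4 method declared for each interface.
        $1 == "iface" && $3 == "inet" { method[$2] = $4 }
        # Remember every interface named on a bond-slaves line.
        $1 == "bond-slaves" { for (i = 2; i <= NF; i++) slave[$i] = 1 }
        END {
            for (name in slave)
                if ((name in method) && method[name] != "manual")
                    print name ": inet " method[name]
        }
    ' "$1"
}
```

Run against the file above, this should report only enp193s0f0. Changing that stanza to `iface enp193s0f0 inet manual` should silence the warning; whether that alone gets VM traffic flowing is a separate question.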

ip addr

Code:

    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: enp193s0f0: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1500 qdisc mq master bond0 state UP group default qlen 1000
        link/ether 04:3f:72:b4:6a:70 brd ff:ff:ff:ff:ff:ff
    3: enp193s0f1: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1500 qdisc mq master bond0 state UP group default qlen 1000
        link/ether 04:3f:72:b4:6a:70 brd ff:ff:ff:ff:ff:ff
    4: enp133s0f0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
        link/ether 0c:42:a1:6c:42:dc brd ff:ff:ff:ff:ff:ff
    5: enp133s0f1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
        link/ether 0c:42:a1:6c:42:dd brd ff:ff:ff:ff:ff:ff
    7: bond0: <BROADCAST,MULTICAST,MASTER,UP,LOWER_UP> mtu 1500 qdisc noqueue master vmbr0 state UP group default qlen 1000
        link/ether 04:3f:72:b4:6a:70 brd ff:ff:ff:ff:ff:ff
    8: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
        link/ether 04:3f:72:b4:6a:70 brd ff:ff:ff:ff:ff:ff
        inet 51.195.234.XXX/32 scope global vmbr0
           valid_lft forever preferred_lft forever
        inet6 fe80::63f:72ff:feb4:6a70/64 scope link
           valid_lft forever preferred_lft forever
    10: tap106i0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master vmbr0 state UNKNOWN group default qlen 1000
        link/ether 72:e3:07:7f:f5:57 brd ff:ff:ff:ff:ff:ff

According to the OVH website, the following MACs are part of the public network:

public (Public Aggregation): 04:3f:72:b4:6a:70, 04:3f:72:b4:6a:71

and the following are the vRack:

vrack (Private Aggregation): 0c:42:a1:6c:42:dd, 0c:42:a1:6c:42:dc
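In the `ip addr` output above, both bonded ports report 04:3f:72:b4:6a:70, because a port enslaved to an 802.3ad bond takes over the bond's MAC address. Matching ports against the OVH lists therefore needs the permanent hardware address. The sketch below maps a MAC to its aggregation using only the two lists quoted above; the function name `ovh_group_for_mac` is hypothetical, and the commented usage assumes `ethtool` is installed on the host.

```shell
# Hypothetical helper: map a MAC address to the OVH aggregation it belongs to,
# based on the MAC lists quoted from the OVH control panel.
ovh_group_for_mac() {
    # Lower-case the hex digits so the comparison is case-insensitive.
    mac=$(printf '%s' "$1" | tr 'A-F' 'a-f')
    case "$mac" in
        04:3f:72:b4:6a:70|04:3f:72:b4:6a:71) echo "public" ;;
        0c:42:a1:6c:42:dc|0c:42:a1:6c:42:dd) echo "vrack" ;;
        *) echo "unknown" ;;
    esac
}

# Usage on the host. "ethtool -P" prints "Permanent address: <mac>",
# so the MAC is the third whitespace-separated field.
#   for nic in enp193s0f0 enp193s0f1 enp133s0f0 enp133s0f1; do
#       mac=$(ethtool -P "$nic" | awk '{print $3}')
#       echo "$nic $mac $(ovh_group_for_mac "$mac")"
#   done
```

If the interface naming is as it appears above, the two enp193 ports should come back as public and the two enp133 ports as vrack.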

/etc/default/isc-dhcp-server

Code:

    INTERFACESv4="vmbr0"
    INTERFACESv6=""

/etc/dhcp/dhcpd.conf

Code:

    ddns-update-style none;
    default-lease-time 600;
    max-lease-time 7200;
    log-facility local7;

    option rfc3442-classless-static-routes code 121 = array of integer 8;
    option ms-classless-static-routes code 249 = array of integer 8;

    subnet 0.0.0.0 netmask 0.0.0.0 {
        authoritative;
        default-lease-time 21600000;
        max-lease-time 432000000;
        option routers 51.195.234.254;
        option domain-name-servers 8.8.8.8,4.2.2.1;
        option rfc3442-classless-static-routes 32, 51, 195, 234, 254, 0, 0, 0, 0, 0, 51, 195, 234, 254;
        option ms-classless-static-routes 32, 51, 195, 234, 254, 0, 0, 0, 0, 0, 51, 195, 234, 254;

        #ProxmoxIPv4
        host 1 {hardware ethernet 02:00:00:c9:f7:f6;fixed-address 198.244.139.XXX;option subnet-mask 255.255.255.255;option routers 51.195.234.254;}
    }
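For reference, the byte lists given to the two classless-static-routes options in dhcpd.conf follow the RFC 3442 encoding: a prefix length, then only the significant octets of the destination, then four octets for the router. Below is a minimal shell sketch of a decoder, handy for checking that the list says what is intended. The function name `decode_classless_routes` is hypothetical, and the sketch assumes well-formed input.

```shell
# Hypothetical helper: decode a comma-separated RFC 3442 byte list into
# "destination/prefix via router" lines. Assumes well-formed input.
decode_classless_routes() {
    # Turn "32, 51, 195" into the positional parameters 32 51 195.
    set -- $(printf '%s' "$1" | tr ',' ' ')
    while [ "$#" -gt 0 ]; do
        prefix=$1; shift
        # Only the significant destination octets are sent: ceil(prefix / 8).
        octets=$(( (prefix + 7) / 8 ))
        dest=""
        i=0
        while [ "$i" -lt 4 ]; do
            if [ "$i" -lt "$octets" ]; then
                part=$1; shift
            else
                part=0   # pad the omitted octets with zeros
            fi
            dest="${dest:+$dest.}$part"
            i=$((i + 1))
        done
        # The router is always sent as four full octets.
        echo "$dest/$prefix via $1.$2.$3.$4"
        shift 4
    done
}

decode_classless_routes "32, 51, 195, 234, 254, 0, 0, 0, 0, 0, 51, 195, 234, 254"
# prints:
#   51.195.234.254/32 via 0.0.0.0
#   0.0.0.0/0 via 51.195.234.254
```

So the list encodes a host route to the gateway 51.195.234.254 with router 0.0.0.0 (which RFC 3442 treats as on-link), followed by a default route via that gateway.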
