Hi everyone,

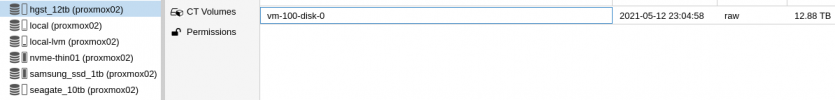

I have just upgraded to Proxmox 7 from 6.4-13 and have run into a problem with one of my VMs: the VM disk files on the two physical hard drives won't open. See the image below for one of the drives:

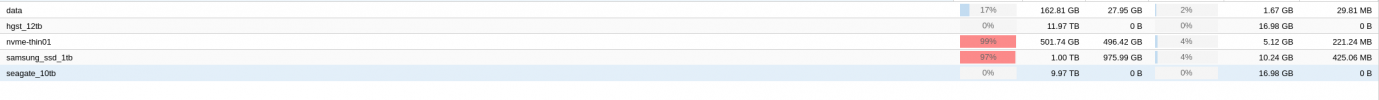

When I look at the file on the drive, its size seems to be right, but as you can see from the picture below, something is not right. The issue is the same for both hgst_12tb and seagate_10tb:

The drives nvme-thin01 and samsung_ssd_1tb work just fine.

I would very much like to get some help with this. Thanks.
