Hi guys! I wanted to set up my own server and recently bought a Dell OptiPlex with an 8th-gen Intel Core i5-8500 CPU for its Intel Quick Sync capabilities. The idea was to use it with Plex/Jellyfin for hardware transcoding, but I'm hitting a snag. This is the guide I'm trying to follow: vGpu Passthrough Guide

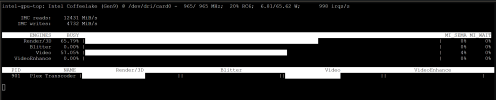

Also, this is where the VM crashes everytime I try to start it:

I edited my GRUB file to enable GVT (IOMMU was already enabled):

Code:

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt video=vesafb:off video=efifb:off initcall_blacklist=sysfb_init i915.enable_gvt=1"

This is the dmesg output after rebooting:

Code:

root@homeserver:~# dmesg | grep -e DMAR -e IOMMU

[ 0.011068] ACPI: DMAR 0x00000000B9B4B070 0000A8 (v01 INTEL EDK2 00000002 01000013)

[ 0.011101] ACPI: Reserving DMAR table memory at [mem 0xb9b4b070-0xb9b4b117]

[ 0.034261] DMAR: IOMMU enabled

[ 0.091471] DMAR: Host address width 39

[ 0.091472] DMAR: DRHD base: 0x000000fed90000 flags: 0x0

[ 0.091477] DMAR: dmar0: reg_base_addr fed90000 ver 1:0 cap 1c0000c40660462 ecap 19e2ff0505e

[ 0.091480] DMAR: DRHD base: 0x000000fed91000 flags: 0x1

[ 0.091483] DMAR: dmar1: reg_base_addr fed91000 ver 1:0 cap d2008c40660462 ecap f050da

[ 0.091485] DMAR: RMRR base: 0x000000ba5a4000 end: 0x000000ba7edfff

[ 0.091487] DMAR: RMRR base: 0x000000bd000000 end: 0x000000bf7fffff

[ 0.091489] DMAR-IR: IOAPIC id 2 under DRHD base 0xfed91000 IOMMU 1

[ 0.091490] DMAR-IR: HPET id 0 under DRHD base 0xfed91000

[ 0.091492] DMAR-IR: Queued invalidation will be enabled to support x2apic and Intr-remapping.

[ 0.094666] DMAR-IR: Enabled IRQ remapping in x2apic mode

[ 0.319766] DMAR: No ATSR found

[ 0.319767] DMAR: No SATC found

[ 0.319768] DMAR: IOMMU feature fl1gp_support inconsistent

[ 0.319769] DMAR: IOMMU feature pgsel_inv inconsistent

[ 0.319771] DMAR: IOMMU feature nwfs inconsistent

[ 0.319772] DMAR: IOMMU feature pasid inconsistent

[ 0.319773] DMAR: IOMMU feature eafs inconsistent

[ 0.319774] DMAR: IOMMU feature prs inconsistent

[ 0.319774] DMAR: IOMMU feature nest inconsistent

[ 0.319775] DMAR: IOMMU feature mts inconsistent

[ 0.319776] DMAR: IOMMU feature sc_support inconsistent

[ 0.319777] DMAR: IOMMU feature dev_iotlb_support inconsistent

[ 0.319778] DMAR: dmar0: Using Queued invalidation

[ 0.319781] DMAR: dmar1: Using Queued invalidation

[ 0.320216] DMAR: Intel(R) Virtualization Technology for Directed I/O

I added the required modules:

Code:

root@homeserver:~# cat /etc/modules

# /etc/modules: kernel modules to load at boot time.

#

# This file contains the names of kernel modules that should be loaded

# at boot time, one per line. Lines beginning with "#" are ignored.

# Parameters can be specified after the module name.

vfio

vfio_iommu_type1

vfio_pci

vfio_virqfd

# Modules required for Intel GVT

kvmgt

exngt

vfio-mdev

Then I rebooted and added the GPU to the Ubuntu VM.

But every time I try to start the VM, it freezes at the Ubuntu splash screen. I suspect a GPU conflict or driver issue that I need to resolve, but I'm not sure how to proceed. Any pointers would be appreciated. I looked at the Syslog and the Task log for the VM in the Proxmox GUI, but I don't see any errors that would tell me more.
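Since the GUI logs show nothing useful, a few host-side checks from the shell might narrow this down. The following is only a rough sketch, not a known fix: the helper names `has_kernel_param` and `filter_gpu_log` are made up for illustration, and the search terms are my guess at which kernel messages are relevant to GVT and VFIO.

```shell
#!/bin/sh
# Hypothetical helpers; the function names are made up for this sketch.

# Succeeds if the kernel parameter $1 appears as a whole word in the
# command line given as $2 (defaults to the running kernel's /proc/cmdline).
has_kernel_param() {
    param=$1
    cmdline=${2-$(cat /proc/cmdline)}
    case " $cmdline " in
        *" $param "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Reads kernel log text on stdin and keeps only the lines that mention
# GVT, i915, VFIO or mdev (case-insensitive). Prints nothing if none match.
filter_gpu_log() {
    grep -iE 'gvt|i915|vfio|mdev' || true
}

# Usage on the Proxmox host (nothing below runs automatically):
#   has_kernel_param i915.enable_gvt=1 && echo "GVT parameter is active"
#   dmesg | filter_gpu_log
#   journalctl -k -b | filter_gpu_log
#   lsmod | grep -E 'kvmgt|vfio'
```

The first check confirms that the GRUB edit actually reached the running kernel, which only happens after `update-grub` and a reboot. The filtered kernel log and the `lsmod` output should then show whether i915 and the VFIO modules loaded without complaints.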

This is the VM config file, in case it helps:

Code:

/etc/pve/nodes/homeserver/qemu-server/101.conf

bios: ovmf

boot: order=scsi0;ide2;net0

cores: 6

cpu: host

efidisk0: local-lvm:vm-101-disk-0,efitype=4m,pre-enrolled-keys=1,size=4M

hostpci0: 0000:00:02.0,mdev=i915-GVTg_V5_4

ide2: none,media=cdrom

machine: q35

memory: 8192

meta: creation-qemu=8.0.2,ctime=1698431405

name: UbuntuLTS-Server

net0: virtio=42:8E:F3:3C:D8:B4,bridge=vmbr0,firewall=1

numa: 0

ostype: l26

scsi0: local-lvm:vm-101-disk-1,iothread=1,size=100G

scsihw: virtio-scsi-single

smbios1: uuid=57a0d333-bd46-472c-a518-7fefbda1e638

sockets: 1

vga: qxl,memory=24

vmgenid: c7044731-a9e2-4ffa-a38a-6ca222c8a5d8
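One more thing that may be worth checking is whether the host really offers the mdev type named in `hostpci0`. The sketch below assumes the standard sysfs layout for mediated devices (`mdev_supported_types` under the PCI device). The helper name `mdev_available_instances` and its optional third argument are hypothetical and exist only to make the check reusable and testable.

```shell
#!/bin/sh
# Hypothetical helper; the name and argument order are made up for this sketch.

# Prints how many instances of an mdev type are still available on a PCI
# device, or fails if the device does not offer that type at all.
#   $1 = PCI address, e.g. 0000:00:02.0
#   $2 = mdev type,   e.g. i915-GVTg_V5_4
#   $3 = sysfs root (optional, defaults to /sys)
mdev_available_instances() {
    type_dir="${3:-/sys}/bus/pci/devices/$1/mdev_supported_types/$2"
    if [ ! -r "$type_dir/available_instances" ]; then
        echo "mdev type $2 is not offered by $1" >&2
        return 1
    fi
    cat "$type_dir/available_instances"
}

# Usage on the Proxmox host, with the values from hostpci0:
#   mdev_available_instances 0000:00:02.0 i915-GVTg_V5_4
#   ls /sys/bus/pci/devices/0000:00:02.0/mdev_supported_types
```

If the `mdev_supported_types` directory does not exist, GVT is probably not active on the host, despite the kernel parameter. If the type exists but reports 0 available instances, another VM or a leftover mdev may already be holding it.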
