Dear All,

I am having issues restoring an LXC container that includes an additional mounted disk. To be clear, the container itself restored properly: it starts and is accessible, but I can't see any data in the mount point where the additional disk was attached.

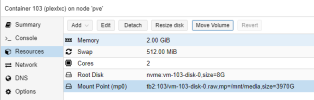

In the restored LXC I can see the disk mounted, just as it was before the restore:

```
root@plexlxc:~# df -h
Filesystem                        Size Used Avail Use% Mounted on
/dev/mapper/nvme-vm--103--disk--1 7.8G 3.5G  4.0G  47% /
/dev/mapper/nvme-vm--103--disk--2 3.9T  32K  3.7T   1% /mnt/media
none                              492K 4.0K  488K   1% /dev
udev                               32G    0   32G   0% /dev/dri
tmpfs                              32G 4.0K   32G   1% /dev/shm
tmpfs                              13G 1.6M   13G   1% /run
tmpfs                             5.0M    0  5.0M   0% /run/lock
```

But I can't see any data in this mount point, even though I had a lot of stuff there:

```
root@plexlxc:~# ll /mnt/media/
total 24
drwxr-xr-x 3 root root  4096 Feb 14 12:19 .
drwxr-xr-x 5 root root  4096 Feb 14 12:19 ..
drwx------ 2 root root 16384 Feb 14 12:19 lost+found
```

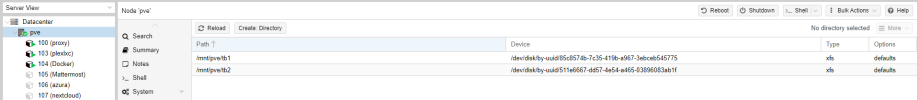

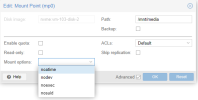

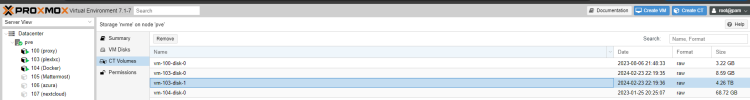

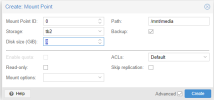

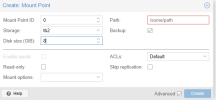

The disks are attached to the Proxmox server via Thunderbolt 4 as directory storage (the second one is used for the LXC).

What am I doing wrong? How can I recover my data?