I'm fairly new to Proxmox and have some Linux experience.

I'm trying to run VMs on Proxmox, but whenever I start two or more VMs, the host's NIC link seems to go down.

If I start the VMs without a network device attached, everything works.

What I've tried so far:

- Changing cables
- Using a PCIe network card
- Reinstalling Proxmox

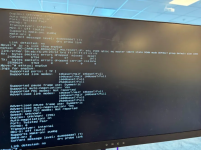



Output of `ip a`:

```
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host noprefixroute
       valid_lft forever preferred_lft forever
2: enp5s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master vmbr0 state UP group default qlen 1000
    link/ether 50:eb:f6:29:29:5f brd ff:ff:ff:ff:ff:ff
3: wlo1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether 44:e5:17:16:5d:55 brd ff:ff:ff:ff:ff:ff
    altname wlp0s20f3
4: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 50:eb:f6:29:29:5f brd ff:ff:ff:ff:ff:ff
    inet 10.247.160.69/22 scope global vmbr0
       valid_lft forever preferred_lft forever
    inet6 fe80::52eb:f6ff:fe29:295f/64 scope link
       valid_lft forever preferred_lft forever
```


Logs from the Proxmox node:


```
Jan 31 10:09:38 pve pvedaemon[1181]: <root@pam> end task UPID:pve:00000648:000013CF:679C9351:vncshell::root@pam: OK
Jan 31 10:09:48 pve pvedaemon[1675]: starting termproxy UPID:pve:0000068B:00001831:679C935C:vncshell::root@pam:
Jan 31 10:09:48 pve pvedaemon[1181]: <root@pam> starting task UPID:pve:0000068B:00001831:679C935C:vncshell::root@pam:
Jan 31 10:09:48 pve pvedaemon[1180]: <root@pam> successful auth for user 'root@pam'
Jan 31 10:09:48 pve login[1678]: pam_unix(login:session): session opened for user root(uid=0) by root(uid=0)
Jan 31 10:09:48 pve systemd-logind[824]: New session 4 of user root.
Jan 31 10:09:48 pve systemd[1]: Started session-4.scope - Session 4 of User root.
Jan 31 10:09:48 pve login[1683]: ROOT LOGIN on '/dev/pts/0'
Jan 31 10:09:48 pve kernel: igc 0000:05:00.0 enp5s0: NIC Link is Down
Jan 31 10:09:48 pve kernel: vmbr0: port 1(enp5s0) entered disabled state
Jan 31 10:10:51 pve kernel: igc 0000:05:00.0 enp5s0: NIC Link is Up 1000 Mbps Full Duplex, Flow Control: RX
Jan 31 10:10:51 pve kernel: vmbr0: port 1(enp5s0) entered blocking state
Jan 31 10:10:51 pve kernel: vmbr0: port 1(enp5s0) entered forwarding state
Jan 31 10:10:52 pve kernel: igc 0000:05:00.0 enp5s0: NIC Link is Down
Jan 31 10:10:52 pve kernel: vmbr0: port 1(enp5s0) entered disabled state
Jan 31 10:11:55 pve kernel: igc 0000:05:00.0 enp5s0: NIC Link is Up 1000 Mbps Full Duplex, Flow Control: RX
Jan 31 10:11:55 pve kernel: vmbr0: port 1(enp5s0) entered blocking state
Jan 31 10:11:55 pve kernel: vmbr0: port 1(enp5s0) entered forwarding state
Jan 31 10:12:03 pve kernel: igc 0000:05:00.0 enp5s0: NIC Link is Down
Jan 31 10:12:03 pve kernel: vmbr0: port 1(enp5s0) entered disabled state
Jan 31 10:13:06 pve kernel: igc 0000:05:00.0 enp5s0: NIC Link is Up 1000 Mbps Full Duplex, Flow Control: RX
Jan 31 10:13:06 pve kernel: vmbr0: port 1(enp5s0) entered blocking state
Jan 31 10:13:06 pve kernel: vmbr0: port 1(enp5s0) entered forwarding state
```

I can provide more information if needed.
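Going by the timestamps, each outage in the log excerpt seems to last about a minute. To check that more easily (and to run it over a longer `journalctl -k` dump), here is a rough Python sketch that pairs each `NIC Link is Down` line with the next `NIC Link is Up` line and prints how long the link stayed down. It assumes only the journal line format shown in the excerpt. The `outages` helper and the regex are hypothetical names of my own, not anything from Proxmox, and the sketch does not handle logs that cross midnight.

```python
import re
from datetime import datetime

# The igc kernel lines from the journal excerpt ("Mon DD HH:MM:SS host source: message").
# The log has no year, so only the time of day is parsed (midnight wraparound is NOT handled).
LOG = """\
Jan 31 10:09:48 pve kernel: igc 0000:05:00.0 enp5s0: NIC Link is Down
Jan 31 10:10:51 pve kernel: igc 0000:05:00.0 enp5s0: NIC Link is Up 1000 Mbps Full Duplex, Flow Control: RX
Jan 31 10:10:52 pve kernel: igc 0000:05:00.0 enp5s0: NIC Link is Down
Jan 31 10:11:55 pve kernel: igc 0000:05:00.0 enp5s0: NIC Link is Up 1000 Mbps Full Duplex, Flow Control: RX
Jan 31 10:12:03 pve kernel: igc 0000:05:00.0 enp5s0: NIC Link is Down
Jan 31 10:13:06 pve kernel: igc 0000:05:00.0 enp5s0: NIC Link is Up 1000 Mbps Full Duplex, Flow Control: RX
"""

# Hypothetical pattern: captures the time of day and whether the link went Up or Down.
LINK_RE = re.compile(r"^\w{3} +\d+ (\d{2}:\d{2}:\d{2}) .*NIC Link is (Up|Down)")


def outages(log: str) -> list[tuple[str, str, int]]:
    """Return (down_time, up_time, seconds_down) for each Down -> Up pair in the log."""
    result = []
    down_at = None  # (timestamp string, parsed time) of the last unmatched "Down"
    for line in log.splitlines():
        m = LINK_RE.match(line)
        if not m:
            continue  # skip lines that are not NIC link messages
        ts, state = m.groups()
        t = datetime.strptime(ts, "%H:%M:%S")
        if state == "Down":
            down_at = (ts, t)
        elif down_at is not None:
            seconds = int((t - down_at[1]).total_seconds())
            result.append((down_at[0], ts, seconds))
            down_at = None
    return result


if __name__ == "__main__":
    for down, up, secs in outages(LOG):
        print(f"down {down} -> up {up}: {secs} s")
```

On the six `igc` lines from the excerpt, it reports three outages of 63 s each.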