Hello all,

I am facing an issue with my Proxmox setup where my GPU devices remain in the same IOMMU group due to hardware bifurcation on the motherboard. This prevents me from isolating them properly for use in different VMs.

I would appreciate any assistance or suggestions on how to resolve this issue and achieve proper separation of the GPU devices into different IOMMU groups.

Thanks everyone!

System Details:

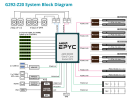

- Motherboard: Gigabyte G292-Z20

- GPUs: Nvidia RTX A4000

IOMMU Grouping:
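For context, group membership can be read from sysfs, where each directory under /sys/kernel/iommu_groups is one group and its devices/ subdirectory holds one entry per PCI address. The sketch below is one way to produce a listing in the format used in this post; it is not necessarily the exact command that was run. The function name list_iommu_groups and its optional root argument are hypothetical, added only so the loop can be pointed at a test directory.

```shell
#!/usr/bin/env bash
# Print one line per PCI device, prefixed with its IOMMU group number.
# The optional first argument (hypothetical, for testing) overrides the sysfs root.
list_iommu_groups() {
    local root="${1:-/sys/kernel/iommu_groups}"
    local dev addr group desc
    for dev in "$root"/*/devices/*; do
        [ -e "$dev" ] || continue        # glob matched nothing: IOMMU off or no groups
        addr="${dev##*/}"                # PCI address, e.g. 0000:c3:00.0
        group="${dev%/devices/*}"        # drop the trailing /devices/<addr>
        group="${group##*/}"             # keep only the group number
        desc=""
        if command -v lspci >/dev/null 2>&1; then
            desc="$(lspci -nns "$addr" 2>/dev/null)"
        fi
        # Fall back to the bare address when lspci is missing or finds nothing.
        printf 'IOMMU Group %s %s\n' "$group" "${desc:-$addr}"
    done
}

list_iommu_groups "$@"
```

Groups come out in glob order, so group 10 sorts before group 2; pipe the output through `sort -V` if numeric order matters.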

Despite my efforts, the GPUs are grouped together in IOMMU Group 0. Here is the output:

Code:

IOMMU Group 0 c0:01.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge [1022:1482]

IOMMU Group 0 c0:01.1 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse GPP Bridge [1022:1483]

IOMMU Group 0 c1:00.0 PCI bridge [0604]: PMC-Sierra Inc. Device [11f8:4052]

IOMMU Group 0 c1:00.1 Memory controller [0580]: PMC-Sierra Inc. Device [11f8:4052]

IOMMU Group 0 c2:00.0 PCI bridge [0604]: PMC-Sierra Inc. Device [11f8:4052]

IOMMU Group 0 c2:01.0 PCI bridge [0604]: PMC-Sierra Inc. Device [11f8:4052]

IOMMU Group 0 c3:00.0 VGA compatible controller [0300]: NVIDIA Corporation GA104GL [RTX A4000] [10de:24b0] (rev a1)

IOMMU Group 0 c3:00.1 Audio device [0403]: NVIDIA Corporation GA104 High Definition Audio Controller [10de:228b] (rev a1)

IOMMU Group 0 c4:00.0 VGA compatible controller [0300]: NVIDIA Corporation GA104GL [RTX A4000] [10de:24b0] (rev a1)

IOMMU Group 0 c4:00.1 Audio device [0403]: NVIDIA Corporation GA104 High Definition Audio Controller [10de:228b] (rev a1)

GRUB Configuration:

Here is my current GRUB configuration:

Code:

GRUB_DEFAULT=0

GRUB_TIMEOUT=5

GRUB_DISTRIBUTOR=`lsb_release -i -s 2> /dev/null || echo Debian`

GRUB_CMDLINE_LINUX="quiet amd_iommu=on pci_acs_override=downstream,multifunction"

GRUB_CMDLINE_LINUX_DEFAULT="quiet amd_iommu=on pci_acs_override=downstream,multifunction"

Motherboard Configuration:

- IOMMU: Enabled

- ARI Support: Disabled

- ACS: Not visible in BIOS, but mentioned in the manual (considering BIOS downgrade)

- Build Date: 08/03/2021

- Firmware Version: R22
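
Two points from the configuration above can be checked from a shell on the host: whether the GRUB parameters actually reached the running kernel (they only apply after `update-grub` and a reboot, and only if the host boots through GRUB), and whether the bridges in the listing advertise an ACS capability at all. The following is a rough sketch under those assumptions. The helper names missing_cmdline_params and acs_state are hypothetical, and the exact wording of `lspci -vvv` output may differ between pciutils versions. Note also that `pci_acs_override` is only honored by kernels that carry the ACS override patch; as far as I know the Proxmox kernel does, but that is worth confirming.

```shell
#!/usr/bin/env bash
# Print every expected kernel parameter that is absent from a cmdline file.
missing_cmdline_params() {
    local cmdline_file="$1"; shift
    local cmdline param
    cmdline="$(cat "$cmdline_file")"
    for param in "$@"; do
        case " $cmdline " in
            *" $param "*) ;;                 # present as a whole word
            *) printf '%s\n' "$param" ;;     # not found
        esac
    done
}

# Read `lspci -vvv` text on stdin and report the ACS control line, if any.
acs_state() {
    local text
    text="$(cat)"
    if printf '%s\n' "$text" | grep -q 'Access Control Services'; then
        printf '%s\n' "$text" | grep 'ACSCtl:' | sed 's/^[[:space:]]*//'
    else
        echo 'no ACS capability reported'
    fi
}

# Example run on the host (run as root so lspci can read extended capabilities).
if [ -r /proc/cmdline ]; then
    missing_cmdline_params /proc/cmdline amd_iommu=on \
        'pci_acs_override=downstream,multifunction'
fi
if command -v lspci >/dev/null 2>&1; then
    # Bridge addresses taken from the IOMMU listing in this post.
    for bridge in c0:01.1 c1:00.0 c2:00.0 c2:01.0; do
        printf '%s: ' "$bridge"
        lspci -vvv -s "$bridge" 2>/dev/null | acs_state
    done
fi
```

An empty result from the first check means both parameters are on the kernel command line. A bridge that reports no ACS capability cannot be split by firmware settings alone, which is the case the override parameter is meant to work around.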

Error:

When I try to run two VMs at the same time, each using one of the two GPUs in the shared group, I get the following error:

Code:

kvm: -device vfio-pci,host=0000:c4:00.0,id=hostpci0,bus=pci.0,addr=0x10,rombar=0: vfio 0000:c4:00.0: failed to open /dev/vfio/0: Device or resource busy

TASK ERROR: start failed: QEMU exited with code 1
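
The path in the error follows from the grouping: VFIO exposes one character device per IOMMU group, named after the group number, and a group can only be held by one process at a time. With both GPUs in group 0, each VM needs /dev/vfio/0, so whichever starts second finds it busy. As a small sketch, the group of a given device can be looked up through its iommu_group symlink in sysfs. The function name iommu_group_of and its optional sysfs-root argument are hypothetical, added for illustration and testing.

```shell
#!/usr/bin/env bash
# Print the IOMMU group number of a PCI device such as 0000:c4:00.0.
# The optional second argument (hypothetical, for testing) overrides /sys.
iommu_group_of() {
    local addr="$1"
    local sysfs="${2:-/sys}"
    local link="$sysfs/bus/pci/devices/$addr/iommu_group"
    [ -L "$link" ] || { echo "no iommu_group link for $addr" >&2; return 1; }
    # The symlink target ends in .../iommu_groups/<number>.
    basename "$(readlink "$link")"
}

# The two GPU addresses from the listing in this post.
for dev in 0000:c3:00.0 0000:c4:00.0; do
    printf '%s -> group %s\n' "$dev" \
        "$(iommu_group_of "$dev" 2>/dev/null || echo unknown)"
done
```

If both lines report the same group, the two cards cannot be passed to separate VMs until the grouping itself changes.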