Hi All

I have an LXC container running Immich.

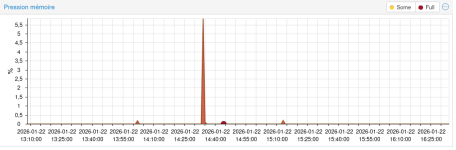

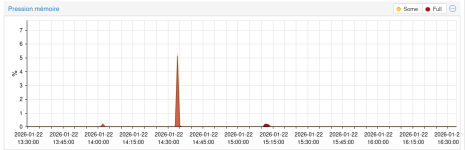

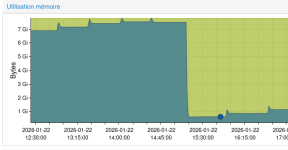

I see this error, which may be linked to a memory issue:

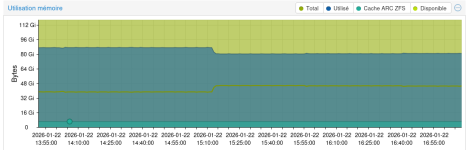

Code:

Jan 22 15:12:16 pve kernel: immich-api invoked oom-killer: gfp_mask=0xcc0(GFP_KERNEL), order=0, oom_score_adj=0

Jan 22 15:12:16 pve kernel: CPU: 2 UID: 100999 PID: 2133645 Comm: immich-api Tainted: P O 6.17.4-2-pve #1 PREEMPT(voluntary)

Jan 22 15:12:16 pve kernel: Tainted: [P]=PROPRIETARY_MODULE, [O]=OOT_MODULE

Jan 22 15:12:16 pve kernel: Call Trace:

Jan 22 15:12:16 pve kernel: <TASK>

Jan 22 15:12:16 pve kernel: dump_stack_lvl+0x5f/0x90

Jan 22 15:12:16 pve kernel: dump_stack+0x10/0x18

Jan 22 15:12:16 pve kernel: dump_header+0x48/0x1be

Jan 22 15:12:16 pve kernel: oom_kill_process.cold+0x8/0x87

Jan 22 15:12:16 pve kernel: out_of_memory+0x22f/0x4d0

Jan 22 15:12:16 pve kernel: mem_cgroup_out_of_memory+0x100/0x120

Jan 22 15:12:16 pve kernel: try_charge_memcg+0x42b/0x6e0

Jan 22 15:12:16 pve kernel: charge_memcg+0x34/0x90

Jan 22 15:12:16 pve kernel: __mem_cgroup_charge+0x2d/0xa0

Jan 22 15:12:16 pve kernel: do_anonymous_page+0x389/0x990

Jan 22 15:12:16 pve kernel: ? ___pte_offset_map+0x1c/0x180

Jan 22 15:12:16 pve kernel: __handle_mm_fault+0xb55/0xfd0

Jan 22 15:12:16 pve kernel: handle_mm_fault+0x119/0x370

Jan 22 15:12:16 pve kernel: do_user_addr_fault+0x2f8/0x830

Jan 22 15:12:16 pve kernel: exc_page_fault+0x7f/0x1b0

Jan 22 15:12:16 pve kernel: asm_exc_page_fault+0x27/0x30

Jan 22 15:12:16 pve kernel: RIP: 0033:0x7aa9317080f4

Jan 22 15:12:16 pve kernel: Code: 49 14 00 48 8d 0c 1e 49 39 d0 49 89 48 60 0f 95 c2 48 29 d8 0f b6 d2 48 83 c8 01 48 c1 e2 02 48 09 da 48 83 ca 01 48 89 56 08 <48> 89 41 08 >

Jan 22 15:12:16 pve kernel: RSP: 002b:00007fffe8d3deb0 EFLAGS: 00010206

Jan 22 15:12:16 pve kernel: RAX: 00000000000088f1 RBX: 0000000000002010 RCX: 00000001b9cf0710

Jan 22 15:12:16 pve kernel: RDX: 0000000000002011 RSI: 00000001b9cee700 RDI: 0000000000000004

Jan 22 15:12:16 pve kernel: RBP: fffffffffffffe48 R08: 00007aa93184cac0 R09: 0000000000000001

Jan 22 15:12:16 pve kernel: R10: 00007aa93184cd10 R11: 00000000000001ff R12: 0000000000002000

Jan 22 15:12:16 pve kernel: R13: 0000000000000000 R14: 00000000000001ff R15: 00007aa93184cb20

Jan 22 15:12:16 pve kernel: </TASK>

Jan 22 15:12:16 pve kernel: Memory cgroup out of memory: Killed process 2133645 (immich-api) total-vm:26518312kB, anon-rss:7737848kB, file-rss:45788kB, shmem-rss:0kB, UID:100999 pgtables:60420kB oom_score_adj:0

arc_summary gives:

Code:

ZFS Subsystem Report Thu Jan 22 21:47:31 2026

Linux 6.17.4-2-pve 2.3.4-pve1

Machine: pve (x86_64) 2.3.4-pve1

ARC status:

Total memory size: 125.7 GiB

Min target size: 3.1 % 3.9 GiB

Max target size: 5.0 % 6.3 GiB

Target size (adaptive): 99.9 % 6.3 GiB

Current size: 99.9 % 6.3 GiB

Free memory size: 40.2 GiB

Available memory size: 35.9 GiB

ARC structural breakdown (current size): 6.3 GiB

Compressed size: 80.7 % 5.1 GiB

Overhead size: 7.7 % 496.5 MiB

Bonus size: 1.9 % 119.3 MiB

Dnode size: 5.7 % 367.9 MiB

Dbuf size: 2.3 % 145.4 MiB

Header size: 1.4 % 92.6 MiB

L2 header size: 0.0 % 0 Bytes

ABD chunk waste size: 0.2 % 14.5 MiB

Any advice is welcome.
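
P.S. For scale, here is a rough Python sketch that converts the kB figures from the "Killed process" line of the kernel log into GiB, so they can be compared with the container's memory limit. The helper names (`parse_kb_fields`, `kb_to_gib`) are my own and not part of any Proxmox or Immich tooling; the input string is just the kill line with the timestamp prefix dropped.

```python
import re

# Kill line copied from the kernel log above (timestamp prefix dropped).
KILL_LINE = (
    "Memory cgroup out of memory: Killed process 2133645 (immich-api) "
    "total-vm:26518312kB, anon-rss:7737848kB, file-rss:45788kB, "
    "shmem-rss:0kB, UID:100999 pgtables:60420kB oom_score_adj:0"
)


def parse_kb_fields(line):
    """Return every 'name:<number>kB' field found in the line as {name: kB}."""
    return {
        name: int(value)
        for name, value in re.findall(r"([\w-]+):(\d+)kB", line)
    }


def kb_to_gib(kb):
    """Convert kibibytes (what the kernel prints as 'kB') to GiB."""
    return kb / (1024 * 1024)


fields = parse_kb_fields(KILL_LINE)
for name, kb in fields.items():
    print(f"{name}: {kb_to_gib(kb):.2f} GiB")
```

By this conversion the anon-rss of the killed immich-api process works out to roughly 7.4 GiB.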