Hi All,

I am trying to mount a nfs folder from a nfs server which is in a proxmox VM.

in the VM i see the exports :

pool1/proxmox is a dataset

pool1/proxmox/save is a dataset

toto is a folder (I try also as only dataset was with error also)

On my node pve the command line:

displays

Iptables of the node show :

I try to in datacenter in storage :

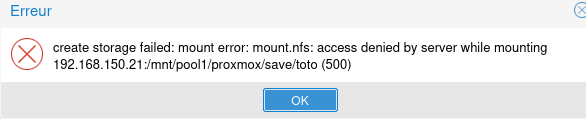

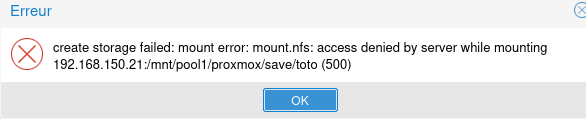

and I have this error

Same error if I export in the vm a folder or a dataset.

I tried to modify directly the file /etc/pve storage.conf

The storage appears but the item Save-NFS in the node as a ? and if I select the item, same error (500).

No firewall is active at this moment (datacenter level and vm level)

How can I solve this 500 error ?

Or what I need to look in order that host and the VM can have a common share ? NFS or other options ?

Thanks

I am trying to mount an NFS folder from an NFS server that runs in a Proxmox VM.

In the VM I see these exports:

Code:

nas4free: ~# showmount -e

Exports list on localhost:

/mnt/pool1/proxmox/save/toto 192.168.150.0

pool1/proxmox is a dataset

pool1/proxmox/save is a dataset

toto is a folder (I also tried exporting only the dataset, with the same error)

On my PVE node, the command:

Code:

pvesm scan nfs 192.168.150.21

displays:

Code:

clnt_create: RPC: Program not registered

command '/sbin/showmount --no-headers --exports 192.168.150.21' failed: exit code 1

The iptables rules on the node show:

Code:

root@pve:~# iptables -L

Chain INPUT (policy ACCEPT)

target prot opt source destination

ACCEPT tcp -- anywhere anywhere tcp dpt:nfs

ACCEPT tcp -- anywhere anywhere tcp dpt:sunrpc

ACCEPT udp -- anywhere anywhere udp dpt:nfs

ACCEPT udp -- anywhere anywhere udp dpt:sunrpcI try to in datacenter in storage :

and I have this error

I get the same error whether the VM exports a folder or a dataset.

I also tried editing the file /etc/pve/storage.conf directly.

The storage then appears, but the Save-NFS item under the node shows a ?, and selecting it gives the same error (500).

No firewall is active at the moment (at either the datacenter or the VM level).
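With no firewall in the way, the `clnt_create: RPC: Program not registered` message from the scan above generally means the portmapper on the server answered but the mount service was not registered with it. A minimal sketch for checking that from the node is below. The function name `missing_rpc_programs` is made up for illustration, and it assumes the standard RPC program numbers 100005 (mountd) and 100003 (nfs) appear in the first column of `rpcinfo -p` output.

```shell
# Hypothetical helper (not part of Proxmox or nas4free): reads the output of
# `rpcinfo -p <server>` on stdin and prints the name of each NFS-related RPC
# program that is NOT registered. Prints nothing if both are present.
# Assumes the standard program numbers: 100005 = mountd, 100003 = nfs.
missing_rpc_programs() {
    awk '
        $1 == "100005" { mountd = 1 }   # mount service, queried by showmount
        $1 == "100003" { nfs = 1 }      # NFS service itself
        END {
            if (!mountd) print "mountd"
            if (!nfs)    print "nfs"
        }
    '
}

# Usage on the PVE node (needs network access to the VM, so left commented):
#   rpcinfo -p 192.168.150.21 | missing_rpc_programs
```

If this prints `mountd`, the NFS service in the VM is probably not fully started, and that would need to be checked on the VM side before retrying `pvesm scan nfs`.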

How can I solve this 500 error?

Or what should I look at so that the host and the VM can have a common share? NFS, or are there other options?

Thanks
