Good day,

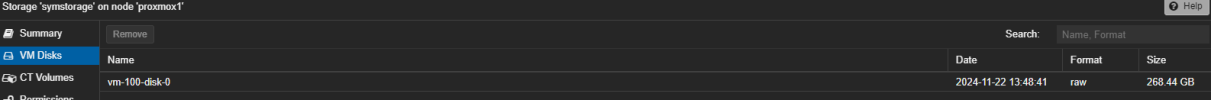

I have a PVE cluster that I set up and connected to a Synology iSCSI drive. I was able to install an OS on a VM in the cluster, using the iSCSI storage for the data drive. Everything was working correctly until I came back into the office today (Monday): for some reason the IP address of the iSCSI device had changed, and my VM lost its HDD.

I was able to remap the iSCSI connection successfully, but I still cannot access that VM's HDD. As far as I can tell, nothing has changed other than the IP address. However, when I go to the storage section of the connection/node, I can see the VM disk that I want to attach to the VM.

My question is: can I attach that disk to the VM and restart the VM with it connected? If so, how do I do that? Or is this a bug that needs to be fixed in a future release?

TIA
