Hi guys,

I need some advice.

I have a QSAN XS3326 NAS with 2 controllers. Initially, I configured it for iSCSI with multipath. The NAS has 8 x 10Gb ports, so I configured each port individually.

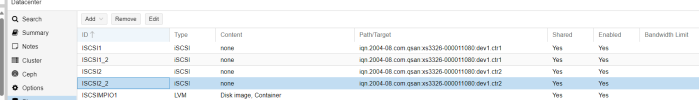

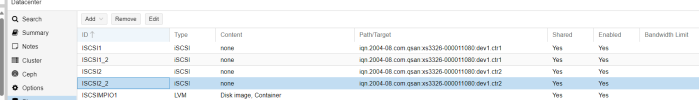

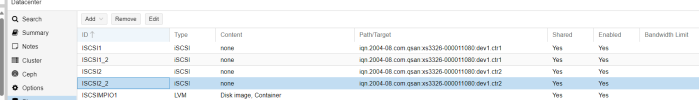

I manually added each iSCSI storage individually in Proxmox and created one LVM on top of it to handle the storage.

Then I bonded 4 x 10Gb ports on each controller of the NAS. As expected, Proxmox still has multipath paths to the original IPs, so it now shows faulty paths.

(I have already removed some of the iSCSI storage entries to see if that would fix the situation shown below.)

    root@imm4:/etc# iscsiadm -m session
    tcp: [1] 10.254.16.102:3260,1 iqn.2004-08.com.qsan:xs3326-000011080:dev1.ctr1 (non-flash)
    tcp: [2] 10.254.16.104:3260,1 iqn.2004-08.com.qsan:xs3326-000011080:dev1.ctr1 (non-flash)
    tcp: [3] 10.254.16.101:3260,1 iqn.2004-08.com.qsan:xs3326-000011080:dev1.ctr1 (non-flash)
    tcp: [4] 10.254.16.108:3260,1 iqn.2004-08.com.qsan:xs3326-000011080:dev1.ctr2 (non-flash)
    tcp: [5] 10.254.16.106:3260,1 iqn.2004-08.com.qsan:xs3326-000011080:dev1.ctr2 (non-flash)
    tcp: [6] 10.254.16.105:3260,1 iqn.2004-08.com.qsan:xs3326-000011080:dev1.ctr2 (non-flash)

    root@imm4:/etc# multipath -ll
    mpatha (3200a00137811a640) dm-4 QSAN,XS3326
    size=16T features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
    `-+- policy='round-robin 0' prio=50 status=active
      |- 11:0:0:1 sdc 8:32 failed faulty running
      |- 13:0:0:1 sdg 8:96 active ready running
      |- 12:0:0:1 sdf 8:80 failed faulty running
      |- 14:0:0:0 sdh 8:112 failed faulty running
      |- 15:0:0:0 sdj 8:144 failed faulty running
      |- 16:0:0:0 sdk 8:160 active ready running
      |- 11:0:0:0 sdb 8:16 failed faulty running
      |- 13:0:0:0 sde 8:64 active ready running
      |- 12:0:0:0 sdd 8:48 failed faulty running
      |- 14:0:0:1 sdi 8:128 failed faulty running
      |- 15:0:0:1 sdl 8:176 failed faulty running
      `- 16:0:0:1 sdm 8:192 active ready running

Without rebooting the server, how can I best remove the stale paths and keep just the 2 main ones, 10.254.16.101 and 10.254.16.105?

I don't want to redo everything, as there are 4 hosts and hundreds of VMs.
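
For reference, this is roughly the cleanup I have in mind, written as a dry run so nothing is touched yet. It is only a sketch: the helper name `stale_session_cmds` and the `KEEP` variable are my own. It assumes that logging out of a session and deleting its node record with open-iscsi's `iscsiadm` is enough for multipathd to drop the faulty paths, which I have not confirmed on this setup. It reads the `iscsiadm -m session` output and prints the logout and delete commands for every portal that is not one of the two I want to keep.

```shell
#!/bin/sh
# Dry run: print the logout/delete commands for every iSCSI session whose
# portal IP is not listed in KEEP. Nothing is executed here; the printed
# commands are meant to be reviewed first.
KEEP="10.254.16.101 10.254.16.105"   # portals to keep (from the post above)

# Hypothetical helper: reads `iscsiadm -m session` output on stdin.
# Field 3 is "IP:port,tpgt" and field 4 is the target IQN.
stale_session_cmds() {
    awk -v keep="$KEEP" '
        BEGIN {
            n = split(keep, k, " ")
            for (i = 1; i <= n; i++) want[k[i]] = 1
        }
        $1 == "tcp:" {
            split($3, a, ",")      # a[1] = "IP:port"
            split(a[1], b, ":")    # b[1] = "IP"
            if (!(b[1] in want)) {
                printf "iscsiadm -m node -T %s -p %s --logout\n", $4, a[1]
                printf "iscsiadm -m node -T %s -p %s -o delete\n", $4, a[1]
            }
        }'
}

# Usage on the host (assumes iscsiadm from open-iscsi is installed):
#   iscsiadm -m session | stale_session_cmds
```

With the session list above, this should print commands for .102, .104, .106 and .108 only. If the old portals are still defined as iSCSI storage in Proxmox, I assume they would also have to be removed there, otherwise the host may simply log back in.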
