Hello.

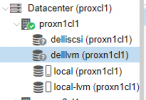

I have a Proxmox cluster and an ME4024 storage array.

I configured iSCSI multipath as described in the instructions.

As a test, I unplugged the cable from the storage port whose address is configured in Proxmox. The machine kept running until I turned it off.

The main question:

After I pull the cable, the device and the LVM storage are shown with question marks.

I think this means that iSCSI multipath is not working and does not switch to another path.

Is this the expected behaviour or not?

root@proxn1cl1:~# multipath -ll
Jul 13 11:24:01 | /etc/multipath.conf line 25, invalid keyword: polling_interval
mpath0 (3600c0ff0005050974bfde26001000000) dm-5 DellEMC,ME4
size=16T features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
`-+- policy='round-robin 0' prio=23 status=active
  |- 15:0:0:0 sdb 8:16 failed faulty running
  |- 16:0:0:0 sdc 8:32 active ready running
  |- 17:0:0:0 sdd 8:48 active ready running
  `- 18:0:0:0 sde 8:64 active ready running
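To make the path states in the output above easier to compare before and after pulling the cable, here is a minimal sketch that counts active and non-active paths. The function names `summarise_paths` and `sample_output` are made up for illustration, and the parsing assumes path lines keep the layout shown above (an H:C:T:L address followed by the device name, major:minor, and the state).

```shell
#!/bin/sh
# Count path states in `multipath -ll` output read from stdin.
# summarise_paths is a hypothetical helper, not part of multipath-tools.
summarise_paths() {
    awk '
        {
            # Find the H:C:T:L field (e.g. 15:0:0:0); the state is 3 fields later.
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^[0-9]+:[0-9]+:[0-9]+:[0-9]+$/) {
                    if ($(i + 3) == "active") active++; else failed++
                    break
                }
            }
        }
        END { printf "active=%d failed=%d\n", active, failed }
    '
}

# Sample copied from the output above, so the sketch runs without a SAN attached.
sample_output() {
cat <<'EOF'
mpath0 (3600c0ff0005050974bfde26001000000) dm-5 DellEMC,ME4
size=16T features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
`-+- policy='round-robin 0' prio=23 status=active
  |- 15:0:0:0 sdb 8:16 failed faulty running
  |- 16:0:0:0 sdc 8:32 active ready running
  |- 17:0:0:0 sdd 8:48 active ready running
  `- 18:0:0:0 sde 8:64 active ready running
EOF
}

sample_output | summarise_paths
```

On a live node the same function could presumably be fed with `multipath -ll | summarise_paths`. For the sample above it reports three active paths and one failed path.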

defaults {
    polling_interval 2
    path_selector "round-robin 0"
    path_grouping_policy multibus
    getuid_callout "/lib/udev/scsi_id -g -u -d /dev/%n"
    rr_min_io 100
    failback immediate
    no_path_retry queue
}

blacklist {
    wwid .*
}

blacklist_exceptions {
    wwid 3600c0ff0005050974bfde26001000000
}

devices {
    device {
        vendor "DELL"
        product "MD32xxi"
        path_grouping_policy group_by_prio
        prio rdac
        polling_interval 5
        path_checker rdac
        path_selector "round-robin 0"
        hardware_handler "1 rdac"
        failback immediate
        features "2 pg_init_retries 50"
        no_path_retry 30
        rr_min_io 100
    }
}

