So, with my NFS issues behind me (http://forum.proxmox.com/threads/7563-ISO-and-Templates), I decided to move on and set up some image/container storage using our iSCSI OpenFiler storage. I created the iSCSI storage device with no issues, other than the following messages being logged continually to syslog:

sd 8:0:0:0: [sdc] Very big device. Trying to use READ CAPACITY(16).

sdc: detected capacity change from 0 to 2199023255552

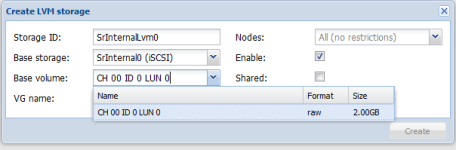

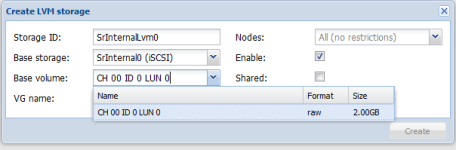
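For what it's worth, the logged capacity decodes neatly. Below is a minimal sketch, assuming the LUN uses 512-byte logical blocks (not confirmed from the OpenFiler side). It shows that 2199023255552 bytes is exactly 2 TiB, which is 2^32 blocks. The last LBA is therefore 0xFFFFFFFF, the value READ CAPACITY(10) uses to mean "too large, ask with READ CAPACITY(16)". So the "Very big device" notice looks expected for a LUN of exactly this size. The constant and function names are illustrative only.

```python
# Decode the capacity the kernel logged for sdc.
# Assumption: the LUN uses 512-byte logical blocks (the common default).
CAPACITY_BYTES = 2199023255552   # from "detected capacity change from 0 to ..."
BLOCK_SIZE = 512                 # assumed logical block size in bytes

# READ CAPACITY(10) returns the *last* LBA in a 32-bit field; a returned
# value of 0xFFFFFFFF means the device is too large for that field.
RC10_OVERFLOW = 0xFFFFFFFF


def describe(capacity_bytes: int, block_size: int) -> dict:
    """Break a byte capacity down into binary units and SCSI block counts."""
    blocks = capacity_bytes // block_size
    last_lba = blocks - 1
    return {
        "tib": capacity_bytes / 1024**4,
        "blocks": blocks,
        "last_lba": last_lba,
        # True when the 32-bit READ CAPACITY(10) field cannot hold the last LBA
        "needs_rc16": last_lba >= RC10_OVERFLOW,
    }


if __name__ == "__main__":
    info = describe(CAPACITY_BYTES, BLOCK_SIZE)
    print(f"{info['tib']:.2f} TiB")   # 2.00 TiB
    print(hex(info["blocks"]))         # 0x100000000
    print(hex(info["last_lba"]))       # 0xffffffff
    print(info["needs_rc16"])          # True
```

This only explains why the kernel falls back to READ CAPACITY(16) for this device; it says nothing about why the messages keep repeating.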

These messages may be related to a bug: when we select the base volume, its size is shown as 2.00GB instead of the actual 2.00TB:

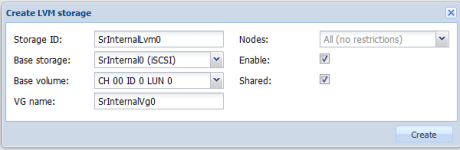

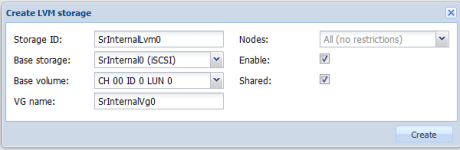
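The displayed size is off by exactly a factor of 1024. That is what you would get if some layer reported the size in KiB and the display code then formatted that number as if it were bytes. The sketch below is purely a hypothesis to show the numbers fit; it is not taken from the Proxmox source. The `format_size` helper is hypothetical.

```python
# Hypothetical illustration of a unit mix-up that turns 2 TiB into "2.00GB".
# Assumption: the size arrives in KiB (1024-byte units) but is formatted as
# bytes. NOT taken from the Proxmox code; it only shows the observed value fits.

UNITS = ["B", "KB", "MB", "GB", "TB"]


def format_size(n_bytes: float) -> str:
    """Format a byte count using 1024-based steps, like the GUI labels."""
    i = 0
    while n_bytes >= 1024 and i < len(UNITS) - 1:
        n_bytes /= 1024
        i += 1
    return f"{n_bytes:.2f}{UNITS[i]}"


actual_bytes = 2199023255552          # size the kernel logged for sdc
size_in_kib = actual_bytes // 1024    # same size expressed in KiB

print(format_size(actual_bytes))  # 2.00TB  (correct)
print(format_size(size_in_kib))   # 2.00GB  (KiB value mistaken for bytes)
```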

Following that, we assign a VG name, select Shared, and click Create. The little processing indicator is displayed for about 30 seconds, and then we are simply brought back to the same window. Clicking Create again does the same thing.

I cannot seem to get past this step and create the LVM storage; the only option is to close the window with the X.
