Hello experts,

I recently took on an infrastructure rebuild project, and I'm stuck: I can't add or mount the previously created LUNs/data pools in PVE.

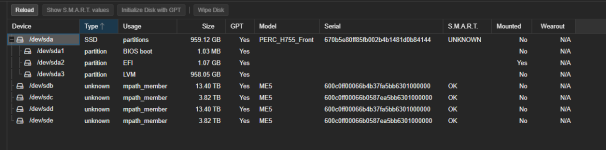

I have 2x Dell PowerEdge R650 and 1x Dell PowerVault ME5024.

I haven't formed the cluster yet; I'm trying to add the PowerVault as storage from the first host.

Either something is misconfigured or I'm missing a crucial step, but I can't figure out which. I'm hoping someone can point me in the right direction.

I could wipe, reformat, and re-create the LUNs, but the previous team may have left data on them that I don't want to discard. The environment previously ran VMware.
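Before wiping anything, it would help to know what is actually on each LUN. A minimal, read-only sketch, assuming `blkid` from util-linux is available; the function name and the device path in the usage comment are hypothetical placeholders, not my real device names:

```shell
#!/usr/bin/env bash
# Print the signature(s) blkid finds on a block device or image file.
# -p probes the device directly (low-level, read-only) instead of using
# the blkid cache; -o export prints one KEY=value pair per line.
# Only TYPE (filesystem/volume) and PTTYPE (partition table) are kept.
# Prints "none" if nothing recognisable is found.
lun_signature() {
    local dev="$1"
    local sig
    sig=$(blkid -p -o export "$dev" 2>/dev/null | grep -E '^(PT)?TYPE=') || true
    echo "${sig:-none}"
}

# Hypothetical usage (replace with the real multipath device name):
# lun_signature /dev/mapper/mpatha
```

A `PTTYPE=` result only means the LUN carries a partition table (likely for a LUN that used to be a VMware datastore); the individual partitions can then be probed the same way.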

The ME5024 is connected directly to the host via an iSCSI SAS cable.

I upgraded PVE to version 9.

`open-iscsi` is installed.
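To compare the host's initiator name with what the ME5024 has registered: open-iscsi keeps it in `/etc/iscsi/initiatorname.iscsi` as an `InitiatorName=` line. A small helper to print it (the function name is my own; the path is the Debian/open-iscsi default and can be overridden with an argument):

```shell
#!/usr/bin/env bash
# Print the iSCSI initiator IQN configured for this host.
# Defaults to the open-iscsi file used on Debian-based systems such as PVE;
# another path can be passed as the first argument.
initiator_name() {
    local file="${1:-/etc/iscsi/initiatorname.iscsi}"
    # Comment lines start with '#', so only the real setting matches.
    sed -n 's/^InitiatorName=//p' "$file" | head -n 1
}

# Usage:
# initiator_name
```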

I've read the multipath and iSCSI instructions at https://pve.proxmox.com/wiki/Multipath#Introduction, as well as previous forum posts, but I can't get it working for my setup.

`nc` returns "Connection refused" for both IP addresses.

`iscsiadm` discovery also returns "Connection refused".
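The connectivity checks above can be repeated for every portal address in one go. A minimal sketch that uses bash's built-in `/dev/tcp` redirection plus coreutils `timeout` in place of `nc`; the function names are hypothetical, and since only 10.57.70.200 appears in this post, the second address in the usage line is a placeholder:

```shell
#!/usr/bin/env bash
# Try a TCP connection to a host/port (iSCSI default port 3260).
# bash opens /dev/tcp/<host>/<port> as a socket; the attempt is
# abandoned after 3 seconds. Returns 0 only if the connection opens.
probe_port() {
    local host="$1" port="${2:-3260}"
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}

# Probe port 3260 on every address given and report the result.
probe_portals() {
    local host
    for host in "$@"; do
        if probe_port "$host" 3260; then
            echo "$host:3260 open"
        else
            echo "$host:3260 refused or unreachable"
        fi
    done
}

# Usage (second address is a placeholder):
# probe_portals 10.57.70.200 <second-portal-ip>
```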

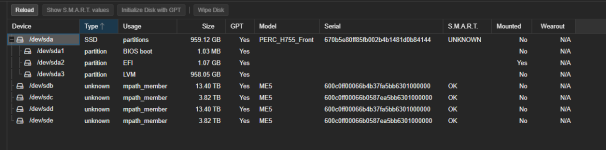

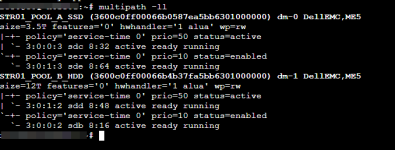

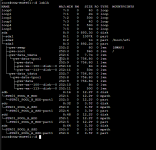

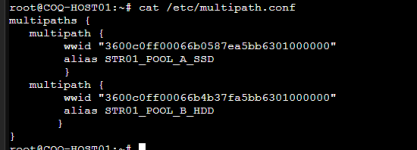

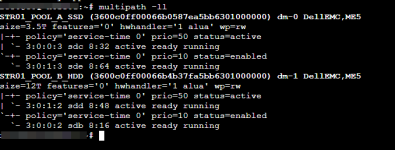

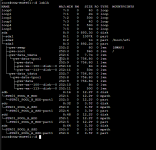

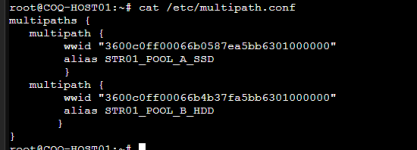

The multipath devices are visible and appear to be in the active/ready/running state.

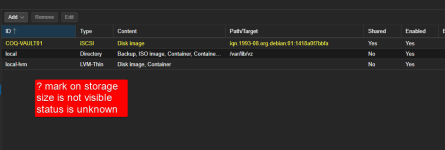

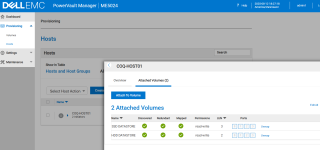

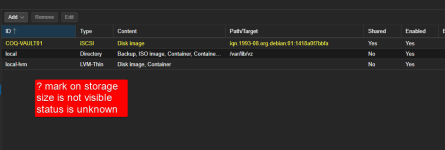

Datacenter > Storage

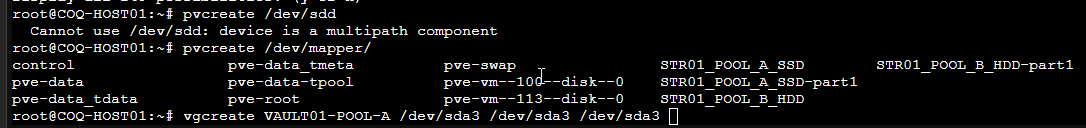

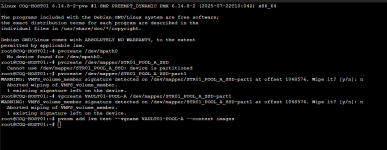

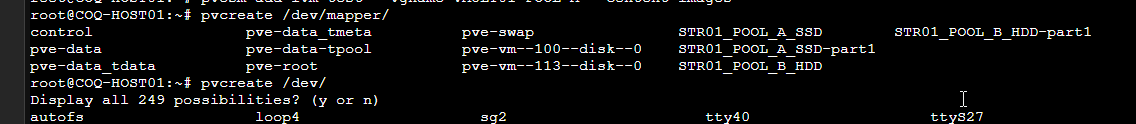

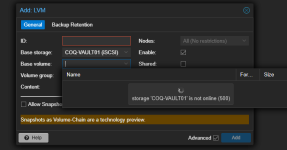

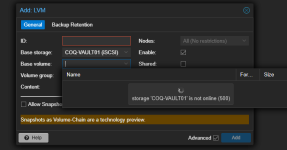

Datacenter > Storage > Add > LVM

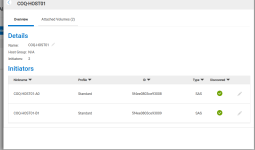

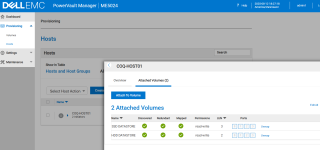

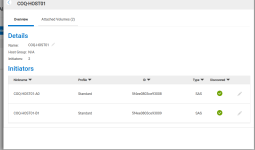

The PVE initiator name is different, but the Dell storage won't let me change the initiator name. The article below confirms that I'm using the correct naming convention, yet the storage still won't accept the PVE host's initiator name.

https://www.dell.com/support/kbdoc/...-name-must-use-standard-iqn-format-convention
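For reference, a rough way to check an initiator name against the standard `iqn.yyyy-mm.reversed-domain[:identifier]` layout. This is my own approximation of the RFC 3720 naming rules, not Dell's actual validation logic, and the function name is made up:

```shell
#!/usr/bin/env bash
# Rough check of an iSCSI qualified name (IQN):
#   iqn.<yyyy-mm>.<reversed domain>[:<optional identifier>]
# Accepts only lowercase letters, digits, '.', '-' and ':' and
# at most 223 characters (the RFC 3720 length limit).
is_valid_iqn() {
    local name="$1"
    local re='^iqn\.[0-9]{4}-(0[1-9]|1[0-2])\.[a-z0-9]([a-z0-9.-]*[a-z0-9])?(:[a-z0-9.:-]+)?$'
    (( ${#name} <= 223 )) && [[ "$name" =~ $re ]]
}

# Usage (example IQN in the Debian default format):
# is_valid_iqn iqn.1993-08.org.debian:01:abc123 && echo "looks valid"
```

Names with uppercase letters, spaces, or an invalid month fail this check.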

Thank you in advance!


Full output of the connectivity tests (only 10.57.70.200 is shown). `nc` to the iSCSI port:

```
nc 10.57.70.200 3260
(UNKNOWN) [10.57.70.200] 3260 (iscsi-target) : Connection refused
```

`iscsiadm` discovery also returns "Connection refused":

```
iscsiadm -d 3 -m discovery -t st -p 10.57.70.200
iscsiadm: ip 10.57.70.200, port -1, tgpt -1
iscsiadm: Max file limits 1024 524288
iscsiadm: starting sendtargets discovery, address 10.57.70.200:3260,
iscsiadm: connecting to 10.57.70.200:3260
iscsiadm: cannot make connection to 10.57.70.200: Connection refused
iscsiadm: connecting to 10.57.70.200:3260
iscsiadm: cannot make connection to 10.57.70.200: Connection refused
iscsiadm: connecting to 10.57.70.200:3260
iscsiadm: cannot make connection to 10.57.70.200: Connection refused
iscsiadm: connecting to 10.57.70.200:3260
iscsiadm: cannot make connection to 10.57.70.200: Connection refused
iscsiadm: connecting to 10.57.70.200:3260
iscsiadm: cannot make connection to 10.57.70.200: Connection refused
iscsiadm: connecting to 10.57.70.200:3260
iscsiadm: cannot make connection to 10.57.70.200: Connection refused
iscsiadm: connection login retries (reopen_max) 5 exceeded
iscsiadm: Could not perform SendTargets discovery: iSCSI PDU timed out
```
