Hello,

I am running into an odd issue where my Synology LUN is showing "?". I added a second host and created a cluster today. The new host connected fine, but the old one still shows the same "?". I have rebooted both hosts and am trying to figure out why H1 (host 1) keeps showing the LUN as unavailable/offline, even though all the VMs are currently running and backing up from that LUN.

Someone recommended THIS, but I find it hard to believe that PVE wouldn't have multipath enabled by default. The Synology is already configured for multipath.

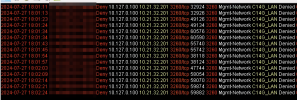
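Rather than assuming multipath is on, it can be checked on each host. This is a minimal sketch that assumes multipath support on a Debian-based PVE host comes from the `multipath-tools` package (which provides the `multipath` command and the `multipathd` daemon); `multipath_status` is a hypothetical helper name. Run it as root on H1 and H2:

```shell
#!/bin/sh
# Minimal sketch: report whether multipath tooling is present on this host.
# Assumption: multipath comes from Debian's multipath-tools package, which
# ships the `multipath` CLI and the multipathd daemon.
# multipath_status is a hypothetical helper, not a PVE command.

multipath_status() {
    if ! command -v multipath >/dev/null 2>&1; then
        echo "missing"          # binary not found in PATH
    elif command -v systemctl >/dev/null 2>&1 &&
         systemctl is-active --quiet multipathd 2>/dev/null; then
        echo "running"          # tools installed and daemon active
    else
        echo "installed"        # tools present, daemon not confirmed running
    fi
}

multipath_status
```

If it reports `running`, `multipath -ll` lists the multipath maps and the paths behind each one.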

Here are results from my troubleshooting:

If I run on H2 (no VMs currently running from it) I get

storage.cfg file is IDENTICAL

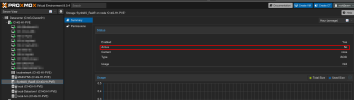

and here is the multipath support check in Synology LUN

I am running into an odd issue where my SYNOLOGY LUN is showing "?". I added a 2nd host/created a cluster today and the new host connected fine, but the old one still shows the same ?. I have rebooted both hosts and am trying to figure out why H1 (host 1) is continuing to show that the LUN is unavailable/offline but all the VMs are currently running and backing up from then LUN.

Someone recommended that THIS but I find it difficult to believe that PVE wouldn't have multipath enabled by default. The synology is already configured for multipath.

Here are results from my troubleshooting:

```
root@C14G-H1-PVE:~# cat /etc/pve/storage.cfg
dir: local
        path /var/lib/vz
        content backup,iso
        maxfiles 1
        shared 0

lvmthin: local-lvm
        thinpool data
        vgname pve
        content rootdir,images

lvm: local-Datastore1
        vgname local-Datastore1
        content rootdir,images
        nodes C14G-H1-PVE
        shared 0

iscsi: SynNAS_Raid5
        portal 10.127.0.150
        target iqn.2000-01.com.synology:SYNSAN1-c14g.Target-1.cb16691f22
        content none

nfs: MAIN-FNS
        export /volume1/MAIN-NFS
        path /mnt/pve/MAIN-FNS
        server 10.127.0.150
        content iso,snippets,rootdir,backup,images,vztmpl
        maxfiles 7
```
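To confirm that the `iscsi` entry points at the same portal and target that the scans report, a small helper can pull one property out of `storage.cfg`. This is a sketch assuming the layout shown above (a `type: id` header at the start of a line, followed by indented `key value` lines); `cfg_get` is a hypothetical helper, not a PVE tool:

```shell
#!/bin/sh
# Sketch: print one property of one storage entry from a PVE storage.cfg.
# Assumed layout: "type: id" header at column 0, then indented "key value"
# lines. cfg_get is a hypothetical helper name.
# Usage: cfg_get FILE TYPE ID KEY
cfg_get() {
    awk -v hdr="$2: $3" -v key="$4" '
        /^[^ \t]/ { insec = ($0 == hdr); next }   # header line: are we in the wanted section?
        insec && $1 == key {                       # indented property line with the wanted key
            sub(/^[ \t]*[^ \t]+[ \t]+/, "")        # strip indentation and the key itself
            print
            exit
        }
    ' "$1"
}
```

With the config above, `cfg_get /etc/pve/storage.cfg iscsi SynNAS_Raid5 target` prints the Synology IQN, which can be compared with the first column of the `pvesm scan iscsi` output.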

```
root@C14G-H1-PVE:~# pvesm scan iscsi 10.127.0.150
iscsiadm: Could not stat /etc/iscsi/nodes//,3260,-1/default to delete node: No such file or directory
iscsiadm: Could not add/update [tcp:[hw=,ip=,net_if=,iscsi_if=default] 10.127.0.150,3260,1 iqn.2000-01.com.synology:SYNSAN1-c14g.Target-1.cb16691f22]
iscsiadm: Could not stat /etc/iscsi/nodes//,3260,-1/default to delete node: No such file or directory
iscsiadm: Could not add/update [tcp:[hw=,ip=,net_if=,iscsi_if=default] fe80::211:32ff:fe6a:19b5,3260,1 iqn.2000-01.com.synology:SYNSAN1-c14g.Target-1.cb16691f22]
iqn.2000-01.com.synology:SYNSAN1-c14g.Target-1.cb16691f22 10.127.0.150:3260,[fe80::211:32ff:fe6a:19b5]:3260
```
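On H1 the scan still finds the target, but `iscsiadm` complains while updating its node records under `/etc/iscsi/nodes`. One way to compare the hosts is to list the records each one has on disk. This read-only sketch assumes the `<nodes dir>/<target>/<ip>,<port>,<tpgt>/<iface>` layout implied by the paths in the errors above; `list_node_records` is a hypothetical helper:

```shell
#!/bin/sh
# Read-only sketch: list open-iscsi node records on disk as "target portal iface".
# Assumed layout (matching the paths in the iscsiadm errors):
#   NODES_DIR/<target-iqn>/<ip>,<port>,<tpgt>/<iface>
# list_node_records is a hypothetical helper; it changes nothing.
list_node_records() {
    nodes_dir=${1:-/etc/iscsi/nodes}
    [ -d "$nodes_dir" ] || return 0
    for rec in "$nodes_dir"/*/*/*; do
        [ -e "$rec" ] || continue          # glob matched nothing
        iface=${rec##*/}                   # last path component
        portal_dir=${rec%/*}
        portal=${portal_dir##*/}           # "<ip>,<port>,<tpgt>"
        target_dir=${portal_dir%/*}
        target=${target_dir##*/}           # target IQN
        echo "$target $portal $iface"
    done
}

list_node_records
```

Running it on H1 and H2 and diffing the two outputs may reveal a stale or malformed record on H1; `iscsiadm -m node` gives a similar listing through the tool itself.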

```
root@C14G-H1-PVE:~# iscsiadm -m session -P 1 | grep 'iSCSI.*State'
iscsiadm: No active sessions.
```

If I run on H2 (no VMs currently running from it), I get:

```
iscsiadm -m session -P 1 | grep 'iSCSI.*State'
```
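H1 reports no active sessions at all. To compare the hosts on the same terms, the plain `iscsiadm -m session` listing can be reduced to a per-target session count. A sketch, assuming the usual open-iscsi line shape `tcp: [SID] IP:PORT,TPGT TARGET` (sometimes followed by `(non-flash)`); `count_sessions` is a hypothetical helper:

```shell
#!/bin/sh
# Sketch: count iSCSI sessions to one target from `iscsiadm -m session` output
# read on stdin. Assumed line shape (open-iscsi):
#   tcp: [SID] IP:PORT,TPGT TARGET-IQN [(non-flash)]
# count_sessions is a hypothetical helper name.
count_sessions() {
    awk -v tgt="$1" '$1 == "tcp:" && $4 == tgt { n++ } END { print n + 0 }'
}

TARGET=iqn.2000-01.com.synology:SYNSAN1-c14g.Target-1.cb16691f22
# "No active sessions." is written to stderr, so that case simply counts as 0.
iscsiadm -m session 2>/dev/null | count_sessions "$TARGET"
```

A count of 0 on H1 and 1 or more on H2 would indicate that only H2 is currently logged in to the target.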

```
root@C14G-H2-PVE:~# pvesm scan iscsi 10.127.0.150
iqn.2000-01.com.synology:SYNSAN1-c14g.Target-1.cb16691f22 10.127.0.150:3260,[fe80::211:32ff:fe6a:19b5]:3260
```

The storage.cfg file is IDENTICAL on both hosts.
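In a PVE cluster `/etc/pve/storage.cfg` lives on the shared cluster filesystem (pmxcfs), so identical contents on both nodes are expected; what can differ is each node's runtime view of the storage. A sketch that extracts one storage's status from `pvesm status`, assuming its column order is `Name Type Status Total Used Available %`; `storage_state` is a hypothetical helper:

```shell
#!/bin/sh
# Sketch: print the Status column for one storage from `pvesm status` output
# read on stdin. Assumed columns: Name Type Status Total Used Available %
# storage_state is a hypothetical helper name.
storage_state() {
    awk -v name="$1" '
        NR > 1 && $1 == name { print $3; found = 1 }   # skip header, match by name
        END { if (!found) print "not-listed" }
    '
}

# Run on each node and compare the results.
pvesm status 2>/dev/null | storage_state SynNAS_Raid5
```

Comparing the result on H1 and H2 shows whether H1 itself considers the storage active, independent of what the GUI icon shows.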

And here is the multipath support check on the Synology LUN: