I know I've been able to get k3s running on my LXC containers in the past. This was when I just ran a straight-up OS and set up LXD myself.

Then I decided to try and be clever, wiped my box, and installed Proxmox in order to, among other things, benefit from the support for LXC containers.

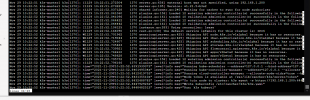

Between the well-documented problems with /dev/kmsg (mistakenly marked as resolved in this forum) and other issues involving cgroups, I just want to know: is anyone here actually running Kubernetes on their containers? I have a sneaking suspicion everyone bailed on this approach and went to the VM side of things. Please prove me wrong.
