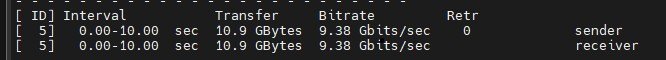

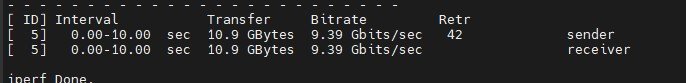

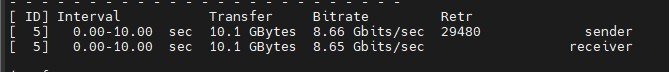

I was benchmarking my virtualized TrueNAS setup and noticed some unusual behavior on my main Proxmox host. When I run iperf3 between two hosts on the same VLAN (but not on the same physical server), traffic leaving the main Proxmox box is slower than traffic coming in:

```
louie@Admin:~$ iperf3 -c 192.168.50.3
Connecting to host 192.168.50.3, port 5201
[  5] local 192.168.50.36 port 33222 connected to 192.168.50.3 port 5201
[ ID] Interval           Transfer     Bitrate         Retr  Cwnd
[  5]   0.00-1.00   sec  1.09 GBytes  9.39 Gbits/sec   11   3.56 MBytes
[  5]   1.00-2.00   sec  1.09 GBytes  9.39 Gbits/sec    0   3.78 MBytes
[  5]   2.00-3.00   sec  1.09 GBytes  9.39 Gbits/sec    8   3.98 MBytes
[  5]   3.00-4.00   sec  1.09 GBytes  9.39 Gbits/sec    0   4.00 MBytes
[  5]   4.00-5.00   sec  1.09 GBytes  9.39 Gbits/sec    0   4.00 MBytes
[  5]   5.00-6.00   sec  1.09 GBytes  9.39 Gbits/sec    0   4.00 MBytes
[  5]   6.00-7.00   sec  1.09 GBytes  9.39 Gbits/sec    0   4.00 MBytes
[  5]   7.00-8.00   sec  1.09 GBytes  9.39 Gbits/sec    0   4.00 MBytes
[  5]   8.00-9.00   sec  1.08 GBytes  9.26 Gbits/sec   52   2.93 MBytes
[  5]   9.00-10.00  sec  1.09 GBytes  9.38 Gbits/sec    0   3.20 MBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bitrate         Retr
[  5]   0.00-10.00  sec  10.9 GBytes  9.38 Gbits/sec   71             sender
[  5]   0.00-10.00  sec  10.9 GBytes  9.37 Gbits/sec                  receiver
iperf Done.
```

```
louie@Admin:~$ iperf3 -c 192.168.50.3 -R
Connecting to host 192.168.50.3, port 5201
Reverse mode, remote host 192.168.50.3 is sending
[  5] local 192.168.50.36 port 42202 connected to 192.168.50.3 port 5201
[ ID] Interval           Transfer     Bitrate
[  5]   0.00-1.00   sec   980 MBytes  8.21 Gbits/sec
[  5]   1.00-2.00   sec   962 MBytes  8.07 Gbits/sec
[  5]   2.00-3.00   sec   978 MBytes  8.21 Gbits/sec
[  5]   3.00-4.00   sec   978 MBytes  8.21 Gbits/sec
[  5]   4.00-5.00   sec   945 MBytes  7.93 Gbits/sec
[  5]   5.00-6.00   sec   939 MBytes  7.88 Gbits/sec
[  5]   6.00-7.00   sec   970 MBytes  8.13 Gbits/sec
[  5]   7.00-8.00   sec   995 MBytes  8.35 Gbits/sec
[  5]   8.00-9.00   sec   979 MBytes  8.21 Gbits/sec
[  5]   9.00-10.00  sec   991 MBytes  8.31 Gbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bitrate         Retr
[  5]   0.00-10.00  sec  9.49 GBytes  8.15 Gbits/sec  19290             sender
[  5]   0.00-10.00  sec  9.49 GBytes  8.15 Gbits/sec                  receiver
```
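To put the two `Retr` totals on the same scale, here is a rough Python sketch that turns each sender summary line into a retransmit percentage. It assumes a 1448-byte MSS (a standard 1500-byte MTU with TCP timestamps; jumbo frames would change it) and that iperf3's "GBytes" are binary units (1024³ bytes). The regex and the `retransmit_rate` name are my own, not anything from iperf3.

```python
import re

# Sender summary lines copied from the two runs above.
SUMMARY = """\
[  5]   0.00-10.00  sec  10.9 GBytes  9.38 Gbits/sec   71             sender
[  5]   0.00-10.00  sec  9.49 GBytes  8.15 Gbits/sec  19290             sender
"""

# Assumption: 1448-byte MSS (1500 MTU - 20 IP - 20 TCP - 12 timestamp option).
MSS_BYTES = 1448

# Assumption: iperf3 byte totals use binary units.
UNITS = {"KBytes": 1024, "MBytes": 1024**2, "GBytes": 1024**3}

LINE_RE = re.compile(
    r"(?P<amount>[\d.]+)\s+(?P<unit>[KMG]Bytes)\s+"
    r"(?P<rate>[\d.]+)\s+Gbits/sec\s+(?P<retr>\d+)\s+sender"
)


def retransmit_rate(line: str) -> float:
    """Return retransmits as a percentage of the estimated segments sent."""
    m = LINE_RE.search(line)
    if m is None:
        raise ValueError(f"not an iperf3 sender summary line: {line!r}")
    total_bytes = float(m["amount"]) * UNITS[m["unit"]]
    segments = total_bytes / MSS_BYTES  # rough estimate, full-size segments only
    return 100.0 * int(m["retr"]) / segments


for line in SUMMARY.splitlines():
    print(f"{retransmit_rate(line):.4f}% of segments retransmitted")
```

Under those assumptions the `-R` run comes out to roughly 0.27% of segments retransmitted, versus under 0.001% in the forward run.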

I tried two different NICs with the same result (both are Intel X520-DA2 clones from Amazon). In my setup, the main Proxmox host is connected to my switch by two AOC (active optical cable) links bonded with LACP. The other host is connected by a single 10GBASE-T link (no LAG). It seems like outbound traffic from my main node is generating a lot of TCP retransmits (19290 in the `-R` run above, versus 71 in the forward run).
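One thing worth keeping in mind with the LACP bond: the Linux bonding driver picks the outgoing member link per flow using a hash (the default `xmit_hash_policy` is `layer2`; `layer3+4` also mixes in ports). A single TCP stream therefore always leaves on one 10G link, while `-P 5` opens several flows that can be spread out. The sketch below is a simplified model loosely following the `layer3+4` description in the kernel bonding documentation. The real driver differs in details such as byte order and field extraction, and the switch applies its own hash to traffic heading toward the host. The function name and the extra source ports are hypothetical.

```python
import ipaddress


def layer34_link(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
                 n_links: int) -> int:
    """Simplified, illustrative model of a layer3+4 transmit hash.

    Not the kernel's exact code; it only shows that the chosen link is a
    deterministic function of the flow's addresses and ports.
    """
    if n_links < 1:
        raise ValueError("n_links must be at least 1")
    # Both 16-bit ports packed into one 32-bit word.
    h = ((src_port & 0xFFFF) << 16) | (dst_port & 0xFFFF)
    # Mix in the IPv4 source and destination addresses.
    h ^= int(ipaddress.IPv4Address(src_ip)) ^ int(ipaddress.IPv4Address(dst_ip))
    # Fold the high bits down, then drop the lowest bit.
    h ^= h >> 16
    h ^= h >> 8
    h >>= 1
    return h % n_links


# A single stream: the 4-tuple never changes, so neither does the link.
print("single stream -> link",
      layer34_link("192.168.50.3", "192.168.50.36", 5201, 42202, 2))

# Parallel streams: each gets its own ephemeral port (ports after 42202
# are made up), so flows can land on different links.
for port in range(42202, 42207):
    print(port, "-> link",
          layer34_link("192.168.50.3", "192.168.50.36", 5201, port, 2))
```

Since the other host has only one 10GBASE-T link, even a perfectly spread `-P 5` test would top out near 10 Gbit/s, which matches the 9.35 Gbits/sec figure.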

Is this just a peculiarity of the Intel X520 cards, or is there a setting I could tweak to reduce the retransmits and bring inbound and outbound throughput to roughly the same speed? If I run `iperf3 -c 192.168.50.XX -P 5` (five parallel streams), I get 9.35 Gbits/sec in both directions.

Most of my network traffic is data moving over NFS. Does NFS use multiple parallel streams (multithreading), or does it behave more like a single iperf3 stream?

Also, I don't see this behavior on my other Proxmox node, which has a single 10GBASE-T NIC (an Aquantia chipset, I believe).
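On the NFS question: as far as I know, the Linux NFS client sends concurrent RPCs from many threads over a single TCP connection per server by default, so a large copy may look more like one iperf3 stream than like `-P 5`. Linux 5.3 and later accept an `nconnect=N` mount option that opens several connections to the same server. The hypothetical helper below checks what the current mounts use by reading `/proc/mounts`. It assumes the kernel lists `nconnect=` among the mount options when it is set and treats its absence as one connection.

```python
from __future__ import annotations

from pathlib import Path


def nfs_connections(mounts_text: str) -> dict[str, int]:
    """Map each NFS mount point to its nconnect value (1 if not listed)."""
    result: dict[str, int] = {}
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue
        _device, mount_point, fs_type, options = fields[:4]
        if fs_type not in ("nfs", "nfs4"):
            continue
        conns = 1  # assumed default: one TCP connection to the server
        for opt in options.split(","):
            if opt.startswith("nconnect="):
                conns = int(opt.split("=", 1)[1])
        result[mount_point] = conns
    return result


if __name__ == "__main__":
    mounts = Path("/proc/mounts")
    if mounts.exists():
        for mp, n in nfs_connections(mounts.read_text()).items():
            print(f"{mp}: {n} TCP connection(s)")
```

If it reports 1, comparing a copy with and without `nconnect` on the mount would show whether NFS hits the same single-stream limit as iperf3.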