I just stumbled over something very weird with LXC containers, but I bet it happens with VMs as well:

I have 2 identical nodes in a cluster:

- both are connected over 2x25G in LACP (NIC: Intel E810)

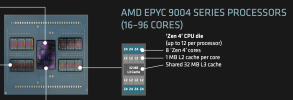

- CPU: Genoa 9374F

- RAM: 12x64GB (All Channels 1DPC) 768GB

- Storage: ZFS Raid10 (8x Micron 7450 Max)

So these nodes are anything but slow. PVE is working great; this is actually the first issue I have had.

I have more than 10 PVE servers and I have been around here pretty much forever.

--> What I mean is, everyone should have this issue! But probably no one has noticed, or even had the idea to test it.

Until today I assumed that an iperf3 test, or network speed in general, should be insanely fast between 2 LXCs/VMs on the same node.

After all, the packets don't leave the node (if both containers/VMs are in the same network), which means they never actually leave the vmbridge.

But this is absolutely not the case...

With iperf3 (no special arguments, just -s and -c) I get the following:

- Both LXCs on the same node: 14.1 Gbits/sec

- Each LXC on a separate node: 20.5 Gbits/sec

It feels like my understanding is broken now, because between nodes the packets leave the host, so there is at least the hard limit of 25G.

But on the same host there is no such limit, so I expected to see at least something like 40 Gbits/sec.

Does anyone have a clue, or has anyone already tried an iperf3 test like this?

Please do at least 3-5 test runs.
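If it helps anyone repeat this, here is a rough Python sketch for running several iperf3 tests in a row and summarizing them. It assumes iperf3 is installed in the client container and that `iperf3 -s` is already running in the other one. The function names and the address `10.0.0.2` are just placeholders I made up, and it relies on iperf3's JSON output (`-J`) reporting the receiver-side rate under `end.sum_received.bits_per_second`.

```python
import json
import statistics
import subprocess


def received_gbits(report: dict) -> float:
    """Return receiver-side throughput in Gbit/s from a parsed iperf3 JSON report."""
    return report["end"]["sum_received"]["bits_per_second"] / 1e9


def run_once(server: str, seconds: int = 10) -> float:
    """Run a single iperf3 client test against `server` and return Gbit/s."""
    out = subprocess.run(
        ["iperf3", "-c", server, "-t", str(seconds), "-J"],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    return received_gbits(json.loads(out))


def summarize(results: list[float]) -> dict:
    """Collapse several runs into min / max / mean so outliers are visible."""
    return {
        "min": min(results),
        "max": max(results),
        "mean": statistics.mean(results),
    }
```

For example, `summarize([run_once("10.0.0.2") for _ in range(5)])` would give the spread over 5 runs (with `10.0.0.2` replaced by the IP of the container running the server).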

The thing is, these are the first servers with 25G links that I have; all the others have 10G, and 10G was never an issue.

But I never had the idea to run iperf3 between 2 containers or VMs on the same node xD

Cheers
