Hi All,

I recently set up a VM running Ubuntu Server.

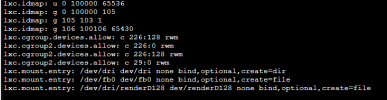

I want to pass the GPU through to the VM so that Frigate can use it.

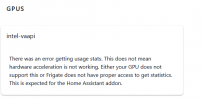

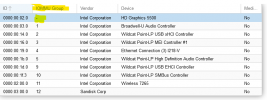

Unfortunately, when I try to pass through the Intel HD Graphics 5000, I get the error stated in the topic title.

After some digging, I found the following:

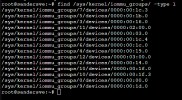

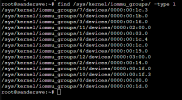

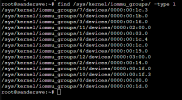

The Intel card has no IOMMU group number, and no corresponding group is found when running:

find /sys/kernel/iommu_groups/ -type l
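To make that check easier to repeat, here is a minimal sketch that turns the same sysfs layout into a per-device lookup. The helper names `list_iommu_groups` and `iommu_group_of` are made up for illustration, and the address `0000:00:02.0` in the usage note is only an assumption (it is where Intel integrated graphics usually sits); check `lspci` for the real one.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical, not part of any tool.

# Print one "group<TAB>pci-address" line per device that has an IOMMU group.
# The root directory is a parameter so the function can be pointed at a
# test tree; it defaults to the real sysfs location.
list_iommu_groups() {
    root="${1:-/sys/kernel/iommu_groups}"
    # Each member is a symlink at <root>/<group>/devices/<pci-address>.
    for link in "$root"/*/devices/*; do
        # Skip the literal pattern when the glob matched nothing.
        [ -e "$link" ] || [ -L "$link" ] || continue
        group="${link#"$root"/}"   # strip the root prefix
        group="${group%%/*}"       # keep only the group number
        printf '%s\t%s\n' "$group" "${link##*/}"
    done
}

# Print the group number of one PCI address, or fail if it has none.
iommu_group_of() {
    addr="$1"
    root="${2:-/sys/kernel/iommu_groups}"
    match="$(list_iommu_groups "$root" | awk -F '\t' -v a="$addr" '$2 == a { print $1 }')"
    if [ -n "$match" ]; then
        echo "$match"
    else
        echo "no IOMMU group for $addr" >&2
        return 1
    fi
}
```

After sourcing the file, `iommu_group_of 0000:00:02.0` prints the group number if there is one. If `list_iommu_groups` prints nothing at all, no device has a group, which matches the empty `find` output described above.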

I checked the BIOS and VT-d appears to be enabled. I've also disabled legacy boot in the BIOS.
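One more thing that could be checked on the host: enabling VT-d in the BIOS is not always enough, because on kernels that do not turn the Intel IOMMU on by default it also has to be requested with the `intel_iommu=on` boot parameter. A rough sketch of that check follows; `has_intel_iommu_on` is a hypothetical helper name, and whether this is the actual cause here is unconfirmed.

```shell
#!/bin/sh
# Sketch only: has_intel_iommu_on is a hypothetical helper name.

# Succeed if the kernel command line contains the exact parameter
# intel_iommu=on. Reads /proc/cmdline unless another file is given.
has_intel_iommu_on() {
    cmdline_file="${1:-/proc/cmdline}"
    # Put each whitespace-separated parameter on its own line,
    # then look for an exact whole-line match.
    tr -s ' \t' '\n\n' < "$cmdline_file" | grep -qx 'intel_iommu=on'
}
```

Usage would be along the lines of `has_intel_iommu_on && echo "requested" || echo "not on the command line"`. A missing parameter does not prove the IOMMU is off, since some kernels are built with it enabled by default.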

I hope to get this working; otherwise my current Intel NUC5i3RYH will be more or less useless...
