Hi all,

I have been running Proxmox on a Hetzner server for 3-4 years.

Everything has been working very well, with about 40 LXC containers running.

Output of pveversion:

root@px ~ # pveversion -v

proxmox-ve: 8.2.0 (running kernel: 6.8.12-1-pve)

pve-manager: 8.2.4 (running version: 8.2.4/faa83925c9641325)

proxmox-kernel-helper: 8.1.0

proxmox-kernel-6.8: 6.8.12-1

proxmox-kernel-6.8.12-1-pve-signed: 6.8.12-1

proxmox-kernel-6.8.8-4-pve-signed: 6.8.8-4

proxmox-kernel-6.8.8-2-pve-signed: 6.8.8-2

proxmox-kernel-6.5.13-6-pve-signed: 6.5.13-6

proxmox-kernel-6.5: 6.5.13-6

amd64-microcode: 3.20240820.1~deb12u1

ceph-fuse: 16.2.11+ds-2

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown: residual config

ifupdown2: 3.2.0-1+pmx9

intel-microcode: 3.20240813.1~deb12u1

libjs-extjs: 7.0.0-4

libknet1: 1.28-pve1

libproxmox-acme-perl: 1.5.1

libproxmox-backup-qemu0: 1.4.1

libproxmox-rs-perl: 0.3.3

libpve-access-control: 8.1.4

libpve-apiclient-perl: 3.3.2

libpve-cluster-api-perl: 8.0.7

libpve-cluster-perl: 8.0.7

libpve-common-perl: 8.2.2

libpve-guest-common-perl: 5.1.4

libpve-http-server-perl: 5.1.0

libpve-rs-perl: 0.8.9

libpve-storage-perl: 8.2.3

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 6.0.0-1

lxcfs: 6.0.0-pve2

novnc-pve: 1.4.0-3

proxmox-backup-client: 3.2.7-1

proxmox-backup-file-restore: 3.2.7-1

proxmox-kernel-helper: 8.1.0

proxmox-mail-forward: 0.2.3

proxmox-mini-journalreader: 1.4.0

proxmox-widget-toolkit: 4.2.3

pve-cluster: 8.0.7

pve-container: 5.1.12

pve-docs: 8.2.3

pve-edk2-firmware: 4.2023.08-4

pve-firewall: 5.0.7

pve-firmware: 3.13-1

pve-ha-manager: 4.0.5

pve-i18n: 3.2.2

pve-qemu-kvm: 9.0.2-2

pve-xtermjs: 5.3.0-3

qemu-server: 8.2.4

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

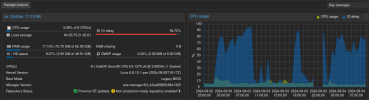

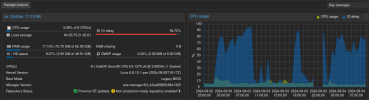

But for the past few days, I have been facing an I/O delay issue.

I don't know what happened.

I asked for a reboot with a hard drive check. Everything seems OK.

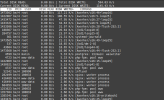

But the SMART values do not look OK.

What do you think?
