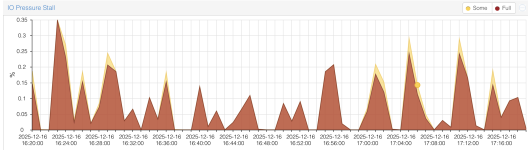

IO Pressure Stall

- Thread starter Andrii.B



Can anyone explain why IO pressure stall always shows an average of about 0.1% on ZFS on 4x NVMe, while LVM on hardware RAID with SSDs shows zero practically all the time?

Perhaps because ZFS writes much more often than LVM.

That graph shows an average below 0.15 percent. Do you feel that's a problem?
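For anyone who wants to compare the graph against the raw kernel counters, here is a minimal sketch that parses the PSI text format exposed by Linux in `/proc/pressure/io`. The helper name `parse_psi` is made up for illustration, and the thread does not say whether the graph plots the `some` or the `full` line, so both are returned.

```python
from pathlib import Path


def parse_psi(text):
    """Parse PSI text (the format of /proc/pressure/io) into nested dicts.

    Each line looks like:
        some avg10=0.10 avg60=0.12 avg300=0.08 total=123456
    """
    result = {}
    for line in text.strip().splitlines():
        kind, *fields = line.split()
        # Each field is key=value; avgN values are percentages, total is microseconds.
        result[kind] = {k: float(v) for k, v in (f.split("=", 1) for f in fields)}
    return result


if __name__ == "__main__":
    path = Path("/proc/pressure/io")
    try:
        for kind, values in parse_psi(path.read_text()).items():
            print(kind, values)
    except OSError as exc:
        # PSI may be missing or disabled on this kernel.
        print(f"could not read {path}: {exc}")
```

Running it on both the ZFS node and the LVM node at the same time would show whether the difference is already present in the kernel's own numbers or only appears in the graph.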

I don't feel it, of course. But it's strange, because there are fewer writes on ZFS, and iostat shows the following:

- LVM: AVG 1000 r/s, 25 MB/s, 2 %rrqm, 0.4 r_await, 500 w/s, 7 MB/s, 2 %wrqm, 0.05 w_await, 10 %util

- ZFS: AVG 100 r/s, 1.2 MB/s, 0 %rrqm, 0.1 r_await, 150 w/s, 7 MB/s, 0 %wrqm, 0.2 w_await, 2 %util
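As a rough aid for reading the two iostat lines above, the average request size can be derived by dividing throughput by operations per second. This is only a sketch: it assumes 1 MB = 1000 kB (iostat may report MiB depending on flags), and the dictionary keys are made-up names, not iostat column names.

```python
# Averages copied from the iostat figures above.
stats = {
    "LVM": {"r_s": 1000, "r_mb_s": 25, "w_s": 500, "w_mb_s": 7},
    "ZFS": {"r_s": 100, "r_mb_s": 1.2, "w_s": 150, "w_mb_s": 7},
}


def avg_request_kb(mb_per_s, ops_per_s):
    """Average request size in kB, assuming 1 MB = 1000 kB."""
    return mb_per_s * 1000 / ops_per_s


for name, s in stats.items():
    read_kb = avg_request_kb(s["r_mb_s"], s["r_s"])
    write_kb = avg_request_kb(s["w_mb_s"], s["w_s"])
    print(f"{name}: avg read {read_kb:.1f} kB, avg write {write_kb:.1f} kB")
```

With these figures, writes on ZFS average roughly 47 kB per request versus 14 kB on LVM, so the same 7 MB/s is spread over fewer, larger writes. Whether that has anything to do with the pressure stall difference is not something these numbers can show on their own.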

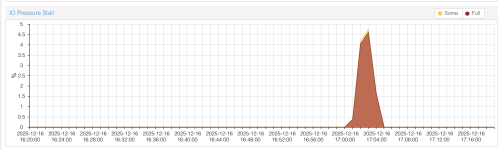

I see the same issue on VMs. I limited the drive performance of a VM (IOPS, MB/s): there is 3% IO pressure stall on the ZFS VM and 0% on the LVM VM.

It seems IO Pressure Stall doesn't work correctly with LVM or hardware raid.


Maybe your hardware RAID has a writeback cache?

What is your SSD model? Is it a datacenter-grade SSD with a supercapacitor? (ZFS will run poorly on consumer SSDs.)

0% pressure is totally fine; it should be 0% for good performance.

Yes, I use a writeback cache on all my nodes, except the NVMe ones.

As for ZFS, I use Micron 7500 Pro drives. I'm testing a new configuration with ZFS on NVMe instead of enterprise SSDs in hardware RAID.

I can't say that it works badly, but the graphs show something different than on LVM.