OK, yes, this is normal. The system does not have enough disk performance: two disks plus backups on the same machine can't work well.

```
BUFFERED READS: 31.33 MB/sec
AVERAGE SEEK TIME: 15.26 ms
FSYNCS/SECOND: 26.44
```

31.33 MB/s is not much, and the FSYNCS/SECOND value should be much higher: a good value is about 3000 or more. Here are two servers for comparison.

A small HP with 4 SATA disks in RAID10:

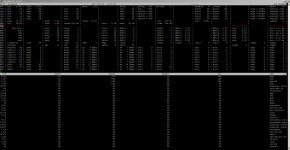

```
CPU BOGOMIPS: 24742.04
REGEX/SECOND: 1524866
HD SIZE: 9.72 GB (/dev/dm-0)
BUFFERED READS: 188.50 MB/sec
AVERAGE SEEK TIME: 7.78 ms
FSYNCS/SECOND: 5259.39
DNS EXT: 44.98 ms
```

DNS EXT: 44.98 ms

Or a Supermicro with 6 SATA disks in ZFS RAID10:

```
CPU BOGOMIPS: 40002.00
REGEX/SECOND: 2686294
HD SIZE: 1920.82 GB (v-machines)
FSYNCS/SECOND: 6812.31
DNS EXT: 61.02 ms
```
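To re-check only the fsync figure on a specific storage path (for example after adding disks), a rough Python sketch like the one below may help. It assumes that repeated small writes, each forced to disk with `os.fsync()`, approximate what pveperf's FSYNCS/SECOND measures; it is not pveperf's actual implementation, so the numbers will not match exactly. The function name `measure_fsyncs_per_second` and its parameters are hypothetical.

```python
import os
import tempfile
import time


def measure_fsyncs_per_second(directory: str, duration: float = 3.0,
                              block_size: int = 4096) -> float:
    """Roughly estimate fsync() calls per second on the filesystem holding `directory`.

    Assumption: small writes, each followed by fsync(), approximate the idea
    behind pveperf's FSYNCS/SECOND; this is not pveperf's own code.
    """
    block = b"\0" * block_size
    # Scratch file created on the storage under test.
    fd, path = tempfile.mkstemp(dir=directory)
    count = 0
    try:
        start = time.monotonic()
        deadline = start + duration
        while time.monotonic() < deadline:
            # Overwrite the same block each time so the scratch file stays small.
            os.lseek(fd, 0, os.SEEK_SET)
            os.write(fd, block)
            # Force the write to stable storage before the next iteration.
            os.fsync(fd)
            count += 1
        elapsed = time.monotonic() - start
    finally:
        os.close(fd)
        os.remove(path)
    return count / elapsed if elapsed > 0 else 0.0
```

For example, `measure_fsyncs_per_second("/var/lib/vz")` would test the directory behind the default `local` storage. Pointing it at a tmpfs mount gives meaningless, very high numbers, because nothing reaches a disk there.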

A system's performance does not always depend on these values, but they can tell you that you have a problem with your hardware, or simply not enough hard drives.
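To compare several pveperf runs against the rule of thumb above (FSYNCS/SECOND of about 3000 or more), the output can be parsed with a small Python sketch. The regex assumes the `NAME: value` line format shown in this thread; `parse_pveperf`, `fsync_warning` and `MIN_FSYNCS_PER_SECOND` are hypothetical names, and 3000 is a forum rule of thumb, not an official Proxmox limit.

```python
from __future__ import annotations

import re

# Rule of thumb from this thread, not an official Proxmox limit.
MIN_FSYNCS_PER_SECOND = 3000.0

# Matches lines such as "FSYNCS/SECOND: 26.44" or "HD SIZE: 9.72 GB (/dev/dm-0)"
# and captures the metric name and its first number.
_LINE = re.compile(r"^\s*([A-Z][A-Z /]*?)\s*:\s*([0-9]+(?:\.[0-9]+)?)")


def parse_pveperf(text: str) -> dict[str, float]:
    """Return {metric name: first numeric value} for each pveperf output line."""
    values: dict[str, float] = {}
    for line in text.splitlines():
        match = _LINE.match(line)
        if match:
            values[match.group(1)] = float(match.group(2))
    return values


def fsync_warning(values: dict[str, float]) -> str | None:
    """Return a warning if FSYNCS/SECOND is below the rule-of-thumb minimum."""
    fsyncs = values.get("FSYNCS/SECOND")
    if fsyncs is not None and fsyncs < MIN_FSYNCS_PER_SECOND:
        return (f"FSYNCS/SECOND {fsyncs:.2f} is below ~{MIN_FSYNCS_PER_SECOND:.0f}: "
                "check the disks for hardware problems or add more disks")
    return None
```

With the values from the first post, `fsync_warning` returns a warning for 26.44, while both comparison servers (5259.39 and 6812.31) pass.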

One last thing: software RAID with mdadm is not supported, but it should also work with more disks or with some enterprise SSDs.

I also recommend upgrading to the newest PVE version as part of the hardware change/upgrade.

Please post the details of your HDDs:

```
smartctl -a /dev/sda
smartctl -a /dev/sdb
smartctl -a /dev/sdc
```
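When reading `smartctl -a` output for ATA/SATA disks, a few attributes in the SMART attribute table are worth a first look. The Python sketch below assumes the usual attribute table layout (`ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE`) and flags a hypothetical watch list of attributes whose raw value is above zero. The choice of attributes and the helper names are only assumptions; the full output is still what should be posted here.

```python
import subprocess

# Hypothetical watch list: a raw value above zero on these attributes often
# points to a failing disk or a cabling problem. Adjust as needed.
WATCH = (
    "Reallocated_Sector_Ct",
    "Current_Pending_Sector",
    "Offline_Uncorrectable",
    "UDMA_CRC_Error_Count",
)


def read_smart(device: str) -> str:
    """Run `smartctl -a` on a device (needs smartmontools and root)."""
    # smartctl's exit status is a bit mask, so a nonzero code does not
    # necessarily mean the output is unusable; check=True is not used.
    result = subprocess.run(["smartctl", "-a", device], capture_output=True, text=True)
    return result.stdout


def parse_smart_attributes(text: str) -> dict:
    """Map ATTRIBUTE_NAME -> integer RAW_VALUE from the ATA attribute table."""
    attrs = {}
    for line in text.splitlines():
        parts = line.split()
        # Attribute rows start with a numeric ID and have at least 10 columns;
        # RAW_VALUE is the 10th column (text like "(Min/Max ...)" may follow it).
        if len(parts) >= 10 and parts[0].isdigit():
            try:
                attrs[parts[1]] = int(parts[9])
            except ValueError:
                continue  # non-integer raw values are skipped
    return attrs


def suspicious(attrs: dict) -> list:
    """Return watched attributes whose raw value is greater than zero."""
    return [name for name in WATCH if attrs.get(name, 0) > 0]
```

On the host, calling `suspicious(parse_smart_attributes(read_smart("/dev/sda")))` for each of the three disks gives a short list of attributes to look at first.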