Hi,

We are having an I/O problem with (at least) one of our PVE hosts. The host runs only one VM, which has two drives:

One drive holds /, the other is a 10 TB data mount.

The VM shows high I/O utilisation:

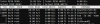

But the host does not:

As all the writes in the VM go to the 10 TB HDD, I don't think enabling IO Thread will bring any benefit.

What I'm wondering about is why the VM is so much slower than the host. Is anyone else having the same problem, or does anyone have a solution?

Thanks and Regards, Jonas