Lab Equipment Overview

Router:

Mikrotik CCR2004-1G-12S-2XS

Switches:

- SW01: Mikrotik CRS504-4XQ-IN, equipped with four XQ+BC0003-XS+ cables

- SW02: Mikrotik CRS310-8G-25+IN

- SW03: Mikrotik CRS310-8G-25+IN

Proxmox Hosts:

Minisforum MS-01

I have deployed a Proxmox cluster of six Minisforum MS-01 machines. Each host has two 10G NICs, two 2.5G NICs, and two Thunderbolt 4 ports that effectively function as 25G network interfaces. One of the 2.5G NICs on each host is connected to a switch access port for Intel vPro; all other ports are connected to switch trunk ports.

In addition to this setup, I have configured three VLAN networks:

- VLAN 30 is designated for Proxmox Corosync on the 2.5G port.

- VLAN 60 and VLAN 70 utilize the bonded 10G NIC ports for Virtual Machines (VMs) and Kubernetes (K8s).

SW01 is connected to the router using two 25G ports configured in a bond (802.3ad, Layer 3+4). Additionally, SW02 and SW03 are linked via two 10G ports, also set up in a bonding configuration.

The router acts as a DHCP server and serves as the main gateway to the internet.

View attachment 80430

----

Following this guide, I configured Thunderbolt networking:

Bash:

root@pve01:~# vtysh -c 'show openfabric route'

Area 1:

IS-IS L2 IPv4 routing table:

Prefix Metric Interface Nexthop Label(s)

------------------------------------------------------

10.0.0.81/32 0 - - -

10.0.0.82/32 20 en06 10.0.0.82 -

10.0.0.83/32 30 en06 10.0.0.82 -

10.0.0.84/32 20 en05 10.0.0.84 -

10.0.0.85/32 30 en05 10.0.0.84 -

10.0.0.86/32 40 en05 10.0.0.84 -

en06 10.0.0.82 -

IS-IS L2 IPv6 routing table:

root@pve01:~# vtysh -c 'show bgp summary'

L2VPN EVPN Summary (VRF default):

BGP router identifier 10.0.0.81, local AS number 65000 vrf-id 0

BGP table version 0

RIB entries 17, using 3264 bytes of memory

Peers 5, using 3623 KiB of memory

Peer groups 1, using 64 bytes of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt Desc

pve02(10.0.0.82) 4 65000 366 366 0 0 0 00:18:07 1 1 N/A

pve03(10.0.0.83) 4 65000 371 368 0 0 0 00:15:53 1 1 N/A

pve04(10.0.0.84) 4 65000 366 367 0 0 0 00:18:05 1 1 N/A

pve05(10.0.0.85) 4 65000 370 371 0 0 0 00:15:39 1 1 N/A

pve06(10.0.0.86) 4 65000 377 373 0 0 0 00:15:48 1 1 N/A

Total number of neighbors 5

___

Proxmox hosts can ping each other using their loopback interface IP addresses.
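For anyone who wants to repeat that check, here is a minimal sketch that probes every loopback address from the routing table above. The function name `check_mesh` and its optional probe-command argument are my own illustration, not part of any Proxmox or FRR tooling.

```shell
#!/bin/sh
# Loopback addresses taken from the OpenFabric routing table above.
LOOPBACKS="10.0.0.81 10.0.0.82 10.0.0.83 10.0.0.84 10.0.0.85 10.0.0.86"

# check_mesh [PROBE]: run PROBE against every loopback address.
# PROBE defaults to a single ping with a one-second timeout; the
# argument is hypothetical and only exists so the probe can be swapped.
check_mesh() {
    # Word splitting of $probe is intentional so it can carry options.
    probe="${1:-ping -c 1 -W 1}"
    failed=0
    for ip in $LOOPBACKS; do
        if $probe "$ip" >/dev/null 2>&1; then
            echo "ok   $ip"
        else
            echo "FAIL $ip"
            failed=1
        fi
    done
    # Non-zero exit status if at least one address did not answer.
    return "$failed"
}
```

Running `check_mesh` on each host prints one line per loopback address and returns a non-zero status if any peer is unreachable.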

I have installed and configured a Ceph cluster over that Thunderbolt mesh network:

YAML:

[global]

auth_client_required = cephx

auth_cluster_required = cephx

auth_service_required = cephx

cluster_network = 10.0.0.81/24

fsid = 7b54fd80-5f11-416b-ad5b-6e0ce7cb0694

mon_allow_pool_delete = true

mon_host = 10.0.0.81 10.0.0.82 10.0.0.83 10.0.0.84 10.0.0.85 10.0.0.86

ms_bind_ipv4 = true

ms_bind_ipv6 = false

osd_pool_default_min_size = 2

osd_pool_default_size = 3

public_network = 10.0.0.81/24

---

Currently, my network configuration across the Proxmox hosts is as follows:

- vmbr1.70 (2.5G NIC): Subnet 10.1.70.0/28 for Corosync and management purposes.

- vmbr0.60 (10G NICs in a bond): Subnet 10.1.60.0/24 designated for virtual machines (VMs).

- vmbr0.80 (10G NICs in a bond): Subnet 10.1.80.0/27 allocated for Kubernetes (K8S) operations.

I would like to use Ceph-CSI in my Kubernetes (K8s) virtual machines. What is the best way to give the K8s VMs network access to the Ceph network?
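For context, whichever network path is chosen, the K8s VMs must be able to reach the monitor addresses (and the OSDs on the same public network) listed in the Ceph configuration above. As far as I understand, Ceph-CSI reads these from a `config.json` entry containing a `clusterID` and a `monitors` list. A minimal sketch that builds that entry from my `fsid` and `mon_host` values follows; the function name `csi_config_json` is hypothetical, and port 6789 (the msgr v1 monitor port) is an assumption about which messenger version the client would use.

```shell
#!/bin/sh
# Values copied from the [global] section of the Ceph configuration above.
FSID="7b54fd80-5f11-416b-ad5b-6e0ce7cb0694"
MON_HOSTS="10.0.0.81 10.0.0.82 10.0.0.83 10.0.0.84 10.0.0.85 10.0.0.86"
# Assumption: monitors are reached on the msgr v1 port.
MON_PORT=6789

# csi_config_json (hypothetical helper): print a one-cluster config.json
# body with the fsid as clusterID and every monitor as "ip:port".
csi_config_json() {
    monitors=""
    for ip in $MON_HOSTS; do
        # Append a comma separator only after the first entry.
        monitors="${monitors:+$monitors, }\"${ip}:${MON_PORT}\""
    done
    printf '[{"clusterID": "%s", "monitors": [%s]}]\n' "$FSID" "$monitors"
}
```

Calling `csi_config_json` prints a single JSON line that could be pasted into the Ceph-CSI ConfigMap once the monitors are reachable from the VMs.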

Currently, I am considering the following:

- Routing traffic to each Proxmox VE (PVE) host's loopback interface (10.0.0.8x) through that host's IP address in VLAN 80.

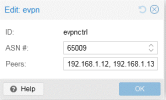

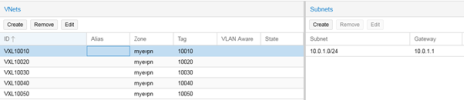

- Configuring a virtual network (vNet) that allows access to the loopback network and attaching it as a second NIC to the K8s VMs.
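To make the first option concrete, here is a rough sketch of the static routes a K8s VM would need. It assumes a hypothetical addressing scheme in which host pve0N has loopback 10.0.0.8N and VLAN 80 address 10.1.80.N; the real VLAN 80 host addresses may differ. It also assumes IP forwarding is enabled on the PVE hosts so they pass the traffic on to their loopback. By default the function only prints the commands; the `ROUTE_CMD` variable and the function name `add_ceph_routes` are illustrative.

```shell
#!/bin/sh
# Dry run by default: the commands are printed, not executed.
# Set ROUTE_CMD="ip route replace" (as root, inside the VM) to apply them.
ROUTE_CMD="${ROUTE_CMD:-echo ip route replace}"

# add_ceph_routes (hypothetical helper): one /32 route per PVE loopback,
# each via the assumed VLAN 80 address of the host that owns it.
add_ceph_routes() {
    n=1
    while [ "$n" -le 6 ]; do
        # Assumed mapping: loopback 10.0.0.8N is reached via 10.1.80.N.
        $ROUTE_CMD "10.0.0.8${n}/32" via "10.1.80.${n}"
        n=$((n + 1))
    done
}
```

With /32 routes like these, traffic for a given monitor or OSD enters through the host that owns the address, so it does not need to cross the Thunderbolt mesh a second time.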

Thank you for your assistance!