> Did you do a make AND make install, then reboot?

I'll assume this was directed at me. Yes, I did. I haven't been able to find a combination that achieves ~10/~10 Gbps in both directions. Usually incoming is fine but outgoing is ridiculously low, which is just... odd. It suggests the optics are fine (mine are just generic 10GTeks) and that it's definitely a driver issue, which is also backed up by the fact that older kernels seem to have worked mostly fine. I'm half tempted to install RHEL on the machine and benchmark it to see if the issue goes away. That would at least give me a starting point for figuring out what changed.
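One quick way to confirm that make install and the reboot actually took effect is to compare the ixgbe version on disk with the one that is loaded. This is a minimal sketch, assuming the out-of-tree Intel driver sets a module version string such as 5.20.9 (the in-tree driver in recent kernels usually does not). The function names are hypothetical; ethtool -i on the interface also shows the driver name and version.

```shell
#!/bin/sh
# Sketch: after "make install" and a reboot, check which ixgbe is in use.
# Assumption: the out-of-tree Intel driver sets a module version string
# (e.g. 5.20.9); the in-tree driver in recent kernels usually does not.

# Version of the ixgbe module currently loaded into the kernel.
loaded_ixgbe_version() {
    if [ -r /sys/module/ixgbe/version ]; then
        cat /sys/module/ixgbe/version
    elif [ -d /sys/module/ixgbe ]; then
        echo "loaded, no version string (likely the in-tree driver)"
    else
        echo "not loaded"
    fi
}

# Version of the ixgbe.ko that modprobe would load for the running kernel.
installed_ixgbe_version() {
    v=$(modinfo -F version ixgbe 2>/dev/null)
    echo "${v:-no version string or module not found}"
}

echo "running kernel:  $(uname -r)"
echo "installed ixgbe: $(installed_ixgbe_version)"
echo "loaded ixgbe:    $(loaded_ixgbe_version)"
```

If the installed and loaded versions differ, the old module is probably still being picked up (for example from the initramfs).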

[SOLVED] intel x553 SFP+ ixgbe no go on PVE8

- Thread starter vesalius


Hi,

I tested it on my ATOM C3958 machine with kernel 6.8.8-2-pve + ixgbe driver 5.20.9.

There were no warnings when building the driver.

The machine is connected to the switch (Mikrotik CRS317-1G-16S+RM) by a DAC cable.

Here are the iperf3 results against a machine with an X710 (FreeBSD 13):

```
promox# iperf3 -c nfs
(snip)
[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-10.00 sec 11.5 GBytes 9.90 Gbits/sec 0 sender
[ 5] 0.00-10.00 sec 11.5 GBytes 9.89 Gbits/sec receiver

promox# iperf3 -s
(snip)
[ ID] Interval Transfer Bitrate
[ 5] 0.00-10.06 sec 11.1 GBytes 9.44 Gbits/sec receiver
```

Looks like it's running at wire speed both up and down to me!
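As a sanity check on "wire speed": the figures in this thread only add up if iperf3's Transfer column is in binary units (1 GByte = 2^30 bytes) while Bitrate is decimal (1 Gbit/s = 10^9 bit/s). On that basis, 11.5 GBytes in 10 s is about 9.88 Gbit/s, in line with the 9.89/9.90 reported. The helper below is hypothetical and just does that conversion with awk; Transfer is rounded in iperf3's output, so expect small differences.

```shell
#!/bin/sh
# Hypothetical helper: convert an iperf3 "Transfer" figure in GBytes
# (binary, 2^30 bytes) over an interval in seconds into Gbit/s (10^9 bit/s).
gbps_from_transfer() {
    awk -v g="$1" -v s="$2" 'BEGIN { printf "%.2f\n", g * 1024^3 * 8 / s / 1e9 }'
}

gbps_from_transfer 11.5 10    # prints 9.88
```

The same conversion gives about 4.43 Gbit/s for the Qotom receive stream further down (2.58 GBytes in 5 s), matching the reported figure.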


> Did you do a make AND make install, then reboot?

Yes, like I did when I reported this originally.

https://forum.proxmox.com/threads/intel-x553-sfp-ixgbe-no-go-on-pve8.135129/post-626516

> I've been trying to get this to work for about a week, and it only works at 10 Gbit in one direction. Maybe the problem is switch- or module-dependent?

As you say, it may be a limit of the hardware you are using. I personally have not verified whether you can get bidirectional 10 Gb/s over this X553 port, but I would definitely start by looking at your transceivers and switch. As a quick and easy test, connect two of the X553 ports together and see whether they can talk to each other at the speed you expect without a switch involved.

The other thing to consider is that these X553 ports may only be rated for about 10 Gb/s of combined throughput. I have no idea, I'm just tossing out an idea because this is older/cheaper hardware we are using.
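If you try the back-to-back test with two X553 ports on the same machine, put each port in its own network namespace; otherwise both addresses belong to one kernel and iperf3 traffic between them is delivered internally without ever crossing the cable. A minimal sketch using ip netns, where the interface names (eno3/eno4), the namespace names and the 10.99.0.0/24 addresses are all hypothetical, and DRY_RUN=1 only prints the commands. Moving a port into a namespace drops its addresses and bridge membership, so don't use ports that carry management or VM traffic.

```shell
#!/bin/sh
# Sketch: back-to-back iperf3 test between two ports of the SAME host.
# Each port is moved into its own network namespace so the traffic really
# crosses the DAC instead of being delivered locally by the kernel.
# Hypothetical: interface names, namespace names x553a/x553b, 10.99.0.0/24.
# Needs root. Set DRY_RUN=1 to print the commands instead of running them.

run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

setup_loop() {
    a=$1    # first port, e.g. eno3
    b=$2    # second port, e.g. eno4
    run ip netns add x553a
    run ip netns add x553b
    run ip link set "$a" netns x553a   # addresses/bridge membership are lost
    run ip link set "$b" netns x553b
    run ip netns exec x553a ip addr add 10.99.0.1/24 dev "$a"
    run ip netns exec x553b ip addr add 10.99.0.2/24 dev "$b"
    run ip netns exec x553a ip link set "$a" up
    run ip netns exec x553b ip link set "$b" up
}

run_loop_test() {
    # One-off daemonised server in x553b, bidirectional client in x553a.
    run ip netns exec x553b iperf3 -s -1 -D
    run sleep 1
    run ip netns exec x553a iperf3 -t 10 -c 10.99.0.2 --bidir
}

teardown_loop() {
    # Destroying a namespace returns its physical NICs to the root namespace.
    run ip netns del x553a
    run ip netns del x553b
}

# Usage (as root): setup_loop eno3 eno4 && run_loop_test; teardown_loop
```

If the link needs longer than a second to come up after setup_loop, increase the sleep before the client starts.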

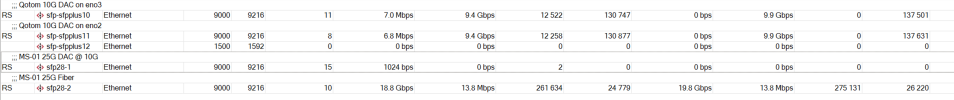

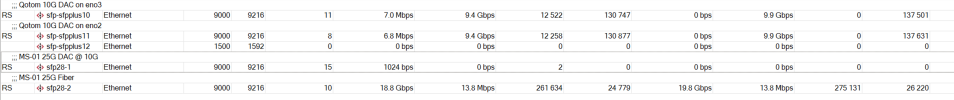

Here are my own tests using kernel 6.8.8-2 with the OOT ixgbe 5.20.9 on the Qotom Q20332G9-S10, along with some Minisforum MS-01 devices that have an Intel X710 controller, which works with the default kernel drivers. I'm using DAC cables with an ICX 6610 switch and leaving the MTU at the default of 1500.

With bidirectional testing I do see a slowdown on the Qotom when it comes to receiving. Without --bidir I get around 8.7 to 9.4 Gb/s, but the Qotom's X553 slows down on receive when it is also busy sending. There seem to be a lot of retries, so something isn't quite right.

Testing between two MS-01 servers with the exact same DAC and switch, just with the X710 controllers, I get about 9.35 Gb/s whether I test bidirectionally or not.
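The pattern described here (close to line rate in each direction on its own, but the Qotom's receive side dropping under --bidir) only shows up if all three modes are run. A small sketch that runs them in sequence against one server, assuming iperf3 -s is already running there and an iperf3 new enough for --bidir (3.7 or later); run_iperf_matrix and DRY_RUN are hypothetical names. Keep an eye on the Retr column, which is where the Qotom results below differ most from the MS-01 pair.

```shell
#!/bin/sh
# Sketch: run the three iperf3 modes used in this thread against one server.
# Assumes "iperf3 -s" is already listening on the target host.
# run_iperf_matrix and DRY_RUN are hypothetical names; DRY_RUN=1 only prints.

run_iperf_matrix() {
    host=$1
    secs=${2:-5}
    # "" = client sends, -R = client receives, --bidir = both at once
    for mode in "" "-R" "--bidir"; do
        cmd="iperf3 -t $secs -c $host${mode:+ $mode}"
        if [ -n "${DRY_RUN:-}" ]; then
            echo "$cmd"
        else
            echo "== $cmd"
            $cmd    # left unquoted on purpose so the words split into arguments
        fi
    done
}

# Example: run_iperf_matrix ms01-1 5
```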


These are the results from the Qotom server testing against an MS-01 server:

```
root@qotom:~# iperf3 -t 5 -c ms01-1 --bidir
Connecting to host -----, port 5201
[ 5] local ---- port 43594 connected to -------- port 5201
[ 7] local ---- port 43604 connected to -------- port 5201
[ ID][Role] Interval Transfer Bitrate Retr Cwnd
[ 5][TX-C] 0.00-1.00 sec 1.10 GBytes 9.40 Gbits/sec 36 1.40 MBytes
[ 7][RX-C] 0.00-1.00 sec 294 MBytes 2.46 Gbits/sec
[ 5][TX-C] 1.00-2.00 sec 1.09 GBytes 9.37 Gbits/sec 0 1.64 MBytes
[ 7][RX-C] 1.00-2.00 sec 555 MBytes 4.66 Gbits/sec
[ 5][TX-C] 2.00-3.00 sec 1.09 GBytes 9.38 Gbits/sec 0 1.72 MBytes
[ 7][RX-C] 2.00-3.00 sec 563 MBytes 4.72 Gbits/sec
[ 5][TX-C] 3.00-4.00 sec 1.09 GBytes 9.38 Gbits/sec 1 1.74 MBytes
[ 7][RX-C] 3.00-4.00 sec 602 MBytes 5.05 Gbits/sec
[ 5][TX-C] 4.00-5.00 sec 1.09 GBytes 9.39 Gbits/sec 0 1.75 MBytes
[ 7][RX-C] 4.00-5.00 sec 620 MBytes 5.20 Gbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID][Role] Interval Transfer Bitrate Retr
[ 5][TX-C] 0.00-5.00 sec 5.46 GBytes 9.39 Gbits/sec 37 sender
[ 5][TX-C] 0.00-5.00 sec 5.46 GBytes 9.38 Gbits/sec receiver
[ 7][RX-C] 0.00-5.00 sec 2.58 GBytes 4.43 Gbits/sec 6180 sender
[ 7][RX-C] 0.00-5.00 sec 2.57 GBytes 4.42 Gbits/sec receiver
```

These are the results between two MS-01 servers with X710 controllers:

```
root@ms01-1:~# iperf3 -t 5 -c ms01-2 --bidir
Connecting to host ----, port 5201
[ 5] local ---- port 47968 connected to ------- port 5201
[ 7] local ---- port 47978 connected to ------- port 5201
[ ID][Role] Interval Transfer Bitrate Retr Cwnd
[ 5][TX-C] 0.00-1.00 sec 1.09 GBytes 9.35 Gbits/sec 579 837 KBytes
[ 7][RX-C] 0.00-1.00 sec 1.05 GBytes 8.99 Gbits/sec
[ 5][TX-C] 1.00-2.00 sec 1.06 GBytes 9.14 Gbits/sec 23 905 KBytes
[ 7][RX-C] 1.00-2.00 sec 1.09 GBytes 9.37 Gbits/sec
[ 5][TX-C] 2.00-3.00 sec 1.08 GBytes 9.31 Gbits/sec 24 660 KBytes
[ 7][RX-C] 2.00-3.00 sec 1.09 GBytes 9.37 Gbits/sec
[ 5][TX-C] 3.00-4.00 sec 1.09 GBytes 9.32 Gbits/sec 68 701 KBytes
[ 7][RX-C] 3.00-4.00 sec 1.09 GBytes 9.36 Gbits/sec
[ 5][TX-C] 4.00-5.00 sec 1.09 GBytes 9.33 Gbits/sec 37 836 KBytes
[ 7][RX-C] 4.00-5.00 sec 1.09 GBytes 9.36 Gbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID][Role] Interval Transfer Bitrate Retr
[ 5][TX-C] 0.00-5.00 sec 5.41 GBytes 9.29 Gbits/sec 731 sender
[ 5][TX-C] 0.00-5.00 sec 5.41 GBytes 9.29 Gbits/sec receiver
[ 7][RX-C] 0.00-5.00 sec 5.41 GBytes 9.30 Gbits/sec 153 sender
[ 7][RX-C] 0.00-5.00 sec 5.41 GBytes 9.29 Gbits/sec receiver
```

Just an update to give a little more information.

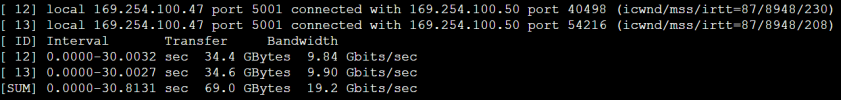

First, these X553 ports work perfectly on kernel 5.15.152-1-pve. In fact, I run two of them as a bonded pair over two DACs to a MikroTik CCR2004 (MTU 9000). Tested with multithreaded iperf2 in both directions (not with --bidir, but with -R to test receive only), these are the results:

However, the problems start as soon as I switch to any version 6 kernel. The worst results are with a 10GTEK Intel-compatible 10Gb SFP+ RJ45 module connected to a Netgear XS712T 10G switch, as I posted previously. The DACs fare better on my MikroTik, giving full 10G when sending traffic but only about half (4-6G) when receiving. I'm hoping a fix will come along in the future, which I will test and post results for, but until then I'm stuck on the version 5 kernel.

Has anyone got these working on a version 6 kernel with full 10G in both directions? If so, what combination of modules/DAC/switch, kernel and driver?


You didn't do a great job all those years then, shame on you.

Dude, I have no idea what your deal is, but I've been a Linux sysadmin since the late '90s and I'm in charge of technology procurement and buildout.

If you have nothing helpful to contribute as far as actually fixing this issue, stop spamming the thread with your opinions.

On topic:

5.20.9 on 6.8.8-2 works here btw!


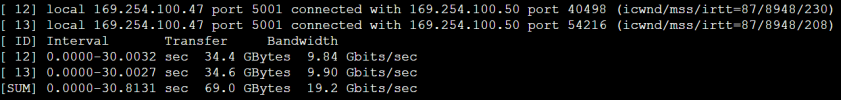

I also have a Minisforum MS-01 on hand, so I tried it out.

The MS-01 is connected to a BKHD 1310np (C3958).

Both run kernel 6.8.8-2-pve, but the ATOM C3xxx machines use ixgbe driver 5.20.9, while the MS-01 uses the stock driver.

All connections use fs.com direct attach cables (https://www.fs.com/jp/products/74616.html).

I am not using the 10GBase-T module.

MS-01 - CRS317-1G-16S+RM - BKHD 1310np

Both upstream and downstream speeds are approximately 9.9Gbps.

Hi

I don't know if anyone can help. Like a lot of you, I sourced one of the Qotom 1U servers last year and couldn't get the SFP+ ports working.

At the time, the forum advice was to install PVE 7 and pin the kernel, which I did (to 5.15.143-1-pve).

PVE 7 goes out of support at the end of the month, and I've been trying to follow the various forum threads. Could someone post a step-by-step guide based on the latest driver release, or confirm whether vesalius's instructions are still valid?

A very new noob!


> Has anyone tried the new 5.20.10 drivers with the new 6.8.8-3 kernel? If so, is throughput any better?

Kernel 6.8.8-3-pve + ixgbe 5.20.9 also works fine. (The only difference between ixgbe 5.20.9 and 5.20.10 is the version string.)

As long as you back everything up, you should be able to dist-upgrade in place all the way to the latest PVE 8.2.4 and still run the 5.15 kernel; that's what I'm doing.

This is also what I'm having to do: as said, dist-upgrade to 8.2.4, install kernel 5.15.152-1-pve, then use:

```
proxmox-boot-tool kernel pin 5.15.152-1-pve
```
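A sketch of the pin-and-verify steps, assuming the 5.15.152-1-pve kernel is already installed (the thread doesn't say from which repository). proxmox-boot-tool kernel list shows the kernels it manages, kernel pin sets the default, and kernel unpin undoes it later; PIN_KVER and running_pinned_kernel are hypothetical names.

```shell
#!/bin/sh
# Sketch: pin a PVE host to an older kernel, then verify after the reboot.
# PIN_KVER defaults to the version used in this thread; override as needed.
PIN_KVER=${PIN_KVER:-5.15.152-1-pve}

# Succeeds when the running kernel is exactly the requested version.
running_pinned_kernel() {
    [ "$(uname -r)" = "$1" ]
}

case "${1:-check}" in
    pin)
        proxmox-boot-tool kernel list            # is $PIN_KVER listed?
        proxmox-boot-tool kernel pin "$PIN_KVER"
        echo "Pinned $PIN_KVER; reboot, then run this again without arguments."
        ;;
    check)
        if running_pinned_kernel "$PIN_KVER"; then
            echo "OK: running $PIN_KVER"
        else
            echo "Still on $(uname -r), not $PIN_KVER"
        fi
        ;;
esac
```

Run it with pin as root before the reboot and without arguments afterwards.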


> So with the help of @aarononeal and the How to build a kernel module with DKMS on Linux webpage, I have DKMS autoinstall working with the last kernel upgrade.
>
> ```
> apt-get install proxmox-default-headers build-essential dkms gcc make
> cd /tmp
> wget https://sourceforge.net/projects/e1000/files/ixgbe%20stable/5.19.9/ixgbe-5.19.9.tar.gz
> tar xvzf ixgbe-5.19.9.tar.gz -C /usr/src
> nano /usr/src/ixgbe-5.19.9/dkms.conf
> ```
>
> Copy the below into the dkms.conf file, then save.
>
> ```
> sudo dkms add ixgbe/5.19.9
> sudo dkms build ixgbe/5.19.9
> sudo dkms install ixgbe/5.19.9
> ```
>
> After this, DKMS should autoinstall the module with new kernels.

In order to get DKMS working automatically again with ixgbe/5.20.10 and kernel 6.8.8-* updates, you obviously need to substitute 5.20.10 for 5.19.9 in the steps above, but I also had to rm -rf /var/lib/dkms/ixgbe before running the last three commands (starting with sudo dkms add ixgbe/5.20.10). Otherwise DKMS would fail, because on each kernel upgrade it tried to build the older ixgbe/5.19.9 again, even though /usr/src/ixgbe-5.19.9/ had been deleted.
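The dkms.conf contents the guide refers to did not survive in this copy of the thread. As a hedged sketch only: the file below uses standard dkms.conf directives, but its exact contents are an assumption, including the idea that Intel's src/Makefile selects the target kernel via BUILD_KERNEL (check the driver's README or Makefile). The helper also tries dkms remove ... --all to clear old versions before adding the new one; that was not tested in the thread, and if the old source tree is already gone it may fail, in which case the rm -rf workaround above is the fallback. write_dkms_conf and dkms_switch_ixgbe are hypothetical names.

```shell
#!/bin/sh
# Sketch: register the Intel out-of-tree ixgbe driver with DKMS.
# ASSUMPTIONS: the source is already unpacked in /usr/src/ixgbe-<version>,
# and Intel's src/Makefile honours BUILD_KERNEL=<kernel release>.

# Write a minimal dkms.conf for ixgbe <version> into <srcdir>.
write_dkms_conf() {
    ver=$1
    srcdir=$2
    # \${kernelver} stays literal in the file; DKMS substitutes it per kernel.
    cat > "$srcdir/dkms.conf" <<EOF
PACKAGE_NAME="ixgbe"
PACKAGE_VERSION="$ver"
BUILT_MODULE_NAME[0]="ixgbe"
BUILT_MODULE_LOCATION[0]="src/"
DEST_MODULE_LOCATION[0]="/updates/dkms"
MAKE[0]="make -C src/ BUILD_KERNEL=\${kernelver}"
CLEAN="make -C src/ clean"
AUTOINSTALL="yes"
EOF
}

# Remove older registered ixgbe versions, then add/build/install <new_ver>.
# Run as root, ideally before deleting the old /usr/src/ixgbe-<old> tree.
dkms_switch_ixgbe() {
    new_ver=$1
    olds=$(dkms status -m ixgbe 2>/dev/null |
        sed -n 's|^ixgbe[/,] *\([^,:]*\).*|\1|p' | sort -u)
    for old in $olds; do
        [ "$old" = "$new_ver" ] && continue
        dkms remove "ixgbe/$old" --all ||
            echo "dkms remove ixgbe/$old failed; fall back to rm -rf" >&2
    done
    write_dkms_conf "$new_ver" "/usr/src/ixgbe-$new_ver" &&
        dkms add "ixgbe/$new_ver" &&
        dkms build "ixgbe/$new_ver" &&
        dkms install "ixgbe/$new_ver"
}

# Example (as root): dkms_switch_ixgbe 5.20.10
```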


There is a problematic commit from March 2022 in kernel 6.1 (https://lore.kernel.org/all/8267673cce94022974bcf35b2bf0f6545105d03e@ycharbi.fr/T/)

It was later reverted in 6.9, and the revert was backported to the 6.6 and 6.1 LTS kernels in May 2024.

Unfortunately, PVE does not use LTS kernels and so does not benefit from the kernel maintainers' work.

I manually applied the revert of the patch (https://patchwork.kernel.org/projec...karound-v1-1-50f80f261c94@intel.com/#25860468) to a 6.8.8-4 PVE kernel I built from source.

As soon as I booted this patched kernel, all the SFP+ ports came up and connected to my switch.

Without the patch, only one of the SFP+ ports comes up (eno4, the port on the bottom right).

If the Proxmox team can apply this patch to their PVE kernel, this will fix the SFP+ ports on all platforms using Intel C3xxx/X553.
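To see whether a given kernel falls in the window this post describes, a rough check on the version string is enough. This is a minimal sketch, assuming (per the post) the regression is present from 6.1 up to but not including 6.9. It deliberately ignores the 6.1.y/6.6.y LTS point releases that received the backported revert (the post doesn't say which ones), any fix Proxmox may carry in its own kernels, and the out-of-tree Intel driver, which others in the thread report working on 6.8. The function name is hypothetical.

```shell
#!/bin/sh
# Rough heuristic (hypothetical helper): does kernel release $1 fall in the
# 6.1 <= version < 6.9 window described above? LTS backports and distro
# patches are NOT taken into account.
x553_regression_window() {
    major=${1%%.*}
    rest=${1#*.}
    minor=${rest%%.*}
    minor=${minor%%[!0-9]*}      # "8-rc1" -> "8"
    [ "$major" -eq 6 ] && [ "$minor" -ge 1 ] && [ "$minor" -lt 9 ]
}

kver=${1:-$(uname -r)}
if x553_regression_window "$kver"; then
    echo "$kver: inside the 6.1-6.8 window, check whether the revert is applied"
else
    echo "$kver: outside the 6.1-6.8 window"
fi
```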


Thanks, hopefully this is a universal way forward. I copied your post to the bug report for this issue: Bug 5103 - intel x553 SFP+ ixgbe does not link up on pve8 or pve8.1

After a couple of years, Ubuntu seems to have recently reverted the Linux kernel regression behind this issue (dating back to kernel 6.1), AND Proxmox may finally pick up the fix in an upcoming kernel.

https://lists.proxmox.com/pipermail/pve-devel/2024-August/065041.html
